What are the challenges of implementing AI in Fraud Detection for Healthcare Billing?

Healthcare fraud, waste, and abuse in the United States costs an estimated $100 billion to $170 billion each year, with some analyses citing figures as high as $350 billion. Traditional fraud detection methods, which rely on reactive, rules-based systems and time-consuming manual reviews, have proven insufficient to combat the scale and complexity of this issue. Artificial intelligence (AI) offers a transformative solution, providing the speed and analytical power to identify complex and subtle fraud schemes in real time. However, the implementation of AI in this high-stakes environment is not a simple technological deployment; it is a continuous, strategic endeavor fraught with significant and interconnected challenges.

This report examines the core obstacles to effective AI implementation, which are categorized into four primary domains: foundational data and technical complexities, regulatory and ethical minefields, operational and human-centric hurdles, and the emerging threat of AI-generated fraud. The findings reveal that success depends on a strategic commitment to overcoming these challenges through robust data governance, a focus on explainable and equitable AI models, and the cultivation of a collaborative framework where human expertise is augmented, not replaced. The report concludes that by addressing these intrinsic hurdles, organizations can unlock AI's full potential to save billions in wasted resources, redirecting critical funds back to patient care and ultimately building a more transparent and trustworthy healthcare system.

The Strategic Imperative and Its Intrinsic Hurdles

The vast and fragmented healthcare ecosystem is a primary target for fraudulent activities, which are perpetrated by a diverse array of participants, including providers, patients, and third-party entities. These schemes, ranging from phantom billing and upcoding to falsified records and forged prescriptions, not only cost taxpayers and insurers billions of dollars annually but also compromise the integrity of care and can put patients' lives at risk through unnecessary procedures.

For decades, the fight against healthcare fraud has been a reactive, labor-intensive process. Traditional methods, such as rules-based systems and statistical modeling, have struggled to keep pace with the growing volume of claims and the sophistication of modern fraud schemes. These legacy approaches often have high false-positive rates, are slow to process, and cannot scale to meet the demand for real-time analysis. The inherent limitations of these manual and static systems have historically led health plans to focus their limited resources on high-cost, high-volume areas, often overlooking the more nuanced, lower-volume fraud that collectively represents a significant financial loss.

AI offers a paradigm shift in this fight, enabling a transition from a reactive to a proactive and preventative posture. By leveraging machine learning (ML), natural language processing (NLP), and predictive analytics, AI-powered solutions can analyze massive volumes of claims data in real time, identifying subtle patterns and anomalies that humans and legacy systems are unlikely to detect. The promise of AI is to move beyond simply catching fraud after the fact and to prevent it from happening in the first place. However, realizing this potential is contingent upon successfully navigating a complex landscape of implementation challenges, which are explored in detail throughout this report.

The Foundational Challenges—Data and Technical Complexities

The efficacy of any AI system depends directly on the quality and accessibility of the data it consumes. The healthcare industry presents a unique and paradoxical set of data challenges that form the bedrock of AI implementation hurdles.

The Data Paradox: Scarcity vs. Abundance

The healthcare sector generates an overwhelming volume of data daily, with claims, patient records, and provider information all contributing to a rich data landscape. While AI systems thrive on this abundance, traditional data science workflows and legacy infrastructure often lack the capacity to analyze such immense quantities of information in a timely manner. On the other hand, a critical data deficit exists for fraud detection in niche healthcare services, such as home meal delivery. These claims are low-volume, highly variable, and lack sufficient historical data to establish patterns that traditional methods could recognize.

A superficial analysis might simply present this as a dual problem of having too much data in some areas and too little in others. A deeper examination reveals that this is a paradox that AI is uniquely positioned to address, but only with the right data strategy. AI's core value lies in its ability to detect a weak signal within a fragmented and noisy data environment. To achieve this, AI models must be able to combine internal datasets with external sources, such as online provider reviews, to uncover overbilling patterns that would be invisible using internal data alone. This necessitates a fundamental shift in how organizations manage data, requiring significant investment in new data ingestion and analytics capabilities.

The Scarcity and Flawed Nature of Labeled Fraud Data

A major technical challenge for AI implementation is the severe class imbalance inherent in fraud data. Fraudulent claims are a minuscule fraction of the total volume, with a fraud rate estimated to be between 0.038% and 0.074%. Training a supervised machine learning model on such an imbalanced dataset is difficult: a model that classifies every claim as non-fraudulent would be more than 99.9% accurate while detecting no fraud at all, so accuracy alone rewards exactly the wrong behavior.
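The arithmetic behind this imbalance problem can be sketched in a few lines of Python. This is an illustration only: the claim count is invented, the fraud rate is the upper estimate quoted above, and the inverse-frequency class weighting shown is one common mitigation chosen here for illustration, not a method described in this report.

```python
# Illustrative only: a synthetic claim count, not real claims data.
TOTAL_CLAIMS = 1_000_000
FRAUD_RATE = 0.00074  # upper estimate quoted in the text (0.074%)


def baseline_metrics(total, fraud_rate):
    """Metrics for a classifier that labels every claim as non-fraudulent."""
    fraud = round(total * fraud_rate)
    legit = total - fraud
    true_positives = 0               # no fraudulent claim is ever flagged
    true_negatives = legit           # every legitimate claim is "correct"
    accuracy = (true_positives + true_negatives) / total
    recall = true_positives / fraud  # share of fraud actually caught
    return {"fraud": fraud, "accuracy": accuracy, "recall": recall}


def inverse_frequency_weights(total, fraud):
    """Weight each class by total / (number_of_classes * class_count)."""
    legit = total - fraud
    return {"legit": total / (2 * legit), "fraud": total / (2 * fraud)}


metrics = baseline_metrics(TOTAL_CLAIMS, FRAUD_RATE)
weights = inverse_frequency_weights(TOTAL_CLAIMS, metrics["fraud"])
print(metrics)
print(weights)
```

With these numbers the do-nothing classifier reaches 99.926% accuracy with zero recall, which is why recall and precision on the fraud class are more informative than accuracy. The weights show how heavily each fraudulent example would need to count for the two classes to contribute equally during training.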

Adding to this complexity is the flawed nature of the most common source of labeled fraud data: the List of Excluded Individuals and Entities (LEIE), maintained by the U.S. Department of Health and Human Services Office of Inspector General. This dataset lists providers excluded from federal healthcare programs, for reasons that include fraud convictions, but it does not specify which of their claims were fraudulent. Consequently, researchers are forced to assume all claims from a provider on the list are fraudulent, which introduces significant "noise and bias" into the training data. This creates a "garbage-in, garbage-out" scenario in which the model may learn incorrect or misleading patterns, compromising its performance. A model trained on such a flawed dataset may fail to detect new, subtle forms of fraud not represented in the historical data, while also incorrectly flagging legitimate providers, which creates a potential for legal liability.
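A toy example makes the labeling problem concrete. The sketch below assumes a hypothetical exclusion list keyed by provider identifier and a handful of invented claims. The `truly_fraudulent` field is a stand-in for ground truth that is not available in practice; it exists here only so the label noise can be counted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    claim_id: str
    provider_id: str
    truly_fraudulent: bool  # hypothetical ground truth, unknown in practice


# Hypothetical exclusion list: provider identifiers only, no claim detail.
excluded_providers = {"P1"}

claims = [
    Claim("C1", "P1", True),
    Claim("C2", "P1", False),  # legitimate claim from an excluded provider
    Claim("C3", "P1", False),  # legitimate claim from an excluded provider
    Claim("C4", "P2", False),
    Claim("C5", "P3", True),   # fraud by a provider who is not on the list
]


def provider_level_label(claim, excluded):
    """Label a claim as fraudulent if its provider appears on the list."""
    return claim.provider_id in excluded


labels = {c.claim_id: provider_level_label(c, excluded_providers) for c in claims}
mislabeled = [c.claim_id for c in claims if labels[c.claim_id] != c.truly_fraudulent]
noise_rate = len(mislabeled) / len(claims)
print(mislabeled, noise_rate)
```

In this invented sample, three of the five claims receive the wrong label. Two are false positives inherited from the provider and one is a missed fraud. The proportions are arbitrary, but both error types follow directly from labeling at the provider level.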

The Unstructured Data Challenge and Its Interdependencies

A vast majority of healthcare data—estimated to be 80-90%—is unstructured, consisting of clinical notes, medical images, and voice recordings. This data is a goldmine of contextual information that is essential for a complete understanding of a patient's care journey and the legitimacy of a claim. However, it is challenging for algorithms to process without the aid of specialized AI techniques.

Natural Language Processing (NLP) is a critical component that allows AI to interpret and analyze free-text clinician notes and discharge summaries. The real value of AI in fraud detection lies not simply in analyzing structured billing codes but in creating a holistic, contextualized view by cross-referencing structured and unstructured data. For instance, AI can use NLP to detect a mismatch between a billed procedure or submitted diagnosis and what is actually documented in a clinical note, which can uncover a fraudulent upcoding scheme. Without the ability to process unstructured data, a model would have a major blind spot and could be easily circumvented by fraudsters who understand this limitation.
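The cross-referencing idea can be illustrated with a deliberately simple sketch. It assumes a hypothetical mapping from billed codes to terms one would expect in the supporting note; the code names and term lists are invented placeholders, and plain keyword matching stands in for the far more capable clinical NLP a production system would need.

```python
import re

# Hypothetical mapping from billed procedure codes to terms that a
# supporting clinical note would be expected to contain.
EXPECTED_TERMS = {
    "PROC_SIMPLE_VISIT": {"exam", "follow-up", "consultation"},
    "PROC_MAJOR_SURGERY": {"incision", "anesthesia", "operative"},
}


def tokenize(note):
    """Lowercase the note and split it into words, keeping hyphenated terms."""
    return set(re.findall(r"[a-z]+(?:-[a-z]+)*", note.lower()))


def note_supports_code(code, note):
    """Return True if the note mentions any term expected for the code.

    Unknown codes have no expected terms, so they are reported as
    unsupported and would be routed to a human reviewer.
    """
    expected = EXPECTED_TERMS.get(code, set())
    return bool(expected & tokenize(note))


note = "Routine follow-up exam; patient stable, no procedures performed."
visit_supported = note_supports_code("PROC_SIMPLE_VISIT", note)
surgery_supported = note_supports_code("PROC_MAJOR_SURGERY", note)
print(visit_supported, surgery_supported)
```

Here the note supports a simple visit but contains nothing consistent with major surgery, so a claim billing the surgical code against this note would be queued for review. The point of the sketch is the comparison between structured and unstructured data, not the matching technique itself.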

Technical Integration and Infrastructure Hurdles

The technical implementation of AI is further complicated by the fragmented nature of the healthcare technology landscape. Many healthcare organizations still rely on legacy IT and outdated Electronic Health Record (EHR) platforms that were not designed to integrate with modern AI solutions. An AI system must synchronize reliably with various systems, including EHRs, practice management (PM) systems, and clearinghouses. Building custom connectors and middleware and running prolonged testing cycles can introduce unplanned costs that run into "six figures for larger networks." These costs are a significant and often overlooked portion of the overall budget, adding 20-40% to the initial implementation price. This operational barrier can stall or even derail a project, regardless of its technical merit, and helps explain the sluggish adoption of AI in the sector.

The following table summarizes these foundational challenges, demonstrating their interconnected nature and their direct impact on the effectiveness of AI.

Navigating the Regulatory and Ethical Minefield

Even if the technical data challenges can be overcome, the implementation of AI in healthcare is a highly regulated and ethically sensitive endeavor. A failure to proactively address these issues can expose an organization to severe legal, financial, and reputational risks.

Data Privacy and Security: The Bedrock of Compliance

AI systems require vast amounts of data, including Protected Health Information (PHI), for training and operation, which creates an inherent tension with strict data privacy laws like the Health Insurance Portability and Accountability Act (HIPAA) in the U.S. and the General Data Protection Regulation (GDPR) in Europe. The challenge is not merely to comply with these rules but to manage the conflict between AI's need for a "whole picture" view and the legal demand for data minimization and strong security protocols.

Specific risks include unauthorized PHI exposure, insufficient authentication protocols for AI-powered portals, and a lack of adequate audit trails, which HIPAA requires for all PHI access. These vulnerabilities are not theoretical; the average cost of a healthcare data breach is the highest across all industries, and non-compliance can result in severe financial penalties. HIPAA fines can reach up to $1.5 million per year per violation category, while GDPR penalties can be as high as €20 million or 4% of annual global turnover, whichever is higher. The responsibility for compliance extends to third-party AI vendors, requiring formal Business Associate Agreements (BAAs) to define a clear chain of accountability.
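As a rough illustration of what an audit trail for PHI access might record, the following sketch keeps an append-only log in which each entry includes a hash of the previous one, so later tampering is detectable. The field names and the hash-chaining design are assumptions made for this example; HIPAA does not prescribe this particular mechanism.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry_body):
    """SHA-256 over a canonical JSON encoding of the entry body."""
    payload = json.dumps(entry_body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log, user_id, claim_id, action):
    """Append a PHI-access record that is chained to the previous record."""
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,    # hypothetical field names
        "claim_id": claim_id,
        "action": action,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry = dict(body, hash=_digest(body))
    log.append(entry)
    return entry


def verify_chain(log):
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "analyst_01", "C1", "viewed_claim")
append_entry(audit_log, "model_service", "C1", "scored_claim")
print(verify_chain(audit_log))
```

Recording the model service as an actor alongside human users reflects the point above that AI components touching PHI need the same traceability as people do.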

Algorithmic Bias and the Imperative of Health Equity

AI systems are only as good as the data they are trained on. If historical data reflects or is an implicit product of societal or systemic biases, the AI will not only perpetuate these biases but can amplify them. This is a profound ethical concern in healthcare, as it can exacerbate existing health inequities and disproportionately impact underserved populations.

Documented examples illustrate this risk. An algorithm that used race to estimate kidney function delayed organ transplant referrals for Black patients, and a commercial algorithm required Black individuals to be sicker than White individuals to qualify for chronic disease management programs. In the context of fraud detection, a biased model could unfairly flag claims from certain demographic or provider groups, creating a de facto form of discrimination. Biased or inaccurate model outputs not only jeopardize patient safety but could also expose healthcare organizations to legal liability under statutes such as the False Claims Act if inaccurate coding leads to improper billing. This dynamic transforms the challenge from a purely technical problem of model accuracy to a complex ethical and legal issue of promoting health equity.
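One simple way to monitor for this kind of skew is to compare how often a model flags claims across groups. The sketch below uses invented group names and counts. A gap between groups does not by itself prove bias, since underlying rates may genuinely differ, but a large ratio is a reasonable trigger for closer human review.

```python
from collections import defaultdict


def flag_rates(records):
    """Compute the share of flagged claims per group.

    records: iterable of (group, flagged) pairs.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, is_flagged in records:
        totals[group] += 1
        flagged[group] += int(is_flagged)
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Highest flag rate divided by the lowest; 1.0 means parity."""
    lowest = min(rates.values())
    highest = max(rates.values())
    if highest == 0:
        return 1.0           # nothing flagged anywhere
    if lowest == 0:
        return float("inf")  # at least one group is never flagged
    return highest / lowest


# Invented data: 100 claims per group with different flag counts.
records = (
    [("group_a", True)] * 5 + [("group_a", False)] * 95
    + [("group_b", True)] * 15 + [("group_b", False)] * 85
)
rates = flag_rates(records)
ratio = disparity_ratio(rates)
print(rates, ratio)
```

In this sample, claims from the second group are flagged about three times as often as those from the first. Whether that reflects real differences or a biased model is exactly the question a reviewer would then need to investigate.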

The "Black Box" Dilemma: Transparency, Explainability, and Trust

Many advanced AI models are considered "black boxes" because their decision-making processes are opaque and difficult for humans to understand or audit. This lack of transparency undermines accountability and trust on multiple levels. For compliance and auditing, it complicates documentation and makes it difficult for a human to trace a flagged claim back to the specific data points and logic that triggered the alert.
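By way of contrast, the sketch below shows what a traceable alert can look like for an intentionally transparent model. It assumes a simple linear (logistic) risk score with hypothetical feature names and hand-picked weights rather than a trained model. For genuinely opaque models, comparable per-feature attributions would have to come from separate explanation techniques.

```python
import math

# Hypothetical weights for a linear risk score; these are hand-picked
# for illustration and do not come from a trained model.
WEIGHTS = {
    "claims_per_day_zscore": 1.2,
    "avg_billed_amount_zscore": 0.8,
    "share_of_high_complexity_codes": 2.0,
}
BIAS = -3.0


def explain(features):
    """Return the fraud probability and per-feature contributions.

    Contributions are weight * value, ranked by absolute size, so an
    auditor can see which inputs pushed the score up or down.
    """
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


claim_features = {
    "claims_per_day_zscore": 2.5,
    "avg_billed_amount_zscore": 0.5,
    "share_of_high_complexity_codes": 0.9,
}
probability, ranked = explain(claim_features)
print(round(probability, 3))
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

For this invented claim the score is roughly 0.90, and the ranking shows that unusually high daily claim volume contributed most to the alert. An investigator can check that statement against the underlying records.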

This opacity also has a direct impact on the people who use, and are affected by, the system. Public skepticism is already high: a Pew Research Center survey found that 60% of Americans are uncomfortable with providers relying on AI in their healthcare, and practitioners may share that unease. For AI tools to be effectively used and trusted, healthcare professionals must understand how they arrive at their conclusions, enabling them to validate the outputs and make informed decisions. This is particularly crucial in a domain where human judgment and ethical considerations are non-negotiable. Without explainability, a vicious cycle of mistrust can emerge: opacity leads to a lack of human understanding, which leads to skepticism, and ultimately, to the erosion of trust among patients and providers.

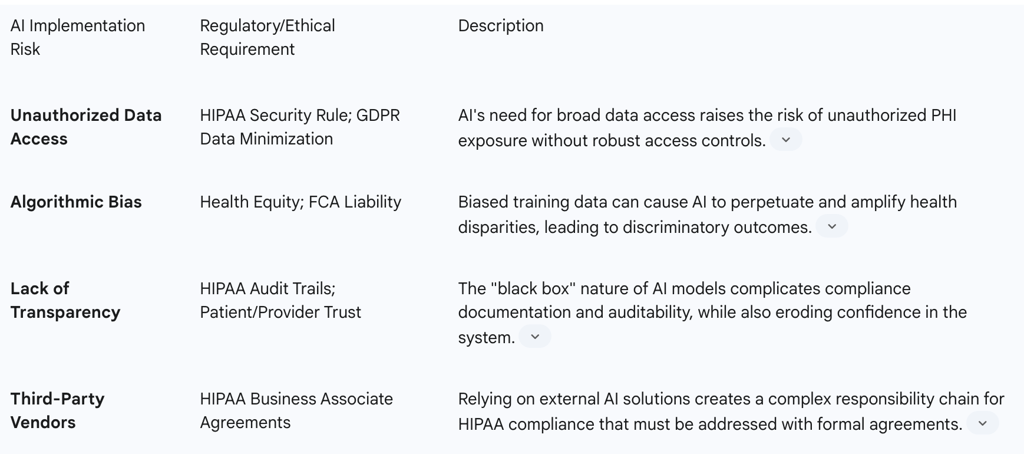

The following table provides a clear mapping of these AI risks to their corresponding regulatory and ethical requirements.