Comparative Analysis of GPT-4V and LLaVA: Architectures, Capabilities, and Performance in Multimodal AI

Multimodal Large Language Models (MLLMs), systems capable of processing and generating information across diverse modalities such as text and images. OpenAI's GPT-4V stands as a leading proprietary model, distinguished by its advanced reasoning, extensive knowledge.

The landscape of artificial intelligence has been profoundly reshaped by the advent of Multimodal Large Language Models (MLLMs), systems capable of processing and generating information across diverse modalities such as text and images. Within this rapidly evolving domain, OpenAI's GPT-4V stands as a leading proprietary model, distinguished by its advanced reasoning, extensive knowledge, and superior performance across a wide array of complex tasks. In contrast, LLaVA (Large Language and Vision Assistant) emerges as a prominent open-source alternative, recognized for its innovative modular architecture, computational efficiency, and impressive capabilities, particularly given its significantly smaller scale and reliance on GPT-4 generated data for instruction tuning.

Head-to-head evaluations consistently demonstrate GPT-4V's qualitative and quantitative superiority in tasks requiring deep visual comprehension, fine-grained object detection, complex reasoning, and robust multilingual understanding. Its proprietary nature, backed by immense computational resources and extensive Reinforcement Learning from Human Feedback (RLHF), contributes to its higher accuracy, broader generalizability, and advanced conversational fluidity. However, this comes with limitations such as a knowledge cutoff, potential biases inherited from training data, and a closed-source, API-driven access model that incurs usage costs.

LLaVA, while generally trailing GPT-4V in raw performance on many benchmarks, offers compelling advantages rooted in its open-source philosophy. Its modular design, which integrates pre-trained vision encoders (CLIP) and language models (Vicuna) via a lightweight MLP projector, enables remarkably efficient training and deployment, even on consumer-grade hardware. The strategic use of GPT-4 to generate its instruction-following dataset has been pivotal to its development, showcasing a novel approach to data curation. This allows LLaVA to serve as a cost-effective, accessible, and adaptable solution for researchers and developers, fostering broader innovation within the AI community.

The comparative analysis reveals a dynamic interplay between these models. GPT-4V pushes the boundaries of MLLM performance, setting high benchmarks for advanced capabilities. LLaVA, by democratizing access to powerful multimodal AI through its efficient design and open-source availability, acts as a critical catalyst for wider adoption and further research. The ongoing development of both proprietary and open-source MLLMs signifies a continuous push towards more human-like AI systems, each contributing uniquely to the field's progression while navigating distinct challenges related to scalability, accessibility, and ethical deployment.

Introduction to Multimodal Large Language Models (MLLMs)

Definition and Significance of MLLMs

Multimodal Large Language Models (MLLMs) represent a significant frontier in artificial intelligence, defined as advanced AI systems engineered to process, interpret, and generate information across multiple modalities. These modalities typically include text, images, audio, and video, distinguishing MLLMs from their unimodal predecessors that were restricted to a single data type. This capability to integrate diverse forms of input allows MLLMs to develop a more holistic understanding of context and content, mirroring the way humans perceive and interact with the world.

The emergence of MLLMs marks a transformative shift from traditional AI paradigms. Previously, specialized models were required for each modality—Large Language Models (LLMs) for text and computer vision models for images. MLLMs bridge this gap, enabling a deeper, more integrated comprehension that is crucial for complex, real-world applications. For instance, an MLLM can not only describe an image but also answer nuanced questions about its content, summarize text embedded within it, or even infer the humor in a visual scene. This integrated understanding is vital for applications demanding complex contextual awareness, such as assistive technologies for the visually impaired, advanced medical diagnostics, or sophisticated content generation tools. The ability of these models to process information from various sensory inputs allows for more intuitive and powerful interactions, pushing the boundaries of what AI can achieve and moving closer to artificial general intelligence (AGI). This integration of senses is a crucial step towards AI systems that can reason and operate in a manner akin to human cognition.

Brief Historical Context and the Rise of Vision-Language Models

The journey to MLLMs began with significant advancements in Large Language Models (LLMs), notably the development of transformer architectures. Transformer networks, with their attention mechanisms, revolutionized natural language processing by enabling models to better understand relationships between words and process longer sequences of text. This foundational breakthrough paved the way for powerful text-only models like GPT-3.5.

The next evolutionary step involved extending these architectural principles to other modalities, particularly vision. The Vision Transformer (ViT) demonstrated that transformer architectures, originally designed for text, could effectively process image inputs by treating image patches as sequences of tokens. A pivotal development in vision-language integration was Contrastive Language-Image Pre-Training (CLIP), which learned to align images and text in a shared embedding space. This meant that visual concepts could be understood in relation to linguistic descriptions, forming a crucial bridge between the two modalities.

The progression from text-only LLMs to sophisticated MLLMs like GPT-4V and LLaVA underscores the accelerating pace of AI innovation. This rapid evolution is largely driven by the strategic combination of these powerful, pre-trained, specialized models. For example, LLaVA’s architecture directly leverages a frozen CLIP vision encoder and a Vicuna LLM, connected by a lightweight MLP projector. This modularity is a key enabler, allowing developers to build advanced multimodal capabilities without incurring the immense computational cost and time of training an entire model from scratch. This efficient integration of existing, high-performing unimodal components has been instrumental in the rapid development and deployment of effective vision-language models.

Overview of the Current MLLM Landscape, Positioning GPT-4V and LLaVA

The contemporary MLLM landscape is characterized by a dynamic interplay between proprietary and open-source models, each offering distinct advantages and contributing to the field's rapid advancement. OpenAI's GPT-4V stands as a leading proprietary model, widely recognized for its cutting-edge performance, broad capabilities, and sophisticated understanding of nuanced instructions. Launched in March 2023, GPT-4V (a version of GPT-4 capable of processing images in addition to text) quickly established a benchmark for multimodal intelligence, demonstrating human-level performance on various professional and academic tests. Its development is backed by substantial computational resources and a closed-source approach, allowing OpenAI to focus on rigorous optimization and safety measures.

In parallel, LLaVA (Large Language and Vision Assistant) has emerged as a prominent open-source alternative, developed by researchers from the University of Wisconsin-Madison, Microsoft Research, and Columbia University. LLaVA is celebrated for its efficiency, accessibility, and strong performance, particularly considering its modular architecture and significantly smaller scale compared to proprietary giants. It has demonstrated the ability to replicate many of GPT-4's capabilities in conversing with images, often outperforming other open-source solutions while using orders of magnitude less training data. This makes LLaVA faster and cheaper to train, and crucially, more suitable for inference on consumer hardware, even running on devices like a Raspberry Pi.

The coexistence of these proprietary and open-source leaders highlights a compelling dynamic within the AI ecosystem. While proprietary models like GPT-4V frequently push the performance envelope, setting new benchmarks for capabilities and often benefiting from vast, undisclosed training data and computational power, open-source alternatives like LLaVA democratize access to advanced AI technologies. LLaVA's approach, which strategically leverages GPT-4 itself for generating high-quality instruction-following data , exemplifies a synergistic relationship where proprietary advancements can accelerate open-source development. This rich and competitive landscape fosters continuous innovation, allowing for both frontier research and widespread practical application of MLLMs.

GPT-4V: A Deep Dive into OpenAI's Flagship Multimodal Model

3.1 Core Architecture and Design Principles

Transformer-style Architecture

GPT-4V is fundamentally constructed upon a transformer-style neural network, an architecture that has proven revolutionary in natural language processing and has been successfully adapted for multimodal tasks. This design enables the model to effectively understand intricate relationships between tokens, which in the context of GPT-4V, encompass both textual and visual representations. A core component of this architecture is the attention mechanism, which allows the neural network to dynamically weigh the importance of different pieces of input data, focusing on the most relevant information to generate coherent and contextually appropriate responses. This mechanism is critical for its ability to process complex, nuanced instructions and synthesize information from disparate modalities.

The foundation in a proven transformer architecture suggests that GPT-4V's multimodal prowess is an extension of its robust language understanding capabilities. By treating image inputs as sequences of "patches" or tokens, the same powerful attention mechanisms that have enabled deep comprehension of linguistic patterns can be applied to visual data. This means that GPT-4V's visual capabilities are not merely an add-on but are deeply integrated into a powerful text-based reasoning framework. The model leverages its extensive linguistic patterns, learned during its massive text pre-training, to interpret and reason about visual tasks. This integrated approach allows it to go beyond simple object recognition, enabling it to understand visual humor, summarize text from images, and answer complex questions that combine visual and textual elements.

Multimodal Input Processing

GPT-4V is engineered to accept diverse input modalities, primarily text and images. A particularly advanced capability is its support for

interleaved image-text inputs. This feature allows users to provide a mix of images and text within a single conversation, enabling a more natural and dynamic interaction where visual cues and textual context are combined in real-time. For example, a user could upload an image, ask a question about it, then upload another image, and continue the conversation, with the model retaining context from both modalities throughout the dialogue.

The support for interleaved inputs is a critical development for creating truly conversational multimodal AI. This capability closely mimics the way humans integrate visual information into ongoing dialogues, where visual observations continuously inform and refine spoken or written communication. This signifies a more advanced form of multimodal fusion, moving beyond simple one-shot image-to-text processing to enable sustained, context-aware interactions. The model must maintain a coherent understanding of a conversation that jumps between textual and visual elements, requiring a sophisticated internal state and memory. This indicates a higher level of multimodal integration and conversational intelligence, allowing for more complex problem-solving and nuanced exchanges.

Vision Encoder Integration

For processing image inputs, GPT-4V incorporates a pre-trained Vision Transformer (ViT). This ViT likely leverages techniques from Contrastive Language-Image Pre-Training (CLIP), a method that aligns images and text in a shared embedding space, making it easier for the model to relate visual content to linguistic concepts. In training processes for models like KOSMOS-1 (which shares architectural similarities and is referenced in discussions about GPT-4's potential architecture), the parameters of the ViT are often frozen, with only the last layer potentially being fine-tuned. This strategy allows the model to capitalize on the robust, generalized visual representations already learned by CLIP from massive datasets, without incurring the immense computational cost of training a vision model from scratch.

Freezing the majority of the pre-trained vision encoder parameters during training is an efficient and effective strategy. It enables GPT-4V to leverage the powerful visual feature extraction capabilities of CLIP, which has been trained on a vast array of image-text pairs to understand a wide range of visual concepts. By "locking in" most of CLIP's knowledge, OpenAI can focus its computational resources on training the connection between the visual and language modalities, rather than on raw visual feature extraction. This approach allows for effective alignment of visual information with the language model's internal representations, contributing to GPT-4V's ability to interpret images in a linguistically meaningful way.

Proprietary Nature and Undisclosed Details

OpenAI maintains a high degree of confidentiality regarding the precise technical specifications and internal statistics of GPT-4, including GPT-4V. The exact model size, the detailed composition of its vast training dataset, and the specifics of its training infrastructure remain undisclosed. While some estimates, such as a rumor suggesting GPT-4 might have 1.76 trillion parameters, circulate within the community, these figures have not been officially confirmed by OpenAI.

This lack of transparency, while a common practice for proprietary, commercially sensitive AI models, presents significant challenges for independent researchers and the broader AI community. It hinders the ability to reproduce the model's results, making it difficult for external parties to fully audit for potential biases, limitations, or emergent behaviors. Without access to the underlying mechanisms, researchers cannot easily understand

why the model performs in certain ways or how to mitigate its flaws, which can impede scientific progress. Furthermore, this proprietary approach limits the ability to build directly upon its foundational elements, contrasting sharply with the open-source philosophy championed by models like LLaVA, which makes its data and code publicly available. This "black box" nature raises questions about accountability and trust, as the internal workings and training processes are not publicly verifiable.

3.2 Training Methodology and Alignment

Two-Stage Training with RLHF

GPT-4V's training regimen involves two principal stages, designed to imbue it with robust language understanding and human-aligned behavior. Initially, the model undergoes extensive pre-training on vast datasets of text, comprising both publicly available data and information licensed from third-party providers. During this phase, the model learns to predict the next token in a sequence, thereby developing a profound grasp of language structure, semantics, and a wide array of factual knowledge.

Following this foundational pre-training, the model undergoes a critical fine-tuning process aimed at human alignment and policy compliance. This stage notably incorporates Reinforcement Learning from Human Feedback (RLHF). RLHF involves human reviewers providing feedback on the model's outputs, which is then used to train a reward model. This reward model, in turn, guides the main language model to generate responses that are more helpful, harmless, and aligned with human instructions and ethical guidelines. This iterative process is instrumental in making GPT-4V more reliable, creative, and capable of interpreting and responding to nuanced instructions effectively. The strong foundation in text-only pre-training, coupled with extensive RLHF, is paramount to GPT-4V's advanced conversational abilities, its adherence to safety policies, and its nuanced understanding of human instructions. This suggests that its visual understanding is deeply integrated into a sophisticated language model that has been extensively shaped by human preferences and ethical considerations. When vision is integrated, it is into an LLM that has already undergone this rigorous alignment, meaning GPT-4V's visual interpretations are filtered through a "moral compass" and conversational style explicitly shaped by human feedback.

Evaluation on Human-Designed Exams

OpenAI has subjected GPT-4 to rigorous evaluation across a diverse set of benchmarks, including simulating exams originally designed for humans. These evaluations encompassed a range of professional and academic tests, such as the Uniform Bar Examination, SATs, and various AP exams. A notable aspect of these tests is that OpenAI conducted them without specific training for the exams themselves, utilizing the post-trained RLHF model.

The results demonstrated GPT-4's remarkable capabilities, with the model achieving a score on the simulated bar exam that placed it within the top 10% of test takers, a significant improvement compared to GPT-3.5, which scored in the bottom 10%. Performance on such complex, human-designed exams, particularly those requiring advanced reasoning in legal or academic contexts, indicates a substantial leap in general intelligence and the ability to generalize knowledge across diverse domains. This suggests a robust capacity for abstract reasoning and problem-solving, which is a hallmark of advanced AI. These exams are not merely about recalling facts; they test critical thinking, analytical skills, and the ability to apply knowledge in novel situations. GPT-4V's strong performance here, especially without explicit training, implies a deep, transferable understanding rather than mere memorization. This is a strong indicator of its potential for broad applicability in complex cognitive tasks.

Context Window

The context window, which refers to the amount of information a model can consider at any given time during a conversation or task, is a critical factor in its performance. GPT-4 was initially released with context windows of 8,192 and 32,768 tokens, representing a significant improvement over its predecessors, GPT-3.5 and GPT-3, which were limited to 4,096 and 2,048 tokens, respectively. More recent iterations, such as GPT-4 Turbo and GPT-4o, feature even larger context windows, extending to 128,000 tokens.

A larger context window substantially enhances the model's ability to process and retain more information from extended conversations or lengthy documents. This is crucial for understanding complex, multi-turn prompts, where the model needs to recall previous interactions and integrate new information. It also allows the model to maintain coherence over prolonged dialogues and perform tasks that require synthesizing large amounts of both visual and textual information, leading to more comprehensive and accurate responses. The context window is effectively the model's short-term memory. A larger window means it can "remember" more of the conversation and input data, directly impacting its capacity to handle intricate instructions, understand nuances across a long exchange, or process entire documents with embedded images, making it more capable in real-world, multi-faceted tasks.

3.3 Key Capabilities and Real-World Applications

Advanced Visual Question Answering (VQA) & Detailed Description

GPT-4V demonstrates exceptional proficiency in interpreting visual content, showcasing a nuanced understanding that extends beyond simple object identification. It excels at tasks ranging from describing the humor in unusual images to accurately summarizing text from screenshots and providing answers to exam questions that incorporate complex diagrams. Furthermore, GPT-4V can generate rich and detailed descriptions of intricate scenes, moving beyond basic captioning to articulate fine-grained visual elements and their contextual relationships.

The ability to discern humor or provide detailed, nuanced descriptions implies a level of abstract and contextual understanding that goes beyond mere visual recognition. This brings GPT-4V closer to human-like perception and interpretation of visual information, enabling more sophisticated interactions. Understanding humor, for instance, requires a grasp of subtle visual cues, cultural context, and implied meaning, which are high-level cognitive functions. Its capacity to summarize text from screenshots and answer diagram-based questions demonstrates the practical application of its visual OCR (Optical Character Recognition) and spatial reasoning capabilities, making it a versatile tool for information extraction and comprehension from visual sources.

Multimodal Reasoning and Content Generation

GPT-4V exhibits strong capabilities in multimodal reasoning, particularly in interpreting and analyzing data presented in visual formats such as graphs, charts, and tables. It demonstrates robust visual math reasoning, allowing it to solve simple mathematical equations presented visually and even provide step-by-step solutions. Its document understanding capabilities are also strong, including accurate scene text recognition. A particularly transformative application is its ability to generate functional website code directly from hand-drawn sketches or transform a sketch into an architecture diagram.

The capability to translate visual designs, such as hand-drawn sketches, into functional code represents a significant advancement. This application has the potential to accelerate development workflows dramatically and bridge the gap between design conceptualization and implementation. This showcases a powerful form of cross-modal translation and creative problem-solving, where the model interprets abstract visual ideas and translates them into structured, executable code. Sketch-to-code is a complex task that involves visual parsing, understanding human intent, and generating structured code; it is not merely recognition but a creative synthesis. This capability points towards a future where AI can act as a highly intelligent co-creator or assistant across various design and engineering domains.

Real-World Use Cases and Societal Impact

GPT-4V has been integrated into a variety of practical applications, underscoring its utility and perceived reliability across diverse sectors. A notable example is its use by Be My Eyes, a Danish organization that leverages a GPT-4-powered 'Virtual Volunteer' to assist visually impaired and low-vision individuals with everyday activities. This includes tasks such as reading website content, navigating challenging real-world circumstances, and making informed decisions, much like a human volunteer would. Other applications include Duolingo, where GPT-4 is used to explain mistakes and facilitate practice conversations , and Khan Academy, which employs GPT-4 as a tutoring chatbot named "Khanmigo". Beyond these, GPT-4V is also being explored for analyzing medical images and scans to provide detailed health or disease information, as well as for identifying and classifying NSFW (Not Safe For Work) objects in images for content moderation.

The deployment of GPT-4V in high-impact applications like Be My Eyes highlights its perceived robustness and its potential for significant positive societal contributions, particularly in enhancing accessibility and independence for individuals with disabilities. Its use in such a critical service indicates that the model has met stringent standards for practical, real-world assistance, making it a powerful tool for assistive technology and demonstrating its capacity for direct human benefit. These applications are not just demonstrations; they are critical services where accuracy and reliability are paramount.

Multilingual Multimodal Understanding

GPT-4V exhibits strong multilingual capabilities, which significantly broadens its global applicability. It can generate accurate image descriptions in various languages and effectively recognizes input text prompts in different languages, responding appropriately. This extends to multilingual scene text recognition, allowing the model to read and interpret text embedded in images across diverse linguistic contexts. Furthermore, GPT-4V demonstrates a degree of multicultural understanding, adapting its responses to cultural nuances. A notable improvement in GPT-4o, a later iteration, is its enhanced tokenizer, which uses fewer tokens for certain languages, especially those not based on the Latin alphabet, making it more cost-efficient for global use.

Robust multilingual capabilities are crucial for expanding the global reach and utility of MLLMs, making advanced multimodal AI accessible to a much wider user base and diverse linguistic contexts. This is a vital step towards truly global AI adoption and equitable access to AI technologies. By excelling in multiple languages for multimodal tasks, GPT-4V helps to dismantle language barriers, enabling applications in diverse markets and for global user bases. The improved token efficiency for non-English languages also makes its deployment more economically viable for a broader range of international users.

Interaction with External Interfaces

GPT-4V possesses the capability to interact with external interfaces and tools, transforming it from a static knowledge base into a dynamic, extensible agent. For example, the model can be instructed to enclose a query within specific tags, such as <search></search>, to initiate a web search. The results of this search are then inserted back into the model's prompt, allowing it to form a more informed and up-to-date response. This functionality enables GPT-4V to perform tasks beyond its inherent text-prediction capabilities, such as utilizing various APIs, generating images, and accessing and summarizing web pages.

The ability to call external tools and APIs is a transformative feature. It allows GPT-4V to overcome its inherent knowledge cutoff, which limits its understanding to events up to its last training data update (e.g., September 2021 for GPT-4, September/October 2023 for GPT-4o). By integrating with external data sources like search engines, GPT-4V can access the most current information, effectively bypassing its static knowledge base. This makes it a more versatile and "intelligent" system, mimicking the human ability to use tools to extend their cognitive capabilities and adapt to real-time information. This dynamic interaction greatly expands its utility and adaptability to rapidly changing environments.

3.4 Identified Limitations and Safety Considerations

Hallucinations and Factual Inaccuracies

Despite significant advancements in reliability and accuracy, GPT-4V is not entirely immune to "hallucinations." This phenomenon refers to the model generating information that is not present in its training data or producing outputs that contradict the user's prompt, sometimes fabricating "facts" or flawed logic. The model can exhibit severe hallucinations, particularly when dealing with fine-grained world knowledge. OpenAI explicitly acknowledges this limitation, often including warnings such as "ChatGPT can make mistakes. Verify important information" beneath its chat interface.

The persistence of hallucinations, even in advanced models like GPT-4V, highlights a fundamental and ongoing challenge in generative AI: the inherent trade-off between creative fluency and strict factual accuracy. While techniques like Reinforcement Learning from Human Feedback (RLHF) are employed to reduce such occurrences, they are not entirely eliminated. This means that for high-stakes applications, such as those in medical or legal domains, independent verification of GPT-4V's output is not merely recommended but is a mandatory step. This underscores that current MLLMs are powerful tools for information synthesis and generation, but they are not infallible oracles, and human oversight remains crucial for ensuring accuracy and reliability.

Knowledge Cutoff

A significant limitation of GPT-4V, like other pre-trained models, is its knowledge cutoff. The foundational GPT-4 model's knowledge is limited to events occurring before September 2021. More recent iterations, such as GPT-4o, have a slightly more updated knowledge cutoff of September or October 2023. This means that the model lacks information on events, developments, or data that have emerged since its last training update.

This knowledge cutoff implies that for current events, rapidly evolving information, or niche, real-time data, GPT-4V cannot provide accurate or up-to-date responses solely from its internal knowledge. To address this, the model requires external data injection, often facilitated through its ability to make web search API calls, or explicit user-supplied context to provide relevant and current information. This highlights a practical limitation for applications that demand real-time awareness or access to the latest information. Models are static snapshots of data up to their training cutoff, whereas the real world is dynamic. This fundamental mismatch means GPT-4V cannot be a real-time information source on its own; it needs to be augmented with external, up-to-date data sources, which adds complexity to its deployment for certain use cases.

Bias Concerns

OpenAI acknowledges that GPT-4V, similar to its predecessors, continues to reinforce social biases and worldviews. These biases can include harmful stereotypical and demeaning associations for certain marginalized groups, which are inherited from the vast and diverse datasets used during its training. The training data, often sourced from the internet, reflects existing societal biases, and consequently, the model learns and perpetuates these patterns. OpenAI advises users to be aware of this limitation and to implement their own measures to handle bias within their specific use cases, as the model cannot fully resolve these issues on its own.

The perpetuation of biases, even after extensive efforts such as Reinforcement Learning from Human Feedback (RLHF) aimed at alignment, indicates that bias mitigation is a deeply complex and multifaceted problem that cannot be fully resolved by post-training alignment alone. This suggests that biases are deeply embedded in the vast, real-world training datasets, necessitating ongoing research into more equitable data curation, advancements in model architecture, and the development of robust ethical deployment practices. The responsibility for addressing bias is thus shared by both model developers and the end-users who deploy these systems.

Restricted for Risky Tasks

By design and as a measure of responsible AI deployment, GPT-4V is programmed to refuse certain high-risk tasks. For instance, it is unable to answer questions that involve identifying specific individuals in an image, reflecting a commitment to privacy and ethical boundaries. Furthermore, OpenAI explicitly advises users to refrain from employing GPT-4V for other high-risk applications due to potential inaccuracies or harmful outputs. These include tasks requiring scientific proficiency, where the model might miss critical text or characters, overlook mathematical symbols, or fail to recognize spatial locations and color mappings in complex scientific visuals.

Similarly, for medical advice, while the model may sometimes provide correct responses based on medical imaging, its answers can be inconsistent, making it unreliable as a replacement for professional medical consultation. There are also significant disinformation risks, as the model can generate plausible, realistic, and targeted text content tailored for an image input, potentially leading to the spread of misinformation. Additionally, dealing with hateful content remains a challenge, as the model may not always refuse questions containing hate symbols or extremist content. OpenAI's explicit restrictions and warnings for high-risk tasks demonstrate a proactive commitment to responsible AI deployment, acknowledging the current limitations and potential for misuse. This is a crucial aspect of safety and ethical AI development, guiding users away from dangerous applications where model reliability and accuracy are not yet guaranteed. These warnings signal that while the model is powerful, it is not a general-purpose expert in all domains, especially those with high stakes like healthcare or national security, emphasizing that AI's current capabilities are still bounded and require human oversight in critical areas.

Cost Implications

GPT-4V is primarily accessed through a hosted API, meaning its usage incurs costs based on the volume of tokens processed. While OpenAI continually refines its pricing structure, with newer versions like GPT-4o offering more cost-effective rates compared to older GPT-4 versions, usage-based fees remain a factor. For example, GPT-4o is priced at $2.50 per million input tokens and $10 per million output tokens, while the smaller GPT-4o mini is significantly cheaper at $0.15 per million input tokens and $0.60 per million output tokens.

The API-based, pay-per-token model, while offering convenience and scalability for some users, can be cost-prohibitive for smaller companies, academic research with limited grants, or high-volume, continuous applications. This creates a financial barrier to entry and favors larger organizations or those with specific budget allocations, contrasting sharply with the cost-effectiveness and accessibility of open-source models like LLaVA, which can be run on owned hardware without per-token charges. Proprietary models like GPT-4V operate on a Software-as-a-Service (SaaS) model, where every interaction incurs a cost. This economic model means that for intensive or large-scale use cases, costs can quickly accumulate, making it less accessible for certain types of research or low-budget applications compared to open-source alternatives.

IV. LLaVA: An Open-Source Alternative for Visual Instruction Tuning

4.1 Modular Architecture and Components

Integration of Pre-trained Models

LLaVA (Large Language and Vision Assistant) distinguishes itself through a highly efficient and modular architectural design. Instead of building a massive end-to-end model from scratch, LLaVA seamlessly integrates two powerful, pre-trained models: a CLIP (Contrastive Language-Image Pre-Training) vision encoder and a Vicuna Large Language Model (LLM). The original LLaVA utilized

CLIP-ViT-L/14, which was later upgraded to CLIP-ViT-L-336px for LLaVA-1.5, allowing for higher input resolution and finer detail capture. The Vicuna LLM, an instruction-tuned version of Meta's LLaMA-2, serves as the language backbone, known for its conversational fluency and instruction-following capabilities.

This modular design is a cornerstone of LLaVA's efficiency and accessibility. By leveraging existing, robust pre-trained components, LLaVA circumvents the immense computational cost and data requirements typically associated with training a large, end-to-end multimodal model from scratch. Training large foundation models is prohibitively expensive, both in terms of compute and data. LLaVA's strategy of combining existing powerful unimodal models (CLIP for vision, Vicuna for language) means it can achieve strong multimodal capabilities by focusing resources on how these models communicate, rather than building everything from the ground up. This approach makes LLaVA a viable and attractive open-source solution for a wide range of users, from academic researchers to individual developers, by significantly reducing the barriers to entry for advanced AI development.

Modality Translator (MLP Projector)

A critical component in LLaVA's architecture is the lightweight, trainable element known as the MLP projector. This component serves as a crucial bridge, translating the visual embeddings generated by the vision encoder into the input space of the language model. In the initial version of LLaVA, this was implemented as a simple linear projection layer, which effectively mapped CLIP's 1024-dimensional output embeddings into the language model's input space (e.g., 5120-dimensions for Vicuna-13B).

A significant improvement introduced in LLaVA-1.5 was the upgrade of this component to a more expressive two-layer Multi-Layer Perceptron (MLP) with a GELU activation function. This architectural refinement allows for a non-linear transformation of visual features, greatly enhancing the model's ability to bridge modalities. The evolution from a simple linear projection to a more complex MLP signifies a recognition that a more expressive, non-linear transformation is necessary to effectively align complex visual features with the nuanced embedding space of the language model. A linear layer might be too simplistic to capture the rich and complex relationships between visual and linguistic semantics. A multi-layer perceptron, with its non-linear activations, can learn more intricate mappings, enabling a deeper "understanding" of how visual elements translate into linguistic concepts, thereby improving the overall multimodal capability and performance.

Computational Efficiency

One of LLaVA's defining strengths lies in its highly efficient and modular training strategy. A key aspect of this efficiency is that the CLIP vision encoder is kept completely frozen during both stages of the training process. This design choice is fundamental to its computational efficiency and scalability.

Freezing the vision encoder parameters preserves CLIP's strong generalization capabilities, which were learned from massive image-text datasets, and dramatically reduces the number of trainable parameters in the overall LLaVA model. This approach makes LLaVA significantly faster and cheaper to train compared to end-to-end models, and crucially, it renders it more suitable for inference on consumer-grade hardware. For instance, LLaVA models can run on machines requiring only 8GB of RAM and 4GB of free disk space, with demonstrations even showing successful operation on a Raspberry Pi. This remarkable accessibility is a major advantage for democratizing advanced AI, allowing individual developers, small research teams, and those with limited computational resources to experiment with and deploy powerful multimodal AI systems, fostering innovation outside of large data centers.

4.2 Two-Stage Training Regimen

Stage 1: Pre-training the Projector

LLaVA employs a two-stage training process designed to effectively align its visual and language components. The initial stage, known as pre-training the projector, focuses exclusively on establishing a connection between the frozen vision encoder (CLIP) and the frozen language model (Vicuna). The primary objective during this phase is to train

only the MLP projector. This projector learns to effectively "translate" the high-dimensional visual features extracted by the CLIP ViT into a format that the Vicuna LLM can readily understand and integrate into its linguistic processing pipeline.

This stage utilizes a carefully filtered subset of image-caption pairs, typically comprising around 558K samples from large datasets such as LAION, CC, and SBU. The selection emphasizes concrete visual concepts, focusing on objects and scenes containing identifiable nouns to ensure robust visual-linguistic alignment. The task during this stage is standard auto-regressive language modeling: given an input image, the model predicts its caption token by token. The projector's role is to map CLIP's visual features into the language model's embedding space in a way that facilitates this prediction. This pre-training phase is remarkably efficient, completing in approximately 6 hours on a single 8x A100 GPU node. The outcome of this stage is a checkpoint where the MLP projector functions as a competent modality translator, effectively aligning vision embeddings with the LLM's internal representation space.

Stage 2: Fine-tuning the Language Model and Projector

The second stage of LLaVA's training regimen involves fine-tuning both the pre-trained MLP projector and the language model (Vicuna) together. Crucially, the vision encoder (CLIP) remains completely frozen during this stage as well, preserving its pre-trained visual knowledge and contributing to computational efficiency. This stage is where the model truly learns to function as a helpful visual assistant, aligning its multimodal capabilities to perform complex instruction-following tasks.

This fine-tuning process leverages a comprehensive instruction tuning mixture, which for LLaVA-1.5, includes a diverse set of 665K samples. This dataset is strategically curated and includes:

Multi-turn conversations: These plausible dialogues between a user and an assistant discussing images teach the model to handle natural conversational flow and retain context over extended interactions.

Detailed descriptions: Rich and nuanced paragraphs that go beyond basic captioning, helping the model develop fine-grained scene understanding and descriptive articulation.

Visual Question Answering (VQA): Incorporating datasets like GQA, OKVQA, and A-OKVQA, this strengthens the model's performance on fact-based and commonsense visual question answering, particularly for short, precise responses.

OCR-based reasoning: Datasets such as OCR-VQA, TextVQA, and ScienceQA are included to improve the model's ability to read, understand, and reason over textual elements embedded within images, an essential real-world skill.

Region-level grounding data: Utilizing datasets like RefCOCO and Visual Genome, the model is trained to understand region-referenced language (e.g., "the red ball on the right"), improving its spatial grounding and referential accuracy.

Text-only instruction tuning: High-quality, text-only instructions from ShareGPT are included to enhance the reasoning, dialogue coherence, and overall intelligence of the Vicuna language backbone, leading to stronger multimodal capabilities.

Through this comprehensive instruction tuning, the model learns to understand and respond to image-based questions, generate detailed scene descriptions, read and reason over textual content within images, and maintain coherent and helpful conversations. This two-phase training approach is highly resource-efficient and avoids redundant computation: Stage 1 ensures the projector understands how to “speak the LLM's language,” while Stage 2 allows the full system to generalize and behave like a capable assistant, all without ever modifying the vision encoder.

4.3 Reliance on GPT-4 Generated Data

A distinctive and innovative aspect of LLaVA's training methodology is its strategic reliance on GPT-4 (or GPT-4o for later versions like LLaVA-Video) to generate a significant portion of its instruction-following data. This approach, termed Visual Instruction Tuning, was proposed by the LLaVA authors as a practical and scalable alternative to the prohibitively slow, costly, and difficult manual curation of large-scale, human-annotated image-based instruction-response pairs.

By prompting GPT-4 with image captions and relevant context, the advanced reasoning capabilities of this powerful proprietary LLM are harnessed to effectively turn it into a data generator. For example, the initial LLaVA model collected 158K unique language-image instruction-following samples based on the COCO dataset, generated by interacting with a language-only GPT-4. This dataset included 58K conversations, 23K detailed descriptions, and 77K complex reasoning samples. This strategy not only improved data efficiency but also enabled the creation of a diverse, high-quality dataset crucial for LLaVA's training and overall performance.

The idea of using GPT-4 to generate multimodal instruction-following data is considered inspiring and has yielded strong, encouraging results, indicating that instruction tuning an LLM to accept multimodal information is a promising direction. LLaVA takes image captioning or object detection results as input for GPT-4, which then generates visual instruction data, providing an effective way to quickly create large amounts of such data.

However, this reliance on synthetic data generated by a proprietary model like GPT-4 also introduces certain considerations and potential weaknesses:

Data Quality and Validation: A primary concern is the data quality. While LLaVA's authors iterate and validate prompts on a subset of samples (e.g., ~1000) using GPT-4 itself to validate the visual groundness of generated outputs, and find GPT-4 consistently provides higher quality data , there is a general lack of independent human verification of the entire automatically generated dataset. Some concurrent works, like MiniGPT-4, manually verify the correctness of each image description, highlighting the importance of high-quality instruction data. The dataset, being entirely synthetic, may also lack the diverse annotation styles that come from human raters across cultures or age groups.

Bias Replication: The synthetic nature of the dataset, generated using GPT-4 and filtered heuristics, raises concerns about the replication of biases from the underlying LLMs. These biases could include cultural stereotypes, gender norms, or Western-centric viewpoints, potentially reinforcing model hallucinations or surface-level reasoning if the synthetic data lacks real-world nuance. Research indicates that current Large Vision-Language Models (LVLMs) can suffer from "training bias," failing to fully leverage their training data and hallucinating more frequently on images seen during training, with this bias primarily located in the language modeling head.

Completeness and Inconsistency: Synthetic datasets may contain incompleteness or inaccuracies, stemming from imperfections in the generation process, which can impede a model's capacity to accurately predict or manage scenarios characterized by real-world data incompleteness. They may also lack the natural noise and intricacies inherent in real-world data, potentially hampering the model's efficacy in realistic environments. Furthermore, synthetic data can lack the inconsistency found in authentic datasets, which often embody variations from diverse sources, temporal epochs, and environmental conditions, potentially leading to challenges for models in adapting to multifaceted real-world vicissitudes.

Ethical and Legal Concerns: Aggregating videos from public sources for synthetic dataset creation (as seen in LLaVA-Video) raises questions about permissive licensing for redistribution and whether derived annotations fall under fair use. There are also broader impact concerns regarding potential misuse cases, such as automated surveillance or behavioral profiling, if safeguards are not adequately considered.

Despite these considerations, the use of GPT-4 as a data generator has allowed LLaVA to achieve impressive multimodal chat abilities, sometimes exhibiting behaviors akin to multimodal GPT-4 on unseen images/instructions, and yielding a 85.1% relative score compared with GPT-4 on a synthetic multimodal instruction-following dataset. When fine-tuned on Science QA, the synergy of LLaVA and GPT-4 achieved a new state-of-the-art accuracy of 92.53%. This approach highlights a powerful method for rapidly scaling multimodal AI development, albeit with the necessity for ongoing vigilance regarding data quality and bias.

4.4 Key Capabilities and Accessibility

LLaVA, as an open-source generative AI model, has demonstrated impressive capabilities in conversing with images, replicating many of the functionalities seen in proprietary models like GPT-4. Its core strength lies in its ability to process image inputs and engage in natural language dialogues about their content, allowing users to discuss visual information, describe ideas, and provide context in a visual manner.

Key capabilities of LLaVA include:

Image Description and Reasoning: LLaVA can generate detailed descriptions of images and make inferences and perform reasoning based on the elements within an image. This includes tasks like identifying a movie or a person from visual clues, and even generating website code from a drawing.

Multimodal Instruction Following: The model is highly proficient in following complex instructions that involve both visual and textual inputs. Its training on diverse instruction-following datasets, including multi-turn conversations, visual question answering (VQA), OCR-based reasoning, and region-level grounding, enables it to understand and respond to a wide range of multimodal queries.

Conversational Fluency: LLaVA is designed to handle natural conversational flow and context retention, allowing for plausible dialogues between a user and an AI assistant about an image.

OCR and Text-Rich Image Understanding: LLaVA-1.5 significantly improved its ability to read, understand, and reason over textual content embedded within images, an essential real-world skill, by incorporating datasets like OCR-VQA and TextVQA. LLaVA-NeXT further enhances OCR capabilities.

Zero-shot Generalization: LLaVA-1.5, despite its smaller model size, has demonstrated strong zero-shot multilingual capabilities without specific fine-tuning for multilingual multimodal instruction following, even outperforming some larger models in Chinese multimodal instruction following. It also exhibits zero-shot format instruction generalization, effectively handling "Unanswerable" responses when questions lack sufficient visual context.

A compelling feature of LLaVA is its accessibility and suitability for inference on consumer hardware. The model requires only 8GB of RAM and 4GB of free disk space, and has been successfully run on devices as compact as a Raspberry Pi. This low resource requirement, combined with its open-source availability (code and models are publicly available on HuggingFace and can be loaded using standard libraries like Transformers) , makes LLaVA a highly accessible and cost-effective alternative to proprietary models. This accessibility democratizes advanced AI research and development, enabling a broader community of developers and researchers to contribute to and benefit from multimodal AI, fostering innovation and application development in resource-constrained environments.

4.5 Training Data Limitations and Challenges

Despite its innovative training methodology and open-source nature, LLaVA's approach to data and training presents certain limitations and challenges.

Data Quality Concerns: As discussed, the reliance on automatically generated data from GPT-4, while scalable, raises questions about the thoroughness of human validation for the entire dataset. While LLaVA's authors validate prompts on subsets, the absence of comprehensive human checking for the entire dataset means potential inaccuracies or biases from the source model (GPT-4) could be propagated. This can lead to a potential gap in real-world QA alignment and model behavior under human-style supervision or dialogue.

Sufficiency of Pre-training Data: Questions have been raised regarding whether 595K image-text pairs are sufficient for robust vision-language alignment, especially when compared to models like Blip2, which use over 100 million image-text pairs for alignment. While LLaVA's CLIP encoder is already pre-trained for image-text contrastive loss, potentially aiding faster alignment, the scale of pre-training data remains a point of discussion for further improvement.

Computational Cost of Visual Tokens: LLaVA inputs a relatively large number of CLIP visual tokens (256) into the LLM, which is significantly more than some other models (e.g., MiniGPT4 uses ~30 tokens). This design choice can make the training process slower, despite LLaVA's overall efficiency.

Base Model Degradation in Continual Learning: While LLaVA-c (a continual learning variant) aims to acquire new knowledge while retaining existing capabilities, current continual learning methods often prioritize task-specific performance and can lead to "base model degradation". This phenomenon occurs when models overfit to task-specific instructions (e.g., "answer in a single word") when learning new tasks, leading to a loss of their ability to handle diverse instructions and generate reliable responses for general tasks. This exposes a shortcoming in evaluating MLLM-related continual learning methods, which may neglect general performance while focusing on task-specific improvements.

Limitations in Multitask Learning: The multitask learning paradigm, which LLaVA utilizes to some extent, faces inherent challenges such as task balancing and expansion costs. Balancing task-specific data becomes increasingly challenging as task coverage expands due to potential conflicts between datasets. Additionally, learning new tasks independently can lead to catastrophic forgetting of existing knowledge, necessitating costly retraining with both old and new data to add new capabilities.

Inability to Process Multiple Images: LLaVA v1 and v1.5 were instruction-tuned using one image and one instruction. Consequently, while technically possible, passing multiple images for a single query does not yield good results, and the model does not properly support multiple images as input. This limits its applicability in scenarios requiring complex reasoning across multiple visual inputs.

Batch Inference Limitations: The current LLaVA implementation does not properly support batch inference, which can hinder its efficiency in high-throughput applications. A workaround involves submitting concurrent prediction requests, relying on vLLM to dispatch requests to different workers.

Over-smoothing and Incomplete Data in Synthetic Generation: Synthetic datasets, including those used by LLaVA, may exhibit an undue sterility, lacking the multifarious noise and intricacies inherent in real-world data. Some synthetic data generation models may oversimplify data, resulting in attenuated representations devoid of nuanced details and diversity, which can impede the model's ability to assimilate complex variations in genuine data. The presence of lacunae or partial information within synthetic datasets can also stem from imperfections or errors in the generation process, compromising the model's fidelity to real-world phenomena.

These limitations highlight ongoing research areas for LLaVA, particularly in refining data generation, improving continual learning strategies, and enhancing multi-image processing capabilities to further close the gap with state-of-the-art proprietary models.

V. Head-to-Head Performance Comparison

A direct comparison between GPT-4V and LLaVA reveals distinct strengths and weaknesses, largely influenced by their underlying architectures, training scales, and development philosophies. While GPT-4V, as a proprietary model with immense resources, generally demonstrates superior capabilities across a broader range of complex tasks, LLaVA, as an open-source alternative, offers impressive performance given its efficiency and accessibility.

5.1 Quantitative Benchmarks

Quantitative evaluations provide a structured means to compare the performance of GPT-4V and LLaVA across various multimodal tasks.

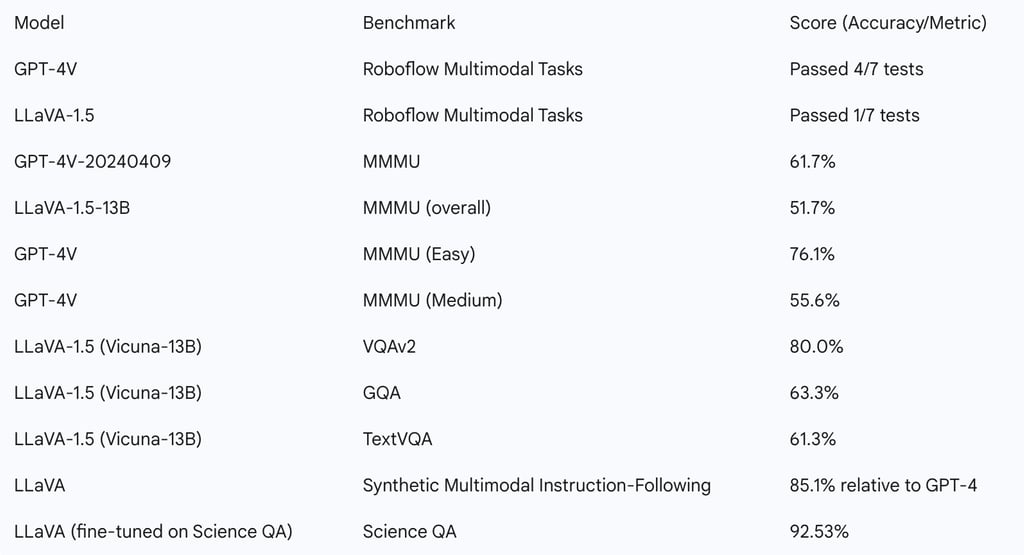

Roboflow Tests

In tests conducted by Roboflow on November 23rd, 2023, GPT-4 with Vision (GPT-4V) demonstrated a clear performance advantage over LLaVA-1.5 on multimodal tasks. Across seven state-of-the-art Large Multimodal Models (LMMs), GPT-4V passed four out of seven tests, whereas LLaVA-1.5 passed only one. This indicates that, based on these specific tests, GPT-4V generally performs better than LLaVA at multimodal tasks.

MMMU Benchmark

The MMMU (Massive Multi-discipline Multimodal Understanding and Reasoning) benchmark is designed to evaluate MLLMs on advanced perception and reasoning tasks requiring domain-specific knowledge, akin to challenges faced by human experts. The evaluation of 14 open-source LMMs and the proprietary GPT-4V highlights the substantial challenges posed by MMMU. Even the advanced GPT-4V only achieves a 56% overall accuracy, indicating significant room for improvement across the board.

When broken down by difficulty levels, GPT-4V consistently outperforms other models, though the gap narrows with increasing complexity.

Easy Category: GPT-4V demonstrates significantly higher proficiency, achieving a success rate of 76.1%.

Medium Category: GPT-4V maintains a lead with an accuracy of 55.6%.

Hard Category: The performance gap between GPT-4V and other models, including LLaVA, almost disappears. This might reflect a current limitation in handling expert-level challenging queries, indicating that as task complexity increases, the advantage of more advanced models like GPT-4V diminishes.

Specific scores for LLaVA-1.5 on the MMMU benchmark are available from other sources, showing its performance relative to GPT-4V and other models. For instance, LLaVA-1.5-13B achieved an overall score of 51.7% on the MMMU validation set. The OpenCompass leaderboard, which includes MMMU_VAL, shows various LLaVA models with scores typically in the 30s to 40s for MMMU_VAL, while GPT-4V-20240409 scores 61.7%. This further reinforces GPT-4V's lead in this challenging benchmark.

VQA Benchmarks

In Visual Question Answering (VQA) tasks, which assess an AI system's ability to interpret and reason about visual content in conjunction with textual information, GPT-4V generally achieves state-of-the-art (SOTA) performance. It demonstrates enhanced explanation generation, particularly when using composite images as few-shots.

LLaVA models, particularly LLaVA-1.5, have also shown strong performance on various VQA benchmarks, often achieving state-of-the-art results across 11 benchmarks. LLaVA-1.5, for example, achieved scores of 78.5% on VQAv2, 62.0% on GQA, and 58.2% on TextVQA with its Vicuna-7B variant, and slightly higher with the Vicuna-13B variant (80.0% on VQAv2, 63.3% on GQA, 61.3% on TextVQA). These scores are competitive, especially considering LLaVA's efficiency.

The following table summarizes selected benchmark performances where data is available for both models or their close variants:

This table illustrates GPT-4V's generally stronger performance on broad multimodal tasks and complex reasoning (e.g., MMMU, Roboflow tests). However, LLaVA demonstrates competitive or even superior performance in specific domains or when fine-tuned for particular tasks like Science QA, indicating its specialized strengths and the effectiveness of its instruction tuning approach.

5.2 Qualitative Examples and Specific Task Comparisons

Beyond quantitative benchmarks, qualitative assessments and specific task comparisons reveal the nuances of how GPT-4V and LLaVA stack up in real-world scenarios.

General Image Understanding and Detail Recognition

In a crude comparison involving a screenshot from a tech presentation, GPT-4V demonstrated a significantly higher level of understanding and detail recognition compared to LLaVA 1.6 34B.

GPT-4V: Provided a highly detailed explanation, recognizing specific AWS services (e.g., API Gateway, EventBridge, Step Functions, Lambda) on a blackboard. It even correctly identified a seemingly random number ("42 million, 7000") and speculated on its relation to delivery metrics. GPT-4V also noted "Taco Bell" and a car icon, connecting them to a logistics context, and even identified a misspelling ("LAHDA" instead of "Lambda"). Its contextual understanding allowed it to infer the setting as a professional presentation and the person as a presenter due to AWS-branded apparel. The author concluded that GPT-4V was "leagues ahead when it comes to understanding a picture".

LLaVA 1.6 34B: Offered a more high-level and general description, noting "two individuals standing in front of a large screen with various drawings and text." While it identified some icons (e.g., delivery, API, event, location), its recognition was not as specific as GPT-4V's. It mentioned numbers but did not provide specifics or potential meanings. LLaVA did make some socio-professional inferences about engagement and informal work environment, but lacked the granular detail of GPT-4V.

This comparison, while acknowledged as "crude" and "unfair" due to model size differences, highlights GPT-4V's superior ability to extract fine-grained details and infer deeper context from visual information. This suggests that GPT-4V possesses a more robust visual perception and a more extensive knowledge base to draw upon for contextual interpretation.

OCR (Optical Character Recognition)

In OCR tasks, particularly involving handwritten text, GPT-4V generally exhibits higher proficiency.

GPT-4V: Demonstrates strong capabilities in handling handwritten text, with only minor errors detected in its interpretation.

LLaVA: Encounters challenges with deciphering handwritten texts. While it acknowledges its limitations and can provide recommendations for improved performance, it struggles when text is rotated beyond 90 degrees. Both models, however, struggle to effectively decipher overlapped text, for instance, failing to read the second "A" in a provided logo example.

This indicates that while both models face challenges with complex OCR scenarios like overlapped or highly rotated text, GPT-4V generally offers more accurate and reliable performance for standard handwritten text recognition.

Sudoku and Crossword Puzzles

Tasks requiring structured logical reasoning from visual input, such as puzzles, reveal different strengths and weaknesses.

GPT-4V: Struggles with solving Sudoku puzzles. While it can understand the task's objective, it often misinterprets the grid, leading to consistently incorrect answers. In contrast, GPT-4 demonstrates a better grasp of crossword puzzles, successfully solving them, albeit with occasional errors. This suggests a stronger ability to handle textual constraints and patterns in a grid format.

LLaVA: Tends to struggle significantly with Sudoku puzzles, finding it difficult to comprehend the image and the task's nuances. For crossword puzzles, LLaVA does not provide direct answers but instead offers explanations on how to solve the puzzle, reflecting its conversational instruction-following abilities rather than direct problem-solving.

This comparison indicates that while neither model is perfect at visual puzzle-solving, GPT-4V shows a greater capacity for direct problem resolution in text-based puzzles like crosswords, whereas LLaVA leans more towards instructional guidance.

Fine-Grained Object Detection

In tasks requiring the detection of small or subtle objects, GPT-4V generally outperforms LLaVA.

GPT-4V: Excels in object detection, effectively recognizing subtle objects such as a closed umbrella, which can be challenging even for human perception. It also consistently performs well in identifying animals in complex scenes, such as a tiger and its cubs in the wild.

LLaVA: Its performance diverges when detecting small or subtle objects. For example, when tasked with identifying humans holding umbrellas, LLaVA tends to overlook closed umbrellas. It may also misidentify animals in images, such as a tiger and its cubs.

This demonstrates GPT-4V's superior visual acuity and its ability to discern fine-grained details within complex scenes, which is crucial for applications requiring precise object recognition.

Data Analysis and Mathematical Reasoning

GPT-4V: Adeptly interprets mathematical expressions, conducts required calculations, and provides detailed step-by-step processes for straightforward mathematical equations presented visually. In data analysis, when presented with a graph, GPT-4 goes beyond a mere description, offering elaborate insights and observations derived from the data. While it may make minor errors (e.g., misinterpreting a starting year on a graph), it generally provides a good understanding of overall context and trends.

LLaVA: Struggles to comprehend straightforward mathematical equations presented visually. In data analysis, LLaVA primarily offers a description of the visual representation of a graph rather than providing deeper insights or observations.

This highlights GPT-4V's stronger capabilities in mathematical OCR and reasoning, as well as its ability to extract and interpret insights from data visualizations.

Overall, GPT-4V generally demonstrates superior performance in tasks requiring deep visual comprehension, fine-grained detail recognition, complex reasoning, and robust OCR. Its extensive training and proprietary optimization likely contribute to its higher accuracy and broader generalizability. LLaVA, while highly capable and efficient, particularly for an open-source model, shows limitations in these more challenging visual and reasoning tasks, often providing more general descriptions or struggling with nuanced interpretations. However, LLaVA’s ability to perform well in conversational contexts and its efficiency make it a valuable tool for many applications where the absolute cutting edge of performance is not the sole criterion.

VI. Conclusions and Recommendations

The head-to-head comparison between OpenAI's GPT-4V and the open-source LLaVA reveals a complex and evolving landscape in multimodal AI. GPT-4V consistently demonstrates superior performance across a broad spectrum of visual understanding and reasoning tasks, including fine-grained object detection, complex data analysis, and robust multilingual multimodal comprehension. Its strength stems from a foundation in a sophisticated transformer architecture, extensive pre-training on vast and likely proprietary datasets, and rigorous fine-tuning through Reinforcement Learning from Human Feedback (RLHF). This enables GPT-4V to exhibit human-level performance on challenging academic and professional benchmarks, understand nuanced instructions, and integrate seamlessly into high-impact real-world applications like assistive technologies. The model's ability to handle interleaved image-text inputs and interact with external tools further extends its utility and adaptability to dynamic, real-time scenarios. However, GPT-4V's proprietary nature means its precise architectural details and training data remain undisclosed, hindering reproducibility and external auditing. Its usage also incurs costs, and it is not entirely free from limitations such as hallucinations, a knowledge cutoff, and inherited biases, necessitating careful human oversight in critical applications.

LLaVA, as a prominent open-source alternative, offers a compelling value proposition by democratizing access to powerful multimodal AI. Its modular architecture, which efficiently integrates pre-trained and frozen CLIP vision encoders with Vicuna LLMs via a lightweight MLP projector, allows for remarkably efficient training and deployment, even on consumer-grade hardware. This accessibility is a significant advantage for researchers and developers with limited computational resources, fostering broader innovation within the community. LLaVA's innovative reliance on GPT-4 to generate its instruction-following dataset has been pivotal to its rapid development and strong performance on various benchmarks, demonstrating a synergistic approach where proprietary advancements can accelerate open-source progress. While LLaVA generally trails GPT-4V in raw performance on the most challenging tasks, it achieves impressive results given its scale and resource efficiency, particularly in conversational visual understanding and specific VQA domains. Its limitations include concerns about the quality and potential biases of its synthetic training data, challenges with multi-image processing, and occasional struggles with highly complex visual reasoning.

Key Conclusions:

Performance Hierarchy: GPT-4V generally holds a performance edge over LLaVA in tasks demanding deep visual comprehension, fine-grained detail extraction, and complex, multi-modal reasoning. This is evident in its superior performance on broad benchmarks like MMMU and qualitative assessments of detail recognition.

Architectural Philosophies: GPT-4V's strength lies in its massive, proprietary, end-to-end optimization, leveraging undisclosed scale and extensive human alignment. LLaVA's strength is its efficient, modular design, which cleverly combines existing powerful components, making advanced multimodal AI accessible and cost-effective.

Data Strategy Impact: LLaVA's innovative use of GPT-4 for synthetic data generation highlights a scalable approach to data curation but also introduces considerations regarding data quality, potential bias propagation, and the need for robust validation.

Accessibility vs. Frontier Performance: GPT-4V pushes the frontier of AI capabilities but is accessible primarily through a paid API. LLaVA, while not always matching GPT-4V's peak performance, offers a powerful, open-source, and resource-efficient alternative that can be deployed locally, significantly lowering the barrier to entry for multimodal AI development and research.

Complementary Roles: The two models, despite being competitors, play complementary roles in the AI ecosystem. GPT-4V drives innovation at the cutting edge, setting high standards, while LLaVA fosters widespread adoption, experimentation, and further development within the open-source community.

Recommendations:

For High-Stakes and Cutting-Edge Applications: Organizations requiring the absolute highest levels of accuracy, nuanced understanding, and broad generalizability in multimodal tasks, particularly those involving complex reasoning or requiring integration with external tools for real-time information, should consider leveraging GPT-4V via its API. However, a robust human-in-the-loop verification process is essential to mitigate risks associated with hallucinations, biases, and the knowledge cutoff.

For Resource-Constrained Environments and Open-Source Development: For researchers, startups, or developers operating with limited computational resources, or those committed to an open-source development philosophy, LLaVA (and its newer iterations like LLaVA-1.5 or LLaVA-NeXT) presents an excellent and highly capable alternative. Its efficiency and local deployability make it ideal for experimentation, custom fine-tuning, and applications where cost-effectiveness and transparency are paramount.

Future Research Directions for LLaVA: To further enhance LLaVA's capabilities and address its current limitations, future research should focus on:

Improving Synthetic Data Quality: Developing more sophisticated validation mechanisms for GPT-4 generated data, potentially incorporating more extensive human feedback or advanced AI-driven quality checks to reduce biases and inaccuracies.

Enhancing Multi-Image Processing: Addressing the current limitation of processing multiple images effectively within a single query to enable more complex visual reasoning scenarios.

Mitigating Base Model Degradation: Exploring advanced continual learning strategies that prevent overfitting to task-specific instructions, thereby preserving the general capabilities of the base model.

Optimizing Visual Token Processing: Researching methods to reduce the number of visual tokens required for input without sacrificing detail, potentially improving training and inference speed.

Strategic Collaboration and Hybrid Approaches: The AI community could benefit from increased collaboration between proprietary and open-source initiatives. Hybrid approaches, where open-source models like LLaVA are fine-tuned or augmented with proprietary tools (e.g., using GPT-4 for data generation or evaluation), can accelerate progress for both paradigms. This synergy can lead to more robust, accessible, and ethically aligned multimodal AI systems.

FAQ

What are Multimodal Large Language Models (MLLMs)?

MLLMs are advanced artificial intelligence systems designed to process, interpret, and generate information across multiple forms of data, known as modalities. These typically include text, images, audio, and video. Unlike earlier AI models that were limited to a single data type, MLLMs can integrate diverse inputs to develop a more comprehensive understanding of context and content, much like humans do. This capability allows them to perform complex tasks such as describing an image, answering nuanced questions about its content, summarising text embedded within it, or even inferring humour from a visual scene. The development of MLLMs marks a significant leap in AI, bridging the gap between previously specialised models for different modalities and moving closer to artificial general intelligence (AGI) by enabling more intuitive and powerful interactions.

How do MLLMs integrate different modalities like vision and language?

The integration of vision and language in MLLMs is a sophisticated process that builds upon advancements in transformer architectures. Initially, transformer networks revolutionised natural language processing (NLP) by enabling models to understand complex relationships between words. This concept was then extended to vision through models like Vision Transformer (ViT), which processes image inputs by treating image patches as sequences of tokens, similar to how text is processed. A crucial development was Contrastive Language-Image Pre-Training (CLIP), which learned to align images and text in a shared embedding space.

In models like LLaVA, this integration is achieved through a modular design: a pre-trained vision encoder (CLIP) extracts visual features from images, and a language model (like Vicuna) handles text. A lightweight Multi-Layer Perceptron (MLP) projector acts as a bridge, translating the visual embeddings from the vision encoder into a format that the language model can understand. This modularity allows for efficient development by leveraging existing high-performing unimodal components, focusing computational resources on effectively connecting these different modalities rather than training an entire model from scratch.

What are the key differences between GPT-4V and LLaVA?

GPT-4V and LLaVA represent two distinct approaches within the MLLM landscape:

GPT-4V (Proprietary):

Architecture & Performance: GPT-4V, from OpenAI, is a leading proprietary model known for its advanced reasoning, extensive knowledge, and superior performance across a wide array of complex tasks, including deep visual comprehension, fine-grained object detection, and complex reasoning. It consistently outperforms LLaVA in head-to-head evaluations requiring nuanced understanding.

Training & Resources: It benefits from immense computational resources and extensive Reinforcement Learning from Human Feedback (RLHF), contributing to higher accuracy, broader generalisability, and advanced conversational fluidity. Its precise details (model size, training data) are undisclosed.

Accessibility: Access is API-driven and incurs usage costs based on token volume, which can be prohibitive for some users.

Limitations: Has a knowledge cutoff, can exhibit hallucinations and biases inherited from training data, and is restricted for high-risk tasks.

LLaVA (Open-Source):

Architecture & Performance: LLaVA (Large Language and Vision Assistant) is a prominent open-source alternative. It features an innovative modular architecture, integrating pre-trained vision encoders (CLIP) and language models (Vicuna) via a lightweight MLP projector. While generally trailing GPT-4V in raw performance on many benchmarks, it offers impressive capabilities given its significantly smaller scale and efficiency.

Training & Resources: Its strategic use of GPT-4 to generate its instruction-following dataset has been pivotal, showcasing a novel approach to data curation. Its modular design allows for remarkably efficient training and deployment, even on consumer-grade hardware.

Accessibility: As an open-source model, it is cost-effective, accessible, and adaptable for researchers and developers, fostering broader innovation within the AI community. Code and models are publicly available.

Limitations: Reliance on GPT-4 generated data raises concerns about data quality, bias replication, and completeness. It also has limitations in processing multiple images simultaneously and may struggle with complex OCR or fine-grained object detection compared to GPT-4V.

In essence, GPT-4V pushes the boundaries of MLLM performance, setting high benchmarks, while LLaVA democratises access to powerful multimodal AI through its efficient design and open-source availability.

What are some real-world applications of GPT-4V?

GPT-4V's advanced capabilities have led to its integration into a variety of practical applications with significant societal impact:

Assistive Technology: It is used by "Be My Eyes" to power a 'Virtual Volunteer' that assists visually impaired and low-vision individuals. This includes tasks like reading website content, navigating real-world scenarios, and making informed decisions.

Education and Tutoring: Duolingo uses GPT-4 to explain mistakes and facilitate practice conversations for language learners. Khan Academy employs it as a tutoring chatbot named "Khanmigo."

Medical Imaging Analysis: It is being explored for analysing medical images and scans to provide detailed health or disease information, though human oversight is critical due to potential inaccuracies.

Content Moderation: GPT-4V can identify and classify NSFW (Not Safe for Work) objects in images, aiding in content moderation efforts.

Code Generation from Sketches: A transformative application is its ability to generate functional website code directly from hand-drawn sketches or transform a sketch into an architecture diagram, accelerating development workflows.