How AI Vendors Comply with GDPR/CCPA Regulations

The proliferation of Artificial Intelligence (AI) technologies presents both transformative opportunities and complex data privacy challenges. For AI vendors, navigating the stringent requirements of regulations such as the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the United States is not merely a legal obligation but a strategic imperative. Adherence to these frameworks fosters user trust, mitigates significant financial penalties, and enhances long-term market resilience. This report delineates the foundational principles of GDPR and CCPA as they apply to AI, explores the unique compliance hurdles inherent in AI development and deployment, and outlines the essential technical, organizational, and governance measures AI vendors must implement to achieve and sustain regulatory compliance. Key areas of focus include embedding privacy by design, addressing the "black box" nature of many AI models, managing vast data demands, and establishing robust data governance frameworks coupled with continuous vendor management.

1. Introduction: The AI-Privacy Nexus

AI technologies have been adopted rapidly across industries, creating new opportunities for innovation and efficiency. That power carries data privacy challenges, because AI systems often collect, process, and analyze vast amounts of personal data. Data privacy regulations, notably the GDPR in Europe and the CCPA in California, establish stringent frameworks designed to protect individual data rights. For AI vendors, understanding and proactively complying with these regulations is paramount, both to avoid severe penalties and to build and maintain user trust. This report examines the mechanisms, challenges, and best practices that help AI vendors navigate this regulatory landscape. Across the industry, privacy in AI systems is increasingly treated not as a burden but as a differentiator that builds long-term user trust and regulatory resilience.

2. Foundational Principles: GDPR and CCPA in the AI Context

Effective compliance for AI vendors begins with a deep understanding of the core principles and data subject rights enshrined in GDPR and CCPA. These regulations, while sharing common goals, present distinct requirements that significantly impact AI system design and operation.

2.1. Core Principles of GDPR and their AI Implications

The GDPR's Article 5 lays out fundamental principles that govern the processing of personal data, directly influencing how AI vendors must operate:

Lawfulness, Fairness, and Transparency: Personal data must be processed lawfully, fairly, and in a transparent manner. For AI, this necessitates clearly informing individuals about data collection, the specific purposes for processing, and the involvement of AI algorithms in decision-making processes. A significant challenge arises with "black box" AI models, where the underlying decision-making logic is opaque, making it difficult for organizations to provide the required level of transparency to data subjects and regulators. This opacity directly impacts the ability to demonstrate fairness and accountability in AI-driven outcomes.

Purpose Limitation: Data should be collected for specified, explicit, and legitimate purposes and not further processed in a manner incompatible with those purposes. AI vendors must define clear, understandable purposes for using personal data and limit processing accordingly. This principle is particularly critical in preventing the repurposing of data beyond agreed terms, especially for training AI models or selling derived insights to third parties without explicit consent, a practice that constitutes a significant privacy risk.

Data Minimization: Processing must be adequate, relevant, and limited to what is necessary for the intended purposes. This principle often stands in direct conflict with AI's inherent need for vast datasets for effective training and performance optimization. To reconcile this tension, AI vendors must strive to collect only essential data, implement privacy-by-design techniques from the outset, and explore alternatives such as synthetic data generation where real personal data is not strictly necessary. The challenge here is to balance the utility of AI with the imperative of privacy.
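One way to make data minimization concrete is to filter every record against a per-purpose allow-list before it reaches a training or inference pipeline. The sketch below is illustrative only: the purpose names, field names, and the `minimize` helper are hypothetical and are not drawn from either regulation.

```python
# Hypothetical allow-list: the fields each documented purpose actually needs.
ALLOWED_FIELDS = {
    "churn_model_training": {"account_age_days", "plan_tier", "monthly_usage"},
    "support_ticket_routing": {"ticket_text", "plan_tier"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Return only the fields permitted for the given processing purpose."""
    if purpose not in ALLOWED_FIELDS:
        # An undocumented purpose is rejected rather than silently allowed.
        raise ValueError(f"No documented purpose: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


# Usage: the email address never reaches the training pipeline.
raw_record = {
    "email": "a@example.com",
    "plan_tier": "pro",
    "account_age_days": 412,
    "monthly_usage": 37.5,
}
training_record = minimize(raw_record, "churn_model_training")
```

Rejecting unknown purposes by default also supports purpose limitation, since a new use of the data has to be documented before any fields are released to it.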

Accuracy: Personal data must be accurate and, where necessary, kept up to date. AI systems, particularly those involved in profiling or automated decision-making, can perpetuate or even amplify inaccuracies present in their training data, leading to biased or unfair outcomes for individuals. This necessitates robust data quality management and mechanisms for data rectification, allowing individuals to correct their information.

Storage Limitation: Data should be kept only for as long as necessary for the purposes for which it is processed. AI vendors must establish clear data retention policies that align with this principle and regularly review how long each AI tool and model stores personal data. This prevents unnecessary accumulation of personal data and reduces risk exposure over time.
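A retention policy of this kind can be expressed as a small table of maximum ages per data category plus a periodic purge. The following is a minimal sketch under stated assumptions: the category names and retention periods are hypothetical placeholders, and real values would come from the vendor's documented policy.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per data category (assumed values).
RETENTION = {
    "inference_logs": timedelta(days=30),
    "training_snapshots": timedelta(days=365),
}


def is_expired(category: str, collected_at: datetime, now: datetime) -> bool:
    """True when a record is older than the retention period for its category."""
    return now - collected_at > RETENTION[category]


def purge_expired(records: list, now: datetime) -> list:
    """Return only the records that are still within their retention period."""
    return [
        r for r in records
        if not is_expired(r["category"], r["collected_at"], now)
    ]


# Usage with a fixed clock so the result is reproducible.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "category": "inference_logs",
     "collected_at": datetime(2024, 5, 20, tzinfo=timezone.utc)},
    {"id": 2, "category": "inference_logs",
     "collected_at": datetime(2024, 4, 1, tzinfo=timezone.utc)},
]
kept = purge_expired(records, now)  # record 2 is older than 30 days
```

Passing `now` explicitly, rather than reading the clock inside the function, keeps the purge logic easy to test and audit.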

Integrity and Confidentiality (Security): Personal data must be processed in a manner that ensures appropriate security, including protection against unauthorized or unlawful access, processing, loss, destruction, or damage. This mandates the implementation of robust technical and organizational measures (TOMs) such as encryption (both in transit and at rest), strict access controls, and regular security audits and penetration testing. The accountability for securing data extends beyond the vendor's direct infrastructure to any third-party AI services utilized.

Accountability: Under Article 5(2), the data controller is responsible for, and must be able to demonstrate, compliance with all of the principles above. An AI vendor acting as a processor carries its own contractual and record-keeping obligations (for example under Articles 28 and 30) and is expected to support the controller in demonstrating compliance. This requires comprehensive internal documentation, clear policies, and robust governance frameworks that can demonstrate adherence to GDPR requirements to supervisory authorities.

2.2. Core Principles of CCPA and their AI Implications

The CCPA (and its amendment, CPRA) grants California consumers significant rights over their personal information, impacting AI vendors through:

Vendor Contracting Requirements: A cornerstone of CCPA compliance is the requirement for companies to have written contracts with suppliers, service providers, and other third parties, including AI vendors. These contracts must limit the vendor to handling and disclosing personal information solely as directed by the contracting company and in full compliance with CCPA regulations. This provision underscores the critical importance of robust Data Processing Addenda (DPAs) that clearly delineate responsibilities and obligations between the contracting business (the counterpart of a GDPR controller) and the AI vendor acting as a service provider (the counterpart of a GDPR processor).

Consent and Opt-Outs: A notable distinction from GDPR is CCPA's general allowance for opt-out, particularly concerning the sale or sharing of personal data, rather than requiring explicit opt-in for all data processing. AI vendors must provide clear, conspicuous, and simple opt-out mechanisms on their websites or applications, respecting user choices, especially when data is used for targeted marketing or financial advantage. This difference in consent models necessitates a flexible approach to consent management for vendors operating globally.
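The difference between the two consent models can be captured in a single default-deny check. The sketch below is deliberately simplified and rests on assumptions: the `ConsentRecord` type and the regime labels are hypothetical, the GDPR branch assumes consent is the legal basis being relied on (other lawful bases exist), and special cases such as CCPA's opt-in requirement for minors are not modelled.

```python
from dataclasses import dataclass


@dataclass
class ConsentRecord:
    regime: str                      # "GDPR" or "CCPA" (hypothetical labels)
    opted_in: bool = False           # explicit opt-in captured from the user
    opted_out_of_sale: bool = False  # "Do Not Sell or Share" signal


def may_sell_or_share(consent: ConsentRecord) -> bool:
    """Decide whether data may be sold or shared under the applicable model."""
    if consent.regime == "GDPR":
        # Opt-in model (assuming consent is the legal basis): silence means no.
        return consent.opted_in
    if consent.regime == "CCPA":
        # Opt-out model: permitted until the consumer opts out.
        return not consent.opted_out_of_sale
    # Unknown regime: default to the most protective answer.
    return False


# Usage: the same "no action taken" state gives different answers.
eu_user = ConsentRecord(regime="GDPR")
ca_user = ConsentRecord(regime="CCPA")
```

Keeping both flags on one record lets a vendor operating globally route every request through the same function while still honoring each regime's default.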

Rights to Privacy: The CCPA, as amended by the CPRA, empowers consumers with rights to know what personal information is collected about them, to access that data, to delete it, and to correct inaccurate information, mirroring many of the rights under GDPR. To respond effectively to these requests (referred to in this report as Data Subject Requests, or DSRs), AI vendors must know every system that holds data linked to a given consumer and ensure cross-functional cooperation throughout the organization so that each request is fulfilled accurately and completely.
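Knowing which systems hold a person's data is easier when every system is listed in one registry that a request handler can query. The sketch below illustrates that idea only; the system names, the in-memory stand-ins, and the `locate_subject_data` helper are all hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical in-memory stand-ins for real data stores.
CRM: Dict[str, List[dict]] = {"u-42": [{"email": "u42@example.com"}]}
INFERENCE_LOGS: Dict[str, List[dict]] = {
    "u-42": [{"prompt_id": "p-1"}],
    "u-7": [{"prompt_id": "p-2"}],
}
FEATURE_STORE: Dict[str, List[dict]] = {}

# Registry of every system that may hold personal data, with a lookup for each.
SYSTEM_REGISTRY: Dict[str, Callable[[str], List[dict]]] = {
    "crm": lambda subject_id: CRM.get(subject_id, []),
    "inference_logs": lambda subject_id: INFERENCE_LOGS.get(subject_id, []),
    "feature_store": lambda subject_id: FEATURE_STORE.get(subject_id, []),
}


def locate_subject_data(subject_id: str) -> Dict[str, List[dict]]:
    """Query every registered system and return those holding matching data."""
    found: Dict[str, List[dict]] = {}
    for system_name, lookup in SYSTEM_REGISTRY.items():
        matches = lookup(subject_id)
        if matches:
            found[system_name] = matches
    return found


# Usage: one call shows which systems an access or deletion request must cover.
hits = locate_subject_data("u-42")
```

A system that is missing from the registry is invisible to every request, so the registry itself becomes an artifact worth reviewing whenever a new AI tool is introduced.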

2.3. Key Data Subject Rights and AI

Both GDPR and CCPA empower individuals with a comprehensive set of rights over their data, which AI vendors must facilitate through their systems and processes:

Right to be Informed: Individuals have the fundamental right to know what personal data is collected about them, the specific purposes for collection, the identities of those collecting the data, the retention period, how to file a complaint, and whether data sharing is involved. This includes transparent information about any automated decision-making and profiling that impacts them. All such information must be conveyed in straightforward and easily understandable language.

Right of Access: Individuals can submit requests to ascertain whether their personal information is being processed and to obtain a copy of that data, along with supplementary information such as the purposes of processing, categories of data, recipients, and information about automated decision-making. AI vendors must be equipped to provide this data, ideally in a commonly used, machine-readable format to facilitate data portability.

Right to Rectification: Individuals have the right to request that organizations correct inaccurate personal data, or complete incomplete personal data, held about them. Under GDPR, organizations, including AI vendors, generally have one month from receipt of the request to respond, extendable by up to two further months for complex or numerous requests. For AI systems, this means implementing mechanisms to update or correct data used in models, especially if the data forms the basis for automated decisions.

Right to be Forgotten (Erasure): This right allows individuals to request the deletion of their personal data under certain circumstances. It presents significant technical challenges for AI models, particularly large language models (LLMs) and generative AI, where data is deeply embedded through training. The difficulty lies in selectively removing specific data without retraining the model from scratch, a process often deemed impractical and costly given the scale and complexity of these models. "Machine unlearning" techniques are emerging as a potential solution, but fully erasing learned patterns remains a complex technical hurdle.

Right to Restrict Processing: Individuals can request that an organization limit how it uses their personal data, even if full deletion is not required. This right applies in specific situations, such as during the verification of data accuracy or when processing is unlawful but the individual prefers restriction over erasure. Once processing is restricted, the data may still be stored, but any other processing generally requires the individual's consent or must serve the establishment, exercise, or defense of legal claims, the protection of another person's rights, or an important public interest.
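In a data pipeline, restriction can be modelled as a flag that blocks every operation except a short list of permitted ones. The following is a sketch under stated assumptions: the `Basis` enum and `may_process` helper are hypothetical, and the permitted set mirrors the exceptions named above plus continued storage, which GDPR Article 18(2) leaves untouched.

```python
from enum import Enum


class Basis(Enum):
    STORAGE = "storage"                # keeping the data without using it
    CONSENT = "consent"                # the individual agreed to this use
    LEGAL_CLAIMS = "legal_claims"      # establishing or defending legal claims
    PROTECT_OTHERS = "protect_others"  # protecting another person's rights
    ROUTINE = "routine"                # ordinary processing, e.g. model training


# Operations that remain possible while a restriction is in force (assumed set).
PERMITTED_WHEN_RESTRICTED = {
    Basis.STORAGE,
    Basis.CONSENT,
    Basis.LEGAL_CLAIMS,
    Basis.PROTECT_OTHERS,
}


def may_process(restricted: bool, basis: Basis) -> bool:
    """Allow processing unless the record is restricted and the basis is not exempt."""
    return (not restricted) or basis in PERMITTED_WHEN_RESTRICTED


# Usage: a restricted record is excluded from a routine training run.
include_in_training = may_process(restricted=True, basis=Basis.ROUTINE)
```

Checking the flag at the point of use, rather than copying restricted records to a separate store, keeps the restriction reversible once the underlying dispute is resolved.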

Right to Data Portability: This right allows individuals to obtain and reuse their personal data for different services, enabling them to move their data easily between different IT environments. AI vendors must provide data in a structured, commonly used, and machine-readable format to facilitate this right.
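JSON is one commonly used format that satisfies the "structured, commonly used, and machine-readable" description. The sketch below shows a minimal export using only the Python standard library; the envelope fields (`subject_id`, `exported_at`, `format_version`) and the `export_subject_data` helper are hypothetical choices, not a format prescribed by either regulation.

```python
import json
from datetime import datetime, timezone


def export_subject_data(subject_id: str, records: dict) -> str:
    """Serialize one individual's data as a self-describing JSON document."""
    payload = {
        "subject_id": subject_id,
        "exported_at": datetime.now(timezone.utc).isoformat(),
        "format_version": 1,  # hypothetical version tag for downstream importers
        "data": records,
    }
    # sort_keys gives stable output; ensure_ascii=False keeps names readable.
    return json.dumps(payload, indent=2, sort_keys=True, ensure_ascii=False)


# Usage: the resulting string can be written to a file or returned by an API.
document = export_subject_data(
    "u-42",
    {"profile": {"name": "Zoë"}, "preferences": {"language": "en"}},
)
```

Including a version tag and an export timestamp makes the file easier for a receiving service to interpret without contacting the original vendor.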

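Right to Object to Processing: Individuals can object to the processing of their personal data, particularly when it is based on legitimate interests or tasks carried out in the public interest. This right covers profiling carried out on those grounds, and under GDPR the right to object to processing for direct marketing is absolute. Safeguards specific to solely automated decisions are addressed separately under Article 22.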

Rights in Relation to Automated Decision-Making and Profiling: GDPR (Article 22) grants individuals specific rights concerning decisions based solely on automated processing, including profiling, that produce legal effects concerning them or similarly significantly affect them. The CCPA, as amended by the CPRA, approaches the topic differently, providing for regulations on access and opt-out rights with respect to automated decision-making technology. Where such decisions are permitted under GDPR, the required safeguards include the right to obtain human intervention, to express one's point of view, and to contest the decision. The distinction between "fully automated decisions" and "decision assistance systems" is legally relevant; the prohibition under Article 22 GDPR does not apply if there is genuine human involvement and critical scrutiny of the AI's output.
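The distinction between a fully automated decision and a decision assistance system can be enforced in code by refusing to finalize a significant decision until a human reviewer has recorded an outcome. The sketch below is illustrative only: the `Decision` type, the score threshold, and the status strings are hypothetical, and a recorded reviewer does not by itself prove that the review involved genuine critical scrutiny.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    model_score: float                      # output of the AI model
    significant_effect: bool                # legal or similarly significant effect
    reviewer: Optional[str] = None          # who reviewed the suggestion
    reviewer_outcome: Optional[str] = None  # what the reviewer decided


def finalize(decision: Decision, threshold: float = 0.5) -> str:
    """Return the final outcome, holding significant cases for human review."""
    suggested = "approve" if decision.model_score >= threshold else "decline"
    if not decision.significant_effect:
        # Low-impact decisions may follow the model's suggestion directly.
        return suggested
    if decision.reviewer is None or decision.reviewer_outcome is None:
        # The model output is only a recommendation until a person decides.
        return "pending_human_review"
    # The reviewer's outcome is final and may differ from the model's suggestion.
    return decision.reviewer_outcome


# Usage: a loan-style decision stays pending until a reviewer acts on it.
unreviewed = Decision(model_score=0.2, significant_effect=True)
reviewed = Decision(model_score=0.2, significant_effect=True,
                    reviewer="analyst-1", reviewer_outcome="approve")
```

Storing the reviewer's identity and outcome alongside the model score also produces the kind of record that helps demonstrate human involvement if a decision is later contested.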

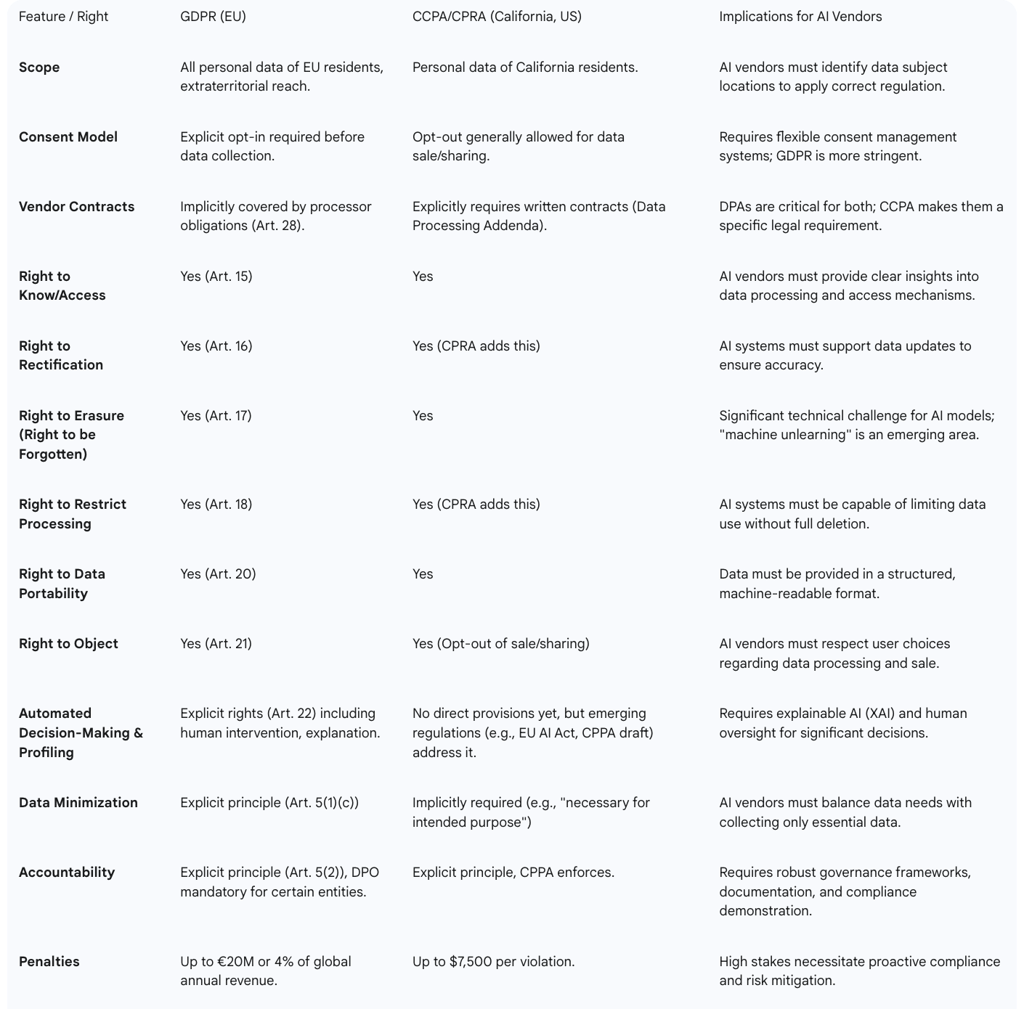

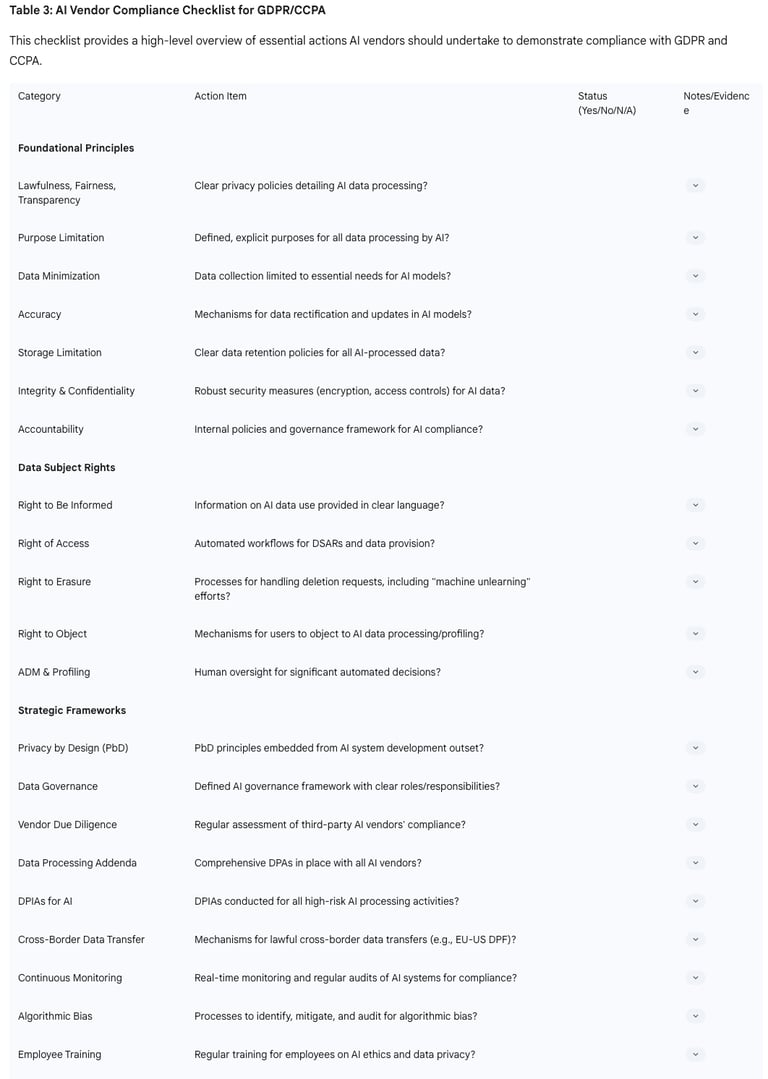

Table 1: Comparative Overview of GDPR and CCPA Principles and Data Subject Rights for AI Vendors

This table provides a concise comparison of key features and rights under GDPR and CCPA, offering a quick reference for AI vendors navigating compliance in both jurisdictions. The nuances in consent models and specific rights highlight the need for a tailored, rather than a one-size-fits-all, compliance strategy.