Quantum Artificial Intelligence: Foundations, Frontier Advancements, and Strategic Outlook

Quantum Artificial Intelligence (QAI) represents a profound convergence of quantum computing and artificial intelligence, poised to redefine the limits of computational capability. This interdisciplinary field harnesses the unique principles of quantum mechanics to significantly enhance machine learning and problem-solving, offering solutions to complex challenges currently intractable for classical systems. The inherent ability of quantum computers to process data in entirely new ways, leveraging phenomena like superposition and entanglement, positions QAI as a transformative force across numerous sectors.

Recent advancements in quantum hardware, particularly the stabilization and development of logical qubits, alongside significant theoretical algorithmic developments, are accelerating QAI's progress. While the field remains in its nascent stages, with much research still in the proof-of-concept phase, QAI is anticipated to deliver transformative impacts across industries such as drug discovery, materials science, and finance. However, significant challenges persist, including the inherent instability of quantum hardware, scalability limitations, and the critical need for highly specialized expertise. The future trajectory of QAI points strongly towards hybrid quantum-classical systems, where artificial intelligence itself plays a crucial role in improving the reliability and efficiency of quantum hardware and software. To fully realize QAI's immense potential and responsibly navigate its inherent risks, proactive investment in foundational research, the cultivation of interdisciplinary talent, and the establishment of robust governance frameworks are essential.

II. Fundamentals of Quantum Artificial Intelligence

Defining Quantum AI: The Synergy of Quantum Computing and AI

Quantum Artificial Intelligence (QAI) stands at the intersection of two transformative technological domains: quantum computing and artificial intelligence. At its core, QAI refers to the implementation of AI algorithms on quantum computers, leveraging the unique principles of quantum mechanics to process data in fundamentally new ways. This integration moves beyond the binary bits (0s and 1s) of classical computers, which can only exist in one state at a time, to quantum bits, or qubits, which possess far richer information-carrying capabilities. By harnessing these quantum properties, QAI aims to significantly enhance machine learning and problem-solving capabilities, addressing computational challenges that are beyond the reach of conventional AI systems.

Core Quantum Principles: Qubits, Superposition, Entanglement, and their Computational Advantage

The computational prowess of QAI stems directly from the unique principles of quantum mechanics:

Qubits: Unlike classical bits, which are restricted to a state of either 0 or 1, qubits can exist in a superposition of both states, described by a pair of complex amplitudes. A system of n qubits is described by 2^n amplitudes, so the size of the state space grows exponentially with the number of qubits. A measurement returns only a single n-bit outcome, however, so this is not equivalent to running 2^n classical calculations and reading out every result. The theoretical advantage of QAI over traditional AI models comes from algorithms that use interference to concentrate probability on useful answers.

Superposition: This principle allows AI models to explore vast solution spaces simultaneously. Instead of sequentially checking each possibility, a quantum algorithm can operate on a superposition of all possible inputs, effectively evaluating numerous potential solutions in parallel. This capability can drastically reduce the time required for complex computations, particularly in areas like optimization and data analysis.

Entanglement: Entanglement describes a phenomenon where two or more qubits become linked in such a way that their measurement outcomes are correlated regardless of the physical distance between them, and the state of each qubit can no longer be described independently of the others. These correlations cannot be used to transmit information faster than light, but they allow qubits to correlate in ways that are impossible for classical bits and serve as a key resource for quantum algorithms. The combined power of superposition and entanglement could revolutionize machine learning, optimization, and data analysis by significantly cutting down the time needed for complex computations.
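The three principles above can be illustrated with a small state-vector sketch. This is a toy classical simulation written for this report, not a quantum program; the function names are hypothetical, and the qubit ordering (leftmost bit is the first qubit) is an assumption of the sketch.

```python
import math
import random

def uniform_superposition(n):
    """State vector after a Hadamard gate on each of n qubits that start in |0>."""
    dim = 2 ** n                      # n qubits need 2**n amplitudes
    amp = 1 / math.sqrt(dim)
    return [complex(amp, 0.0)] * dim

def probabilities(state):
    """Born rule: an outcome's probability is the squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in state]

def sample(state, n_qubits, rng):
    """Simulate one measurement of all qubits; returns a bitstring such as '01'."""
    index = rng.choices(range(len(state)), weights=probabilities(state))[0]
    return format(index, f"0{n_qubits}b")

# Bell state (|00> + |11>)/sqrt(2); amplitudes are indexed by 00, 01, 10, 11.
bell = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]

if __name__ == "__main__":
    rng = random.Random(0)
    print(len(uniform_superposition(10)))            # 1024 amplitudes for 10 qubits
    print([sample(bell, 2, rng) for _ in range(8)])  # only '00' and '11' can appear
```

Each measurement yields a single bitstring, which is why the exponential state space does not translate directly into an exponential number of readable results. In the Bell state, the two bits always agree even though each one on its own is random.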

The fundamental difference in information representation and processing, moving from sequential, binary logic to the manipulation of a state space that grows exponentially with the number of qubits, signifies a paradigm shift that extends beyond mere speed improvements. Classical AI often relies on iterating through possibilities or employing clever heuristics to find approximate solutions. Quantum AI, by contrast, can encode an exponential number of states in a single register and act on them concurrently. This changes the computational approach from a "brute force and clever heuristics" model to one that can, in theory, explore the entire solution landscape simultaneously. This capability suggests a qualitative leap in problem-solving ability, enabling the tackling of problems that are computationally intractable for classical methods within any practical amount of time, due to the exponential growth of possibilities. Examples include certain complex optimization problems and high-fidelity molecular simulations.

Distinction from Classical AI: Paradigms, Capabilities, and Complementary Roles

The emergence of Quantum AI does not signal an outright replacement of classical AI, but rather a powerful evolution towards a complementary computational landscape.

Classical AI: Traditional AI systems operate on classical computers using binary bits, which inherently limits their processing power. While highly mature and widely deployed, classical AI excels at tasks such as pattern recognition, data analysis, and predictive modeling within the confines of current computational limits. However, it struggles with exponentially larger datasets and problems that demand vast computational resources, such as complex combinatorial optimization or intricate quantum simulations.

Quantum AI: By leveraging qubits, QAI could in principle work with exponentially larger state spaces and speed up certain computations well beyond what classical hardware allows. It is best suited for complex problem-solving in domains where classical AI falters, including optimizing supply chains, predicting protein folding, and improving financial risk assessments. QAI's ability to process high-dimensional data in parallel may also offer an advantage in areas like image classification and natural language processing, although such gains have yet to be demonstrated at practical scale.

Rather than replacing classical computing, QAI is expected to complement and enhance existing systems. This leads to the "hybrid imperative," a fundamental architectural choice driven by the inherent strengths and weaknesses of both paradigms. Classical computers remain highly efficient for routine, sequential tasks and general-purpose data management. Quantum computers, however, excel at specific, computationally hard subroutines where quantum advantage can be realized. The specialized nature of quantum advantage, coupled with the current fragility and high cost of quantum hardware, necessitates offloading general-purpose tasks to classical systems. This approach ensures resource efficiency and practical applicability, maximizing the return on investment in quantum technologies. This implies that the future of advanced computation will be deeply integrated, requiring new software stacks and programming paradigms that seamlessly orchestrate classical and quantum resources. This also suggests a strategic shift within the quantum industry from focusing solely on hardware development to prioritizing integrated solutions and sophisticated software abstraction layers. Organizations will likely adopt hybrid approaches, using QAI for specialized computations while relying on classical AI for routine tasks.

III. Quantum Machine Learning (QML) and Quantum Neural Networks (QNNs)

Theoretical Frameworks of QML: Enhancing Data Processing and Computational Speed

Quantum Machine Learning (QML) is a burgeoning research area that explores the intricate interplay between quantum computing and machine learning. Its primary objective is to leverage qubits and quantum operations to significantly enhance the computational speed and data storage capabilities of algorithms. QML investigates whether quantum computers can provide acceleration for the training or evaluation phases of machine learning models. Conversely, machine learning techniques are also being applied to aid in foundational quantum research, such as uncovering novel quantum error-correcting codes, estimating the properties of complex quantum systems, or developing entirely new quantum algorithms. QML represents the primary subset of QAI focused on augmenting AI capabilities through the application of quantum principles.

Quantum Associative Memories and Pattern Recognition: Mechanisms for Superior Capacity

A compelling example of QML's potential lies in Quantum Associative Memories (QAMs), which offer a qualitative leap over their classical counterparts in pattern recognition. Associative memories are designed to recognize stored content based on a measure of similarity, a critical function for machine learning tasks like pattern recognition. Classical associative memories, however, are severely limited by a phenomenon known as "cross-talk." When too many patterns are stored, spurious memories emerge and proliferate, leading to a disordered energy landscape where retrieval becomes impossible. The number of storable patterns in classical systems is typically limited by a linear function of the number of neurons.

Quantum associative memories overcome this fundamental limitation by storing patterns in a unitary matrix that acts on the Hilbert space of qubits. Retrieval in QAMs is achieved through the unitary evolution of a fixed initial state to a quantum superposition of the desired patterns, with a probability distribution peaked on the most similar input pattern. This retrieval process is inherently probabilistic due to its quantum nature. Critically, QAMs are inherently free from cross-talk, meaning spurious memories are never generated. This absence of cross-talk directly contributes to their superior capacity, allowing them to efficiently store any polynomial number of patterns, a significant advantage over the linear capacity limit of classical systems.
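The classical cross-talk limit described above can be reproduced with a small Hopfield-style network. The sketch below is an illustration only: the Hebbian storage rule, synchronous updates, and all function names are assumptions chosen for brevity, and it models the classical memory, not a quantum associative memory.

```python
import random

def train_hebbian(patterns):
    """Hebbian weights: W[i][j] is the sum over patterns of p[i]*p[j], zero diagonal."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def update(w, s):
    """One synchronous step: every neuron takes the sign of its weighted input."""
    n = len(s)
    return [1 if sum(w[i][j] * s[j] for j in range(n)) >= 0 else -1
            for i in range(n)]

def recall(w, state, max_steps=20):
    """Iterate updates until the state stops changing or max_steps is reached."""
    s = list(state)
    for _ in range(max_steps):
        new = update(w, s)
        if new == s:
            break
        s = new
    return s

def stable_fraction(n_neurons, n_patterns, rng):
    """Fraction of stored random patterns that a single update leaves unchanged."""
    patterns = [[rng.choice((-1, 1)) for _ in range(n_neurons)]
                for _ in range(n_patterns)]
    w = train_hebbian(patterns)
    return sum(update(w, p) == p for p in patterns) / n_patterns

if __name__ == "__main__":
    rng = random.Random(0)
    for k in (2, 5, 20, 40):
        print(k, stable_fraction(64, k, rng))
```

With a handful of patterns the stored memories are typically stable, and a corrupted input is pulled back to the nearest stored pattern. As the number of patterns approaches a fixed fraction of the neuron count, the stable fraction tends to collapse, which is the cross-talk effect in action.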

The case of quantum associative memories highlights a crucial distinction within QML: some approaches aim to use quantum computers as accelerators for classical machine learning problems, for instance, by performing complex matrix operations faster or optimizing training processes. Other approaches, exemplified by QAMs, focus on developing quantum-native solutions that leverage quantum phenomena to fundamentally overcome limitations inherent in classical computational models, leading to qualitatively different capabilities, such as freedom from cross-talk and a storage capacity beyond the classical linear limit. This suggests a dual research strategy: one focused on near-term hybrid solutions to accelerate existing AI, and another on foundational quantum algorithms that could redefine what AI can achieve in the long run.

Computational Models of QNNs: From Feed-Forward Networks to Variational Circuits

Quantum Neural Networks (QNNs) represent a specific class of machine learning models that integrate concepts from quantum computing and artificial neural networks. The primary motivation behind their development is to create more efficient algorithms, particularly for applications involving large datasets, by exploiting quantum parallelism, interference, and entanglement.

Early QNN models, emerging in the 1990s, attempted to directly translate the modular structure and nonlinear activation functions of classical feed-forward and recurrent neural networks into quantum algorithms. However, these direct emulations often faced significant challenges, as chains of linear and nonlinear computations can be "unnatural" for quantum computers, and the no-cloning theorem poses a fundamental hurdle for direct copying of qubit states between layers, unlike classical neurons. The advantage of these early models for machine learning has not been conclusively established. More recent research has addressed these problems by suggesting special measurement schemes or modifications to neural networks to make them more amenable to quantum computing. Some proposals involve replacing classical binary neurons with qubits, often referred to as "qurons," allowing neural units to exist in a superposition of "firing" and "resting" states.
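The no-cloning obstacle mentioned above can be made concrete with a two-qubit sketch. A CNOT gate copies the basis states |0> and |1> into a blank qubit, but applied to a superposition it produces an entangled state instead of two independent copies. The helper names below are hypothetical, and the simulation is a plain classical calculation on amplitude lists.

```python
import math

def kron(a, b):
    """Tensor product of two state vectors given as lists of amplitudes."""
    return [x * y for x in a for y in b]

def cnot(state):
    """CNOT on two qubits (first qubit is control): swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def fidelity(u, v):
    """Squared overlap |<u|v>|^2 between two normalized state vectors."""
    inner = sum(complex(x).conjugate() * y for x, y in zip(u, v))
    return abs(inner) ** 2

def copy_attempt_fidelity(psi):
    """Fidelity between CNOT applied to (psi, |0>) and the ideal clone (psi, psi)."""
    blank = [1.0, 0.0]
    return fidelity(cnot(kron(psi, blank)), kron(psi, psi))

zero = [1.0, 0.0]
plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]

if __name__ == "__main__":
    print(copy_attempt_fidelity(zero))  # basis state: the copy is perfect
    print(copy_attempt_fidelity(plus))  # superposition: only half overlap with a true clone
```

This is why a quantum layer cannot simply fan out a neuron's state to several successors the way a classical network does, and why later proposals rely on measurement schemes or restructured circuits.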

Variational Quantum Circuits (VQCs): Architecture, Optimization, and Role in Near-Term Devices

Increasingly, the term "quantum neural network" is used to refer to variational or parameterized quantum circuits (VQCs). VQCs represent a pragmatic and powerful hybrid approach, blending quantum computation with classical optimization techniques.

The architecture of a VQC typically comprises three main components:

Parameterized Quantum Circuit (Ansatz): This is a sequence of quantum gates with adjustable parameters. These parameters allow the circuit to be modified and optimized during the training process, enabling the exploration of a vast solution space. Classical data is first encoded into the quantum states of qubits, and then the parameterized quantum circuit is applied.

Cost Function: This function measures the quality of the solution obtained from the quantum circuit. It is typically calculated by measuring the output qubits and classically processing the results.

Classical Optimizer: An algorithm that iteratively adjusts the parameters of the quantum circuit to minimize the defined cost function. Classical optimization methods, such as gradient descent, are commonly employed to train VQCs by iteratively updating circuit parameters based on the computed cost. Open-source frameworks like PennyLane facilitate this process by supporting quantum differentiable programming, enabling the training of quantum circuits.
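The three components above can be sketched for the smallest possible case: one qubit, one parameter. The example below simulates the circuit by hand rather than using PennyLane or any quantum framework; the choice of an RY rotation as the ansatz, the Pauli-Z expectation as the cost, the parameter-shift rule for gradients, and the learning rate are all assumptions made for illustration. Data encoding is omitted.

```python
import math

def ansatz(theta):
    """Parameterized circuit: RY(theta) applied to |0>, returned as a state vector."""
    return [math.cos(theta / 2), math.sin(theta / 2)]

def cost(theta):
    """Cost function: expectation value of Pauli-Z on the circuit output."""
    a0, a1 = ansatz(theta)
    return a0 ** 2 - a1 ** 2

def parameter_shift_gradient(theta):
    """Gradient obtained from two extra circuit evaluations at theta +/- pi/2."""
    return 0.5 * (cost(theta + math.pi / 2) - cost(theta - math.pi / 2))

def train(theta=0.1, learning_rate=0.4, steps=100):
    """Classical optimizer: plain gradient descent on the circuit parameter."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta

if __name__ == "__main__":
    theta = train()
    print(round(theta, 4), round(cost(theta), 4))  # theta approaches pi, cost approaches -1
```

On real hardware, `cost` would be estimated from repeated measurements rather than computed exactly, which is where shot noise and device errors enter the loop. The parameter-shift rule is attractive in that setting because it needs only additional runs of the same circuit.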

The variational approach is particularly significant because it makes VQCs resilient to certain types of noise and errors inherent in current quantum hardware. This resilience makes VQCs highly suitable for Noisy Intermediate-Scale Quantum (NISQ) devices, which are currently limited in size and prone to errors. VQCs bridge the gap between theoretical quantum advantage and the practical limitations of existing quantum hardware.

VQCs are being applied to a diverse range of problems by designing appropriate cost functions and tailored quantum circuits. These applications include optimization problems (such as the maximum cut problem, traveling salesman problem, and portfolio optimization), various machine learning tasks (including classification, regression, and clustering), and quantum chemistry (simulating molecular systems and estimating ground state energies).
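As an example of what "designing an appropriate cost function" means, the maximum cut problem can be stated in a few lines. The sketch below is classical and uses exhaustive search, which is feasible only for very small graphs; the graph and function names are hypothetical. A variational approach would try to maximize the expected value of the same quantity over the bitstrings measured from the circuit.

```python
from itertools import product

def cut_value(bits, edges):
    """Number of edges whose endpoints fall on opposite sides of the partition."""
    return sum(1 for u, v in edges if bits[u] != bits[v])

def best_cut(n_nodes, edges):
    """Exhaustive search over all 2**n assignments; only viable for tiny graphs."""
    return max(product((0, 1), repeat=n_nodes),
               key=lambda bits: cut_value(bits, edges))

# A 4-node ring: alternating the two groups cuts every edge.
square = [(0, 1), (1, 2), (2, 3), (3, 0)]

if __name__ == "__main__":
    best = best_cut(4, square)
    print(best, cut_value(best, square))
```

The search space doubles with every added node, which is the reason this problem is a common benchmark for quantum optimization heuristics.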

The shift from direct quantum emulation of classical neural networks to VQCs reflects a pragmatic adaptation to the realities of current quantum hardware, specifically the NISQ era. The "unnatural" fit of classical neural network structures for quantum computers, coupled with challenges like the no-cloning theorem, compelled researchers to find quantum-native yet hardware-compatible models. VQCs, with their hybrid quantum-classical optimization loop, are inherently designed to be more resilient to noise and errors, establishing them as the most viable path for demonstrating quantum machine learning on existing, imperfect hardware. This trend indicates that the immediate future of QML will likely be dominated by hybrid approaches that cleverly distribute computational load between classical and quantum processors, rather than relying solely on purely quantum systems, until fault-tolerant quantum computers become widely available.

IV. Recent Breakthroughs and Research Landscape (2024-2025)

The period of 2024-2025 has witnessed significant advancements across the Quantum AI landscape, particularly in hardware development, algorithmic innovation, and the synergistic application of AI to quantum challenges.

Hardware Innovations: Progress in Logical Qubits, Fault Tolerance, and Qubit Stabilization

A notable shift occurred in the quantum technology industry in 2024, moving beyond simply increasing the raw number of physical qubits to a concentrated effort on stabilizing qubits. This emphasis on stability represents a critical turning point, indicating that quantum technology is progressing towards becoming a potentially reliable component for mission-critical industries.

Logical Qubits: A crucial development in 2024 was the experimental demonstration of logical qubits (error-corrected qubits that function reliably over time) beginning to outperform their underlying physical qubits. This is a pivotal step towards building truly scalable quantum systems. For instance, in September 2024, Microsoft and Quantinuum successfully entangled 12 logical qubits, tripling the logical qubit count from just six months prior and achieving a logical circuit error rate far below that of the underlying physical qubits (0.0011 versus 0.024). This achievement led to the first-ever chemistry simulation combining high-performance computing, AI, and quantum computing.

Fault Tolerance: Advances in physical gate fidelity, particularly in trapped ion systems, have brought error rates closer to the theoretical threshold required for fault tolerance. This threshold marks the point where adding more qubits and error correction mechanisms can reliably reduce errors rather than compound them. Consequently, researchers now consider it plausible that quantum computers will be capable of performing useful scientific simulations in fields such as chemistry and materials science within the next decade.

Qubit Architectures and Control: Companies are actively pursuing diverse qubit architectures and control solutions. QuEra, for example, launched a logical quantum processor based on reconfigurable atom arrays, while Atom Computing and Microsoft are collaborating on quantum error correction solutions. Breakthroughs in semiconductor nanolasers are also enhancing optical technologies, which could have implications for future quantum hardware, particularly in neuromorphic computing and hybrid optical-electronic systems.

This shift signifies a critical maturation in quantum hardware development. Previously, the focus was on simply increasing the number of physical qubits. However, the inherent fragility of qubits, characterized by decoherence and high error rates, meant that merely adding more noisy qubits did not necessarily lead to more powerful or reliable computation. The understanding that error correction itself demands thousands of physical qubits to create a single stable logical qubit has driven the industry to prioritize the quality (stability, error reduction) over the raw quantity of physical qubits. This progression indicates that the path to achieving "quantum advantage" (outperforming classical computers on commercially viable tasks) will be paved by robust, error-corrected logical qubits, even if it implies a slower growth in total qubit numbers in the short term. This also underscores the increasing importance of quantum error correction (QEC) as a core research and development area.
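The threshold idea behind this emphasis on quality can be illustrated with the simplest error-correcting scheme, a repetition code decoded by majority vote. This is a classical toy model chosen for clarity, not one of the quantum codes used in the experiments above, and its threshold of 0.5 is specific to this model; the function name is hypothetical.

```python
from math import comb

def logical_error_rate(p, n=3):
    """Probability that a majority vote over n copies fails (more than half flip).

    Assumes n is odd and that each copy flips independently with probability p.
    """
    return sum(comb(n, k) * p ** k * (1 - p) ** (n - k)
               for k in range(n // 2 + 1, n + 1))

if __name__ == "__main__":
    for p in (0.01, 0.6):
        print(p, logical_error_rate(p, 3), logical_error_rate(p, 5))
```

For p = 0.01 the encoded error rate falls below the physical rate and keeps falling as copies are added. For p = 0.6, above this toy model's threshold, adding copies makes the result worse, which is the sense in which more noisy qubits can compound errors rather than reduce them.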

Algorithmic Advancements: Developments in Quantum Optimization, Simulation, and Error Correction

While hardware developments have been impressive, algorithmic progress comparable to foundational quantum algorithms like Shor's or Grover's remains a significant area of ongoing research. Nevertheless, substantial advancements are being made in various algorithmic domains:

Optimization: The NASA Quantum Artificial Intelligence Laboratory (QuAIL) has conducted pioneering work in quantum optimization. This includes the development of hybrid quantum-classical algorithms, such as the quantum alternating-operator ansatz and iterative quantum optimization, with potential applications in complex logistical problems like flight gate assignment. Quantum optimization algorithms are also being applied to specific problems, such as the Maximum Cut Problem, demonstrated on superconducting quantum computers.

Simulation: Algorithmic progress includes optimized quantum simulation algorithms for scalar quantum field theories and compiler optimizations for quantum Hamiltonian simulation. Quantum AI holds the promise of accelerating drug discovery by simulating molecular interactions at the quantum level with unprecedented accuracy.

Error Correction & Mitigation: A particularly significant area of algorithmic development involves the crucial role of AI in enhancing quantum error correction (QEC). Advanced machine learning techniques, including convolutional neural networks for decoding, reinforcement learning for real-time adaptability, and generative models for capturing complex noise dynamics, are being leveraged to improve QEC. AI can assist in discovering more efficient error-correcting codes and optimizing quantum algorithms, leading to more reliable and longer-running computations. NASA QuAIL has also reported advancements in uniformly decaying subspaces for error-mitigated quantum computation.

This highlights a powerful, symbiotic relationship: it is not merely "Quantum for AI" (using quantum computers to enhance AI algorithms), but increasingly "AI for Quantum" (using AI to accelerate the development and reliability of quantum hardware and software). Quantum hardware faces severe limitations such as noise and decoherence, and traditional QEC methods are often resource-intensive and complex. AI and machine learning techniques offer a promising avenue to overcome these QEC limitations by improving decoding efficiency, adaptability, and error modeling. This creates a self-reinforcing innovation cycle where AI optimizes quantum systems, which in turn could run more powerful AI algorithms. This interdependency implies that the progress of Quantum AI relies heavily not only on breakthroughs in quantum physics but also on advancements in classical AI and machine learning, particularly in areas like control systems and error mitigation.

Key Research Highlights: Insights from Academic Papers (ArXiv, Nature) and Institutional Contributions (e.g., NASA QuAIL)

The vibrant research landscape of QAI is evidenced by numerous contributions from leading academic institutions and research laboratories:

NASA QuAIL: The NASA Quantum Artificial Intelligence Laboratory (QuAIL) is mandated to advance quantum computation for future NASA missions. Its 2024-2025 research portfolio includes significant theoretical advancements such as efficient photon distillation protocols, methods for generating hard Ising instances for benchmarking optimization algorithms, advancements in quantum error correction utilizing the Bacon-Shor code, compiler optimizations for quantum Hamiltonian simulation, and the synthesis of qudit circuits. Experimentally, QuAIL has reported on benchmarking qudit performance and demonstrating quantum optimization algorithms for problems like the Maximum Cut Problem on superconducting quantum hardware.

Nature Communications: Recent work published in Nature Communications on quantum machine learning indicates that fault-tolerant quantum computing could provide provably efficient resolutions for generic (stochastic) gradient descent algorithms, potentially enhancing large-scale machine learning problems. Another impactful paper in Nature Communications demonstrated an exponential reduction in the training data requirements for predicting ground state properties of quantum many-body systems by ingeniously incorporating knowledge of the system's geometry into the machine learning algorithm.

ArXiv: Preprints on ArXiv from 2024-2025 highlight ongoing efforts to integrate quantum computing and machine learning (QML) and to automate the design of high-performance quantum circuit architectures. One notable research proposal combines quantum mechanics with Generative AI to simulate the emergence and evolution of social norms, modeling uncertainty and interdependence in complex social systems.

AAAI 2025: Mitsubishi Electric Research Laboratories (MERL) presented several papers at the Association for the Advancement of Artificial Intelligence (AAAI) 2025. These contributions included a quantum diffusion model designed to overcome challenges in quantum few-shot learning and a quantum counterpart of implicit neural representation (quINR) that leverages the expressivity of quantum neural networks for improved signal compression.

These examples provide concrete evidence of the active and diverse research landscape in QAI, showcasing both theoretical breakthroughs and experimental progress from a wide array of leading institutions and companies globally.

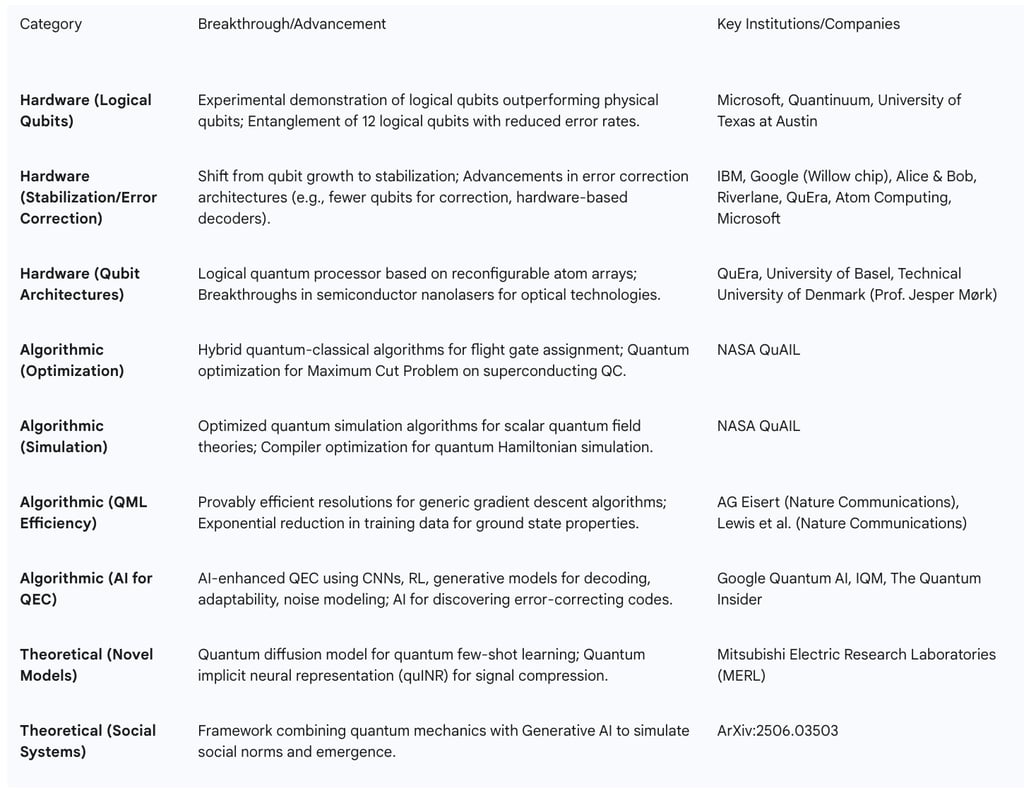

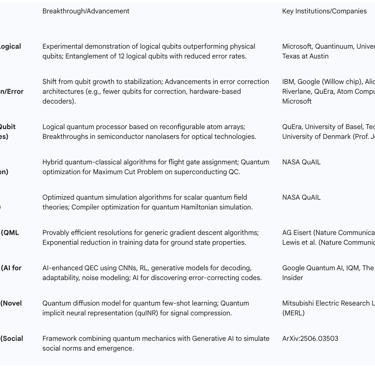

Table 2: Recent Quantum AI Breakthroughs (2024-2025)

Value of Table 2: This table provides a structured overview of the most significant and recent advancements in Quantum AI between 2024 and 2025. It categorizes breakthroughs into hardware and algorithmic domains, making it easier to track progress in distinct but interconnected areas. By listing key institutions and companies alongside specific achievements and their supporting references, the table offers a concise yet comprehensive snapshot of the active research front, highlighting who is leading innovation and where the most impactful developments are occurring.

V. Transformative Applications Across Industries

Quantum Artificial Intelligence holds the potential to disrupt and revolutionize numerous industries by solving problems previously considered intractable for classical computation. The ability of quantum systems to process vast datasets and explore complex solution spaces simultaneously unlocks new capabilities across diverse sectors.

Accelerating Drug Discovery and Materials Science

One of the most promising and frequently cited applications of QAI is in drug discovery and materials science. Quantum AI could significantly accelerate the discovery and development of new drugs by simulating molecular interactions at the quantum level with unprecedented accuracy. This capability can drastically reduce the time and cost associated with pharmaceutical research, leading to faster identification of new molecules for therapeutic use. Companies are already making progress in this area, using quantum-accelerated simulations to train better machine learning models for drug discovery. This forms a virtuous cycle where improved quantum chemistry simulations generate superior training data, ultimately enhancing machine learning models for pharmaceutical research.

Similarly, in materials science, QAI can enable the discovery of new materials with extraordinary properties. Understanding how materials behave at the atomic and molecular level is crucial for designing advanced materials, but these simulations rapidly exceed the capacity of classical computers as system complexity grows. Quantum algorithms can model these interactions with unparalleled precision, accelerating the development of cutting-edge technologies, fostering environmental sustainability, and advancing healthcare through novel materials. Recent work has even shown an exponential reduction in the training data required for predicting ground state properties of quantum many-body systems by incorporating geometric knowledge into machine learning algorithms, making such applications more feasible.

Revolutionizing Financial Modeling and Optimization

The financial sector is another area ripe for significant disruption by Quantum AI. Managing and optimizing investment portfolios involves computationally intensive analysis across vast datasets. Quantum computers could streamline this process with unprecedented speed and accuracy by analyzing detailed market data and accounting for numerous variables simultaneously. This capability extends to improving financial risk assessments, enhancing trading strategies, and delivering competitive insights to investors. Furthermore, quantum techniques, capable of analyzing patterns in massive datasets, could more effectively identify anomalies, thereby transforming fraud detection and mitigating economic losses on a global scale. Startups like Scenario X are already developing financial risk management platforms that integrate advanced scenario-based modeling with real-time data processing for optimized decision-making.

Enhancing Complex Problem-Solving: Logistics, Cybersecurity, and Beyond

Beyond drug discovery and finance, QAI's capacity for complex problem-solving extends to a multitude of other critical domains:

Logistics and Supply Chain Optimization: Seemingly simple problems like optimizing delivery routes or managing resources across complex supply chains often involve immense computational challenges that push the limits of even the most advanced classical systems. Quantum algorithms have the potential to not only reduce computational time but also provide more efficient and sustainable solutions, delivering tangible benefits to the global economy by optimizing shipping and traffic routes in real-time, reducing costs and emissions.

Cybersecurity: Quantum computing is poised to rapidly transform cybersecurity. While quantum computers pose a threat to current encryption methods (e.g., Shor's algorithm for factoring large numbers), quantum AI also introduces new security principles through quantum cryptography, such as quantum key distribution, offering inherently secure communication systems. Organizations are exploring quantum communication technologies to defend against future threats.

Natural Language Processing (NLP): Quantum-enhanced NLP models could process language structures more efficiently, leading to better chatbots, translators, and AI assistants. Quantum Large Language Models (QLLMs) are being developed to leverage quantum computation for enhanced language processing and advanced machine learning capabilities, with patented quantum algorithms conducting large-scale tests to maximize performance in data-heavy tasks.

Autonomous Vehicle Testing: Accelerated road testing for autonomous systems demands simulating millions of scenarios related to weather, traffic, and terrain. This involves complex optimization, risk modeling, and anomaly detection in high-dimensional sensor data. Quantum computing will be instrumental in performing these simulations much faster.

Food Waste Reduction: Quantum algorithms could be applied to optimize the food supply chain, improving demand forecasting, logistics, and resource allocation to reduce significant global food waste.

Emerging Applications and Use Cases

The potential of QAI extends to other areas such as telecom network optimization, where it can enhance network resilience and user experiences. In manufacturing, AI is already being used to create virtual models for process optimization, and quantum capabilities could further enhance these digital twin technologies for greater efficiency and cost savings. Furthermore, research is exploring combining quantum mechanics with generative AI to simulate complex social systems and the emergence of social norms, offering a novel computational lens for social theory.

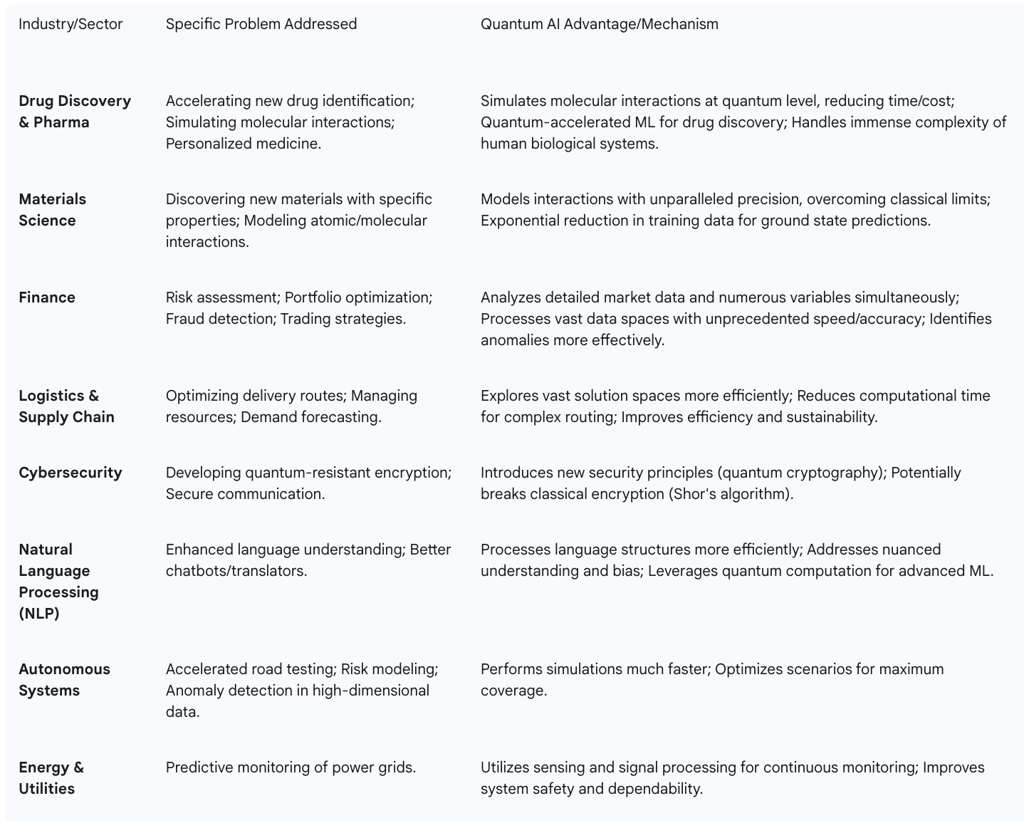

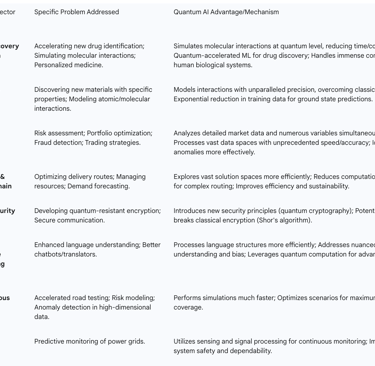

Table 3: Illustrative Applications of Quantum AI by Industry

Value of Table 3: This table provides concrete, illustrative examples of Quantum AI's potential impact across various industries. By clearly outlining the specific problems QAI can address and the mechanisms by which it offers an advantage, it moves beyond abstract concepts to practical applications. This helps stakeholders visualize the tangible benefits and identify areas where QAI could deliver significant return on investment, fostering a deeper understanding of its real-world relevance.

VI. Challenges, Limitations, and the Path Forward

Despite the immense promise of Quantum AI, its widespread adoption and full realization face significant challenges across hardware, software, and human capital domains. Understanding these limitations is critical for charting a realistic path forward.

Hardware Constraints: Decoherence, Scalability, and Environmental Requirements

Current quantum computing hardware is in its infancy and faces three primary limitations: qubit instability, scalability challenges, and the need for highly specialized environments.

Qubit Instability (Decoherence): Qubits are extremely fragile and highly susceptible to errors due to environmental interference, a phenomenon known as decoherence. Superconducting qubits typically lose their delicate quantum state within microseconds to milliseconds; trapped-ion qubits can stay coherent considerably longer, but their gates are also slower, so in both cases only a limited number of operations fit within the coherence window. This restricts the duration and complexity of computations. Error rates for basic operations are also high, often ranging from 0.1% to 1% per gate, necessitating extensive error correction.

Scalability Challenges: Scaling quantum systems to useful sizes remains a major hurdle. While processors with 50-100+ qubits have been built, these systems often lack the necessary connectivity and uniformity for complex algorithms. Adding more qubits frequently increases noise and crosstalk (unwanted interactions between qubits), which degrades overall performance. The challenge is compounded by the fact that effective error correction demands thousands of physical qubits to create a single stable "logical qubit," a threshold that no current hardware can fully meet.

Specialized Environments: Quantum hardware requires highly specialized, costly, and complex environments for operation and maintenance. For instance, superconducting qubits must operate at temperatures near absolute zero (approximately -273.15°C), necessitating large, power-intensive dilution refrigerators. Trapped-ion systems require ultra-high vacuum chambers and precise laser control. These stringent environmental controls make integration with classical infrastructure difficult and often restrict users to cloud-based access rather than local hardware.
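To make the figures above more concrete, the following back-of-the-envelope sketch shows how per-gate error compounds over a circuit and how error-correction overhead scales. It rests on loudly stated assumptions that are not from this report: gate errors are treated as independent, the qubit count per logical qubit uses the rotated surface code layout (2d^2 - 1 physical qubits at code distance d), and the logical error rate uses a commonly quoted heuristic with an assumed threshold of 1% and prefactor of 0.1. The function names are illustrative.

```python
def circuit_success_probability(gate_error: float, n_gates: int) -> float:
    """Chance that no gate fails, assuming independent errors on every gate."""
    return (1.0 - gate_error) ** n_gates


def surface_code_physical_qubits(distance: int) -> int:
    """Physical qubits for one logical qubit in a rotated surface code:
    d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * distance * distance - 1


def heuristic_logical_error(gate_error: float, distance: int,
                            threshold: float = 1e-2, prefactor: float = 0.1) -> float:
    """Rule-of-thumb logical error rate per round (assumed model, not a measurement)."""
    return prefactor * (gate_error / threshold) ** ((distance + 1) / 2)


def smallest_distance_for(target: float, gate_error: float) -> int:
    """Smallest odd code distance whose heuristic logical error meets the target."""
    distance = 3
    while heuristic_logical_error(gate_error, distance) > target:
        distance += 2
    return distance


if __name__ == "__main__":
    for p in (1e-2, 1e-3):
        print(f"gate error {p:.1%}: 1000-gate circuit succeeds with "
              f"probability {circuit_success_probability(p, 1000):.3g}")
    d = smallest_distance_for(target=3e-10, gate_error=1e-3)
    print(f"distance {d} -> {surface_code_physical_qubits(d)} physical qubits per logical qubit")
```

Under these assumptions, a 1% gate error leaves almost no chance of a clean 1,000-gate run, which is why error correction is unavoidable. The overhead lands in the hundreds to thousands of physical qubits per logical qubit depending on the target error rate chosen.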

Algorithmic and Software Development Hurdles

The development of algorithms and software for Quantum AI presents its own set of formidable challenges:

Novel Algorithm Development: Quantum computers operate fundamentally differently from classical ones, necessitating entirely new algorithms that can leverage quantum properties effectively. Designing quantum algorithms that can exploit superposition and entanglement for machine learning and AI tasks is an intricate undertaking, demanding expertise across two highly specialized fields: quantum physics and machine learning.

Integration with Existing AI Frameworks: Most current AI systems are designed for classical computers. Seamlessly integrating quantum computing with these established frameworks requires extensive development to ensure efficient data transfer and effective hybrid processing. This involves creating new programming languages, compilers, and development frameworks from scratch, a journey that is still in its early stages.

Optimization Bottlenecks: Even classical AI models face optimization bottlenecks, particularly in training deep learning networks which require enormous datasets and computational power, consuming vast energy resources. While quantum algorithms like QAOA and VQE offer potential for enhanced optimization, developing scalable quantum machine learning algorithms that provide real-world advantages over classical AI remains an active research area.
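As a rough illustration of what a QAOA-style optimization involves, the sketch below simulates a depth-1 QAOA circuit for the Maximum Cut problem with a plain NumPy state vector and tunes its two angles with a classical grid search. The 4-node ring graph, the grid resolution, and all function names are assumptions chosen for illustration. A classical simulation like this only works for a handful of qubits and says nothing about quantum advantage.

```python
import numpy as np

# Illustrative problem instance (an assumption): a 4-node ring graph.
N_QUBITS = 4
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]


def cut_values(n, edges):
    """Cut size of every bitstring, indexed by the integer basis state."""
    values = np.zeros(2 ** n)
    for z in range(2 ** n):
        bits = [(z >> q) & 1 for q in range(n)]
        values[z] = sum(bits[i] != bits[j] for i, j in edges)
    return values


def apply_mixer(state, n, beta):
    """Apply exp(-i * beta * X) to every qubit of the state vector."""
    c, s = np.cos(beta), -1j * np.sin(beta)
    psi = state.reshape([2] * n)
    for q in range(n):
        psi = np.moveaxis(psi, q, 0)
        psi = np.stack([c * psi[0] + s * psi[1], s * psi[0] + c * psi[1]])
        psi = np.moveaxis(psi, 0, q)
    return psi.reshape(-1)


def expected_cut(gamma, beta, costs, n):
    """Expected cut size measured after one QAOA layer (cost phase, then mixer)."""
    state = np.full(2 ** n, 1 / np.sqrt(2 ** n), dtype=complex)  # uniform superposition
    state = np.exp(-1j * gamma * costs) * state                   # diagonal cost layer
    state = apply_mixer(state, n, beta)
    return float(np.abs(state) ** 2 @ costs)


def grid_search(costs, n, steps=41):
    """Classical outer loop: pick the angles with the highest expected cut."""
    best = (-1.0, 0.0, 0.0)
    for gamma in np.linspace(0, np.pi, steps):
        for beta in np.linspace(0, np.pi / 2, steps):
            value = expected_cut(gamma, beta, costs, n)
            if value > best[0]:
                best = (value, gamma, beta)
    return best


COSTS = cut_values(N_QUBITS, EDGES)
best_value, best_gamma, best_beta = grid_search(COSTS, N_QUBITS)
print(f"best expected cut {best_value:.3f} of {COSTS.max():.0f} "
      f"at gamma={best_gamma:.3f}, beta={best_beta:.3f}")
```

With angles set to zero the circuit is equivalent to random guessing. The tuned single layer does better but still falls short of the optimal cut, which reflects the point above: the open question is whether deeper circuits on real hardware can outperform classical heuristics.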

The Expertise Gap and Interdisciplinary Collaboration Needs

A significant challenge hindering the rapid advancement and deployment of Quantum AI solutions is the acute shortage of professionals with expertise spanning both quantum computing and artificial intelligence. This skills gap slows down research, development, and commercialization efforts. Addressing this requires fostering interdisciplinary collaboration and developing robust workforce development programs that bridge the knowledge divide between quantum physicists, computer scientists, and machine learning engineers. Collaborative platforms and initiatives are emerging to bring together these diverse experts to tackle the complex challenges of quantum error correction with AI-driven solutions.

Ethical and Societal Considerations: Access, Governance, and Responsible Development

As with any powerful emerging technology, Quantum AI raises profound ethical and societal questions that demand proactive consideration and responsible governance.

Technological Inequality and Access: The high cost and specialized nature of quantum hardware could exacerbate existing technological inequalities, widening the gap between nations and communities that can invest in and implement these cutting-edge systems and those that are left behind. Ensuring equitable access to quantum resources is a critical concern for future policy.

Bias and Misuse: The sheer power that QAI promises means it could become a tool of extraordinary benefit or significant risk, depending on how it is governed. As with classical AI, if QAI systems are trained on biased data, they may perpetuate and even amplify those biases in their decision-making, with potentially far-reaching consequences in fields like healthcare and finance where transparency is essential.

Algorithmic Transparency: Many advanced AI models function as "black boxes," making their decisions difficult to explain or interpret. The added complexity of quantum mechanics could further obscure the interpretability of QAI models, posing challenges for accountability and trust. Responsible governance must be built into the development process, rather than bolted on after widespread deployment.

VII. Future Trajectory and Long-Term Impact

The future trajectory of Quantum AI is characterized by continued rapid innovation, particularly through hybrid approaches, and a growing economic and societal impact that will reshape industries and human capabilities.

The Evolution of Hybrid Quantum-Classical Computing

The consensus among researchers and industry leaders is that the immediate future of Quantum AI will be dominated by hybrid quantum-classical computing models. This approach leverages the strengths of both computational paradigms: classical computers handle routine tasks, data pre- and post-processing, and optimization loops, while quantum computers are reserved for specific, computationally intensive subroutines where they can provide a quantum advantage. This cyclical interaction between quantum and classical systems is an emerging approach that enables researchers to take advantage of quantum parallelism while relying on classical systems for stability, scalability, and overall optimization. As quantum hardware matures, this hybrid model will facilitate the transition from noisy intermediate-scale quantum devices to more robust, error-corrected quantum computers.

Market Projections, Investment Trends, and Innovation Ecosystems

The economic impact of quantum technology, including Quantum AI, is projected to be substantial. Research indicates that the three core pillars of quantum technology—quantum computing, quantum communication, and quantum sensing—could collectively generate up to $97 billion in revenue worldwide by 2035. Quantum computing is expected to capture the largest share, potentially growing from $4 billion in revenue in 2024 to as much as $72 billion by 2035. This growth is fueled by increasing investments; in 2024, private and public investors poured nearly $2.0 billion into quantum technology startups globally, a 50% increase from the previous year. This surge in funding underscores growing confidence in the measurable value that quantum startups can generate.
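The growth rates implied by these projections can be checked with simple arithmetic. The sketch below uses only the figures quoted above; the function name is illustrative, and because the 2035 values are upper-end ("up to") estimates, the derived numbers should be read as upper bounds rather than forecasts.

```python
def compound_annual_growth_rate(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


# Quantum computing revenue: $4B (2024) to as much as $72B (2035).
qc_cagr = compound_annual_growth_rate(4.0, 72.0, 2035 - 2024)

# Share of the up-to-$97B total that quantum computing would represent.
qc_share_2035 = 72.0 / 97.0

# 2024 startup funding of about $2.0B was a 50% increase on the prior year.
implied_2023_funding = 2.0 / 1.5

print(f"implied quantum computing CAGR: {qc_cagr:.1%}")
print(f"quantum computing share of 2035 revenue: {qc_share_2035:.1%}")
print(f"implied 2023 startup funding: ${implied_2023_funding:.2f}B")
```

In other words, the upper-end projection assumes quantum computing revenue compounds at roughly 30% per year for over a decade, which is a useful sanity check when comparing it against other market estimates.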

The industry is also witnessing the formation of innovation "clusters" globally, bringing together startups, academic institutions, research centers, and investors. Emerging hubs are observed in Asia and the Middle East (e.g., Abu Dhabi, Tel Aviv, Tokyo) and the United States (e.g., Illinois, Maryland). Companies like IBM, Google, Microsoft, and IonQ are leading the charge in quantum research and development, focusing on scalable systems, error correction, and making quantum computing accessible through cloud platforms. There is an anticipated value shift in quantum technology startups, moving from hardware towards software in the next five to ten years.

Societal Implications: Addressing Global Challenges and Mitigating Inequalities

The transformative power of Quantum AI extends to addressing some of humanity's most pressing global challenges, including public health, food security, and climate emergencies. By enabling unprecedented computational capabilities, QAI offers the promise of addressing questions once deemed unsolvable and venturing into uncharted intellectual territories. It invites a future where humanity can not only work more effectively with existing knowledge but also explore realms of inquiry yet to be imagined, constructing answers to problems still being formulated.

However, the acceleration of computational capabilities also profoundly impacts how data is managed, how algorithmic transparency is ensured, and how equitable access to quantum resources is provided. Without careful planning and robust governance, Quantum AI could exacerbate existing technological inequalities, widening the gap between those with the resources to invest in these cutting-edge systems and those who are excluded. Therefore, responsible AI development, addressing issues like bias, transparency, and accountability, is crucial as the technology evolves. The sheer power of QAI necessitates that important concerns are addressed proactively, rather than after widespread deployment.

VIII. Conclusion and Strategic Recommendations

Quantum Artificial Intelligence represents a frontier of computational science, integrating the unique principles of quantum mechanics with advanced AI algorithms to unlock problem-solving capabilities far beyond the reach of classical systems. The field is characterized by a rapid pace of innovation, particularly in the development of more stable logical qubits and the increasing application of AI itself to enhance quantum hardware and error correction. While the promise of QAI is immense, offering transformative potential across drug discovery, materials science, finance, and complex optimization, it remains in a foundational stage, grappling with significant hardware limitations, algorithmic complexities, and a critical shortage of interdisciplinary expertise.

The prevailing trend towards hybrid quantum-classical computing underscores a pragmatic understanding of the technology's current maturity and future trajectory. This integrated approach, where classical systems manage general tasks and quantum processors handle specialized, intractable computations, is crucial for realizing practical applications in the near-to-mid term. The symbiotic relationship between AI and quantum computing, where each field accelerates the other's progress, is a powerful driver of innovation.

To responsibly navigate this evolving landscape and maximize the benefits of Quantum AI, the following strategic recommendations are put forth:

Prioritize Hybrid System Development: Continued investment in hybrid quantum-classical architectures and the development of seamless integration frameworks is essential. This pragmatic approach will enable the leveraging of nascent quantum capabilities for real-world problems while relying on the stability and scalability of classical systems.

Intensify Research in Quantum Error Correction (QEC): Given the inherent fragility of qubits, breakthroughs in QEC are paramount for achieving fault-tolerant quantum computers. Research should focus on AI-driven error mitigation techniques, novel error-correcting codes, and hardware-software co-design to enhance qubit stability and coherence.

Foster Interdisciplinary Talent and Education: Address the expertise gap by investing in educational programs that bridge quantum physics, computer science, and machine learning. Collaborative research initiatives between academia, industry, and government are crucial for building a skilled workforce capable of advancing and deploying QAI solutions.

Invest in Scalable Quantum Algorithms: While hardware progresses, continued emphasis on developing scalable quantum algorithms that demonstrate clear quantum advantage for commercially viable problems is critical. This includes both quantum-accelerated classical algorithms and truly quantum-native solutions that leverage unique quantum phenomena.

Establish Proactive Governance and Ethical Frameworks: Develop ethical guidelines and regulatory frameworks for QAI deployment early in its development cycle. This includes addressing concerns related to equitable access, algorithmic bias, transparency, and potential misuse to ensure that the technology serves humanity broadly and responsibly.

Encourage Industry-Specific Pilot Programs: Support and fund pilot projects in high-impact sectors like pharmaceuticals, finance, and logistics. These early applications will provide valuable insights into practical challenges, refine algorithms, and demonstrate tangible returns on investment, accelerating broader adoption.

By strategically addressing these areas, stakeholders can collectively guide the evolution of Quantum Artificial Intelligence from its foundational stages to a transformative technology that redefines computational possibilities and addresses some of the most complex challenges facing society.

FAQ

What is Quantum Artificial Intelligence (QAI) and how does it differ from classical AI?

Quantum Artificial Intelligence (QAI) is a groundbreaking field that merges quantum computing with artificial intelligence. Unlike classical AI, which runs on binary bits (0s and 1s), QAI leverages the unique principles of quantum mechanics, such as superposition, entanglement, and interference, through quantum bits (qubits). This allows qubits to exist in multiple states simultaneously and become interconnected, leading to an exponential increase in information density and processing potential. This fundamental shift enables QAI to explore vast solution spaces in parallel, potentially solving complex problems that are intractable for classical AI in any practical amount of time.

QAI does not aim to replace classical AI but rather to complement it. Classical AI excels at routine, sequential tasks and general-purpose data management, while QAI is uniquely suited for specialized, computationally challenging problems like complex combinatorial optimization or intricate quantum simulations. The future will likely see a "hybrid imperative," where classical computers manage general tasks and quantum computers handle specific, computationally hard subroutines, creating a seamless and integrated computational landscape.

How do core quantum principles like qubits, superposition, and entanglement give QAI its computational advantage?

The computational power of QAI originates directly from these unique quantum principles:

Qubits: Unlike classical bits, which are either 0 or 1, qubits can exist in a superposition of states, loosely described as being both 0 and 1 simultaneously. The state of an N-qubit system is described by 2^N amplitudes, leading to an exponential increase in information density and processing potential. A measurement returns only one outcome, however, so quantum algorithms must be designed so that interference concentrates probability on useful answers rather than relying on raw parallelism alone.

Superposition: This principle enables QAI models to explore vast solution spaces simultaneously. Instead of checking possibilities one by one, a quantum algorithm can operate on a superposition of all possible inputs and use interference to amplify promising candidates. For suitable problems, this can significantly reduce the time for complex computations, especially in optimization and data analysis.

Entanglement: Entanglement links two or more qubits so that their measurement outcomes are correlated more strongly than any classical system allows, regardless of the distance between them. These correlations cannot be used to send information faster than light, but they let qubits represent joint states that are impossible for classical bits. Combined, superposition and entanglement could substantially cut computation time for certain machine learning, optimization, and data analysis tasks.

This move from sequential, binary logic to parallel exploration of an exponential number of states signifies a paradigm shift, allowing QAI to theoretically explore an entire solution landscape simultaneously, tackling problems beyond classical computational reach.
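The three principles above can be seen in a two-qubit example. The sketch below is a plain NumPy state-vector simulation, not code for real quantum hardware: it puts one qubit into superposition with a Hadamard gate, entangles it with a second qubit using a CNOT gate, and then samples measurements. The helper `statevector_bytes` and the choice of 1,000 samples are illustrative assumptions.

```python
import numpy as np

# Single-qubit Hadamard gate, identity, and two-qubit CNOT (control = first qubit).
H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
I2 = np.eye(2)
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]])

# Start in |00>; basis order is |00>, |01>, |10>, |11>.
state = np.zeros(4)
state[0] = 1.0

state = np.kron(H, I2) @ state   # superposition on the first qubit
state = CNOT @ state             # entangle the two qubits (a Bell state)

probabilities = np.abs(state) ** 2

# Each measurement yields a single basis state, not all of them at once.
rng = np.random.default_rng(seed=0)
samples = rng.choice(4, size=1000, p=probabilities)
labels = ["00", "01", "10", "11"]
counts = {labels[k]: int(np.sum(samples == k)) for k in range(4)}
print("measurement counts:", counts)


def statevector_bytes(n_qubits: int) -> int:
    """Memory to store all 2^N complex amplitudes at 16 bytes each."""
    return 16 * 2 ** n_qubits


print("bytes needed to simulate 30 qubits classically:", statevector_bytes(30))
```

Only the outcomes 00 and 11 ever appear, which is the correlation that entanglement provides, yet each individual run returns just one of them. The memory helper shows the other side of the 2^N scaling: classically storing the full state becomes impractical after a few dozen qubits.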

What are Quantum Machine Learning (QML) and Quantum Neural Networks (QNNs), and how are they developing?

Quantum Machine Learning (QML) is a research area exploring how quantum computing can enhance machine learning, primarily by leveraging qubits and quantum operations to boost computational speed and data storage. QML investigates whether quantum computers can accelerate the training or evaluation of machine learning models. Conversely, machine learning is also being used to aid foundational quantum research, such as discovering new quantum error-correcting codes.

Quantum Neural Networks (QNNs) are a specific class of QML models that integrate concepts from quantum computing and artificial neural networks. Early QNN models tried to directly translate classical neural network structures, but these often faced challenges due to quantum mechanics (e.g., the no-cloning theorem). More recent and successful approaches focus on Variational Quantum Circuits (VQCs). VQCs are pragmatic hybrid models that combine a "Parameterized Quantum Circuit" (an adjustable sequence of quantum gates), a "Cost Function" to measure solution quality, and a "Classical Optimizer" to adjust parameters. Because the circuits are shallow and the classical optimizer can partly compensate for systematic errors, this variational approach is comparatively tolerant of the noise in current "Noisy Intermediate-Scale Quantum" (NISQ) devices, making VQCs candidates for near-term applications like optimization, classification, and quantum chemistry simulations. The shift towards VQCs reflects an adaptation to current hardware limitations, prioritising hybrid quantum-classical optimisation.
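The three VQC ingredients named above can be sketched in a few lines. This is a minimal single-qubit toy simulated with NumPy, assuming a circuit of one RX and one RY rotation, a cost equal to the expectation value of Z, and plain gradient descent as the classical optimizer. The starting angles, learning rate, and step count are arbitrary illustrative choices. Gradients use the parameter-shift rule, which real hardware can evaluate by running the same circuit at shifted angles.

```python
import numpy as np

Z = np.diag([1.0, -1.0])


def rx(theta):
    """Rotation about the X axis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])


def ry(theta):
    """Rotation about the Y axis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)


def cost(params):
    """Parameterized circuit RY(p1) RX(p0) on |0>, scored by the expectation of Z."""
    state = ry(params[1]) @ rx(params[0]) @ np.array([1, 0], dtype=complex)
    return float(np.real(np.conj(state) @ Z @ state))


def parameter_shift_gradient(params):
    """Exact gradient from two extra circuit evaluations per parameter."""
    grad = np.zeros_like(params)
    for k in range(len(params)):
        shift = np.zeros_like(params)
        shift[k] = np.pi / 2
        grad[k] = 0.5 * (cost(params + shift) - cost(params - shift))
    return grad


# Classical optimizer: plain gradient descent on the circuit parameters.
params = np.array([0.4, 0.3])
learning_rate = 0.4
for _ in range(100):
    params = params - learning_rate * parameter_shift_gradient(params)

final_cost = cost(params)
print(f"final parameters {params}, final cost {final_cost:.4f}")
```

The loop drives the cost towards its minimum of -1, which corresponds to flipping the qubit from |0> to |1>. Practical VQCs follow the same pattern with many qubits, entangling gates, and a task-specific cost function.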

What are the most significant recent breakthroughs in Quantum AI hardware and algorithms (2024-2025)?

The period of 2024-2025 has seen crucial advancements in Quantum AI:

Hardware Innovations:

Logical Qubits: A major development is the experimental demonstration of logical qubits (error-corrected qubits) outperforming their underlying physical qubits. For instance, Microsoft and Quantinuum entangled 12 logical qubits with significantly reduced error rates, leading to the first chemistry simulation combining high-performance computing, AI, and quantum computing. This indicates a shift from merely increasing physical qubit count to prioritising qubit stability and quality.

Fault Tolerance: Advances in physical gate fidelity, especially in trapped ion systems, have brought error rates closer to the threshold required for fault tolerance, making useful scientific simulations plausible within the next decade.

Qubit Architectures: Companies like QuEra are launching logical quantum processors based on reconfigurable atom arrays, and breakthroughs in semiconductor nanolasers could impact future optical quantum hardware.

Algorithmic Advancements:

Optimisation: NASA's Quantum Artificial Intelligence Laboratory (QuAIL) has pioneered hybrid quantum-classical optimisation algorithms for complex logistical problems like flight gate assignment and the Maximum Cut Problem.

Simulation: Progress includes optimised quantum simulation algorithms for scalar quantum field theories and compiler optimisations for quantum Hamiltonian simulation.

AI for Quantum Error Correction (QEC): A significant development is the use of AI (e.g., convolutional neural networks, reinforcement learning, generative models) to enhance QEC. AI assists in discovering more efficient error-correcting codes and optimising quantum algorithms, leading to more reliable and longer-running computations. This highlights a powerful "AI for Quantum" symbiotic relationship, where AI improves quantum systems.

These breakthroughs show a maturing field, with a strong focus on quality and practical utility, driven by a self-reinforcing innovation cycle between AI and quantum computing.
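For readers unfamiliar with what an error-correction decoder does, the sketch below simulates the simplest possible case: a bit-flip repetition code decoded by majority vote. This is a deliberately simplified stand-in, not one of the AI decoders or codes referenced above. It assumes independent bit-flip errors only and perfect measurements, and the function names are illustrative. Learned decoders target the same job (inferring the most likely error from noisy readings) on far more complex codes.

```python
import numpy as np


def logical_error_rate(physical_error, distance, shots, rng):
    """Monte Carlo estimate for a repetition code with majority-vote decoding."""
    # Each row is one shot; True marks a physical qubit that suffered a bit flip.
    flips = rng.random((shots, distance)) < physical_error
    # Majority vote decodes wrongly when more than half the qubits flipped.
    decoded_wrong = flips.sum(axis=1) > distance // 2
    return float(decoded_wrong.mean())


def analytic_distance3(physical_error):
    """Exact failure probability at distance 3: two or three flips."""
    p = physical_error
    return 3 * p ** 2 * (1 - p) + p ** 3


rng = np.random.default_rng(seed=1)
p = 0.05
rate_d3 = logical_error_rate(p, 3, 200_000, rng)
rate_d5 = logical_error_rate(p, 5, 200_000, rng)
print(f"physical error rate:         {p}")
print(f"distance-3 logical error:    {rate_d3:.5f} (analytic {analytic_distance3(p):.5f})")
print(f"distance-5 logical error:    {rate_d5:.5f}")
```

When the physical error rate is low enough, adding redundancy pushes the logical error rate below the physical one, which is the same qualitative behavior reported for logical qubits outperforming their physical qubits.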

How will Quantum AI transform industries like drug discovery, finance, and logistics?

Quantum AI holds immense potential to revolutionise numerous industries by solving previously intractable problems:

Drug Discovery and Materials Science: QAI can drastically accelerate drug discovery by simulating molecular interactions at the quantum level with unprecedented accuracy, reducing research time and cost. It can also enable the discovery of new materials with extraordinary properties by precisely modelling atomic and molecular behaviour. Quantum-accelerated simulations are already being used to train better machine learning models for drug discovery.

Financial Modelling and Optimisation: QAI can streamline financial processes by analysing vast market data and numerous variables simultaneously with unprecedented speed and accuracy. This capability improves financial risk assessments, enhances trading strategies, identifies anomalies for fraud detection, and optimises investment portfolios.

Logistics and Supply Chain Optimisation: QAI can provide more efficient and sustainable solutions for complex logistical challenges, such as optimising delivery routes and managing resources across supply chains. This leads to reduced costs and emissions.

Cybersecurity: While quantum computers pose a threat to current encryption, QAI also introduces new security principles through quantum cryptography, such as inherently secure quantum key distribution.

Natural Language Processing (NLP): Quantum-enhanced NLP models could process language structures more efficiently, leading to better chatbots, translators, and advanced AI assistants.

Autonomous Vehicle Testing: QAI could help accelerate road testing for autonomous systems by running the millions of complex simulations related to weather, traffic, and terrain considerably faster, although this has yet to be demonstrated at scale.

These applications demonstrate QAI's potential to address complex real-world problems, offering significant economic and societal benefits.
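The quantum key distribution mentioned under cybersecurity can be illustrated with BB84, one well-known QKD protocol (the text above does not name a specific protocol, so this choice is an assumption). The sketch below is a classical toy model, not a physical simulation: measuring in the wrong basis is modeled as a coin flip, the eavesdropper uses a simple intercept-and-resend attack, and channel noise is ignored. The function name is illustrative.

```python
import numpy as np


def bb84_sift(n_bits, rng, eavesdrop=False):
    """Return Alice's and Bob's sifted keys for one BB84 run."""
    alice_bits = rng.integers(0, 2, n_bits)
    alice_bases = rng.integers(0, 2, n_bits)   # 0 = rectilinear, 1 = diagonal

    bits_in_flight = alice_bits.copy()
    bases_in_flight = alice_bases.copy()

    if eavesdrop:
        # Eve measures in random bases; a wrong basis gives a random result,
        # and she resends the qubit prepared in her own basis.
        eve_bases = rng.integers(0, 2, n_bits)
        wrong = eve_bases != bases_in_flight
        bits_in_flight = np.where(wrong, rng.integers(0, 2, n_bits), bits_in_flight)
        bases_in_flight = eve_bases

    # Bob measures in random bases; a mismatched basis gives a random result.
    bob_bases = rng.integers(0, 2, n_bits)
    mismatch = bob_bases != bases_in_flight
    bob_bits = np.where(mismatch, rng.integers(0, 2, n_bits), bits_in_flight)

    # Sifting: keep only positions where Alice and Bob announced the same basis.
    keep = alice_bases == bob_bases
    return alice_bits[keep], bob_bits[keep]


rng = np.random.default_rng(seed=42)

alice_key, bob_key = bb84_sift(2000, rng)
print("keys match without eavesdropper:", bool(np.array_equal(alice_key, bob_key)))

alice_key_e, bob_key_e = bb84_sift(20000, rng, eavesdrop=True)
error_rate = float(np.mean(alice_key_e != bob_key_e))
print(f"sifted-key error rate with eavesdropper: {error_rate:.3f}")
```

Without an eavesdropper the sifted keys agree exactly, while intercept-and-resend introduces errors in roughly a quarter of the sifted bits. Alice and Bob can detect that disturbance by comparing a sample of their keys, which is the basis of the security claim.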

What are the main challenges hindering the widespread adoption of Quantum AI?

Despite its promise, Quantum AI faces significant hurdles across hardware, software, and human capital:

Hardware Constraints:

Qubit Instability (Decoherence): Qubits are extremely fragile and susceptible to environmental interference, causing them to lose their quantum state quickly. High error rates for basic operations necessitate extensive error correction.

Scalability Challenges: Scaling quantum systems to useful sizes is difficult. Adding more qubits often increases noise and crosstalk, degrading performance. Effective error correction can require thousands of physical qubits for a single stable logical qubit, a requirement that current hardware cannot yet fully meet.

Specialised Environments: Quantum hardware requires highly specialised, costly, and complex environments (e.g., near absolute zero temperatures for superconducting qubits, ultra-high vacuum for trapped ions), making integration with classical infrastructure challenging.

Algorithmic and Software Development Hurdles:

Novel Algorithm Development: Quantum computers require entirely new algorithms to effectively leverage quantum properties. Designing these algorithms for AI tasks demands expertise across quantum physics and machine learning.

Integration with Existing AI Frameworks: Seamlessly integrating quantum computing with established classical AI frameworks requires extensive development of new programming languages, compilers, and development frameworks.

Optimisation Bottlenecks: Developing scalable quantum machine learning algorithms that provide real-world advantages over classical AI remains an active research area.

Expertise Gap: There is a significant shortage of professionals with expertise spanning both quantum computing and artificial intelligence. This skills gap slows down research, development, and commercialisation efforts, highlighting the critical need for interdisciplinary collaboration and education.

What is the expected future trajectory of Quantum AI, and what are its long-term societal implications?

The future of Quantum AI is characterised by rapid innovation, with a strong emphasis on hybrid quantum-classical computing. This approach, where classical computers handle routine tasks and quantum computers tackle specific, computationally intensive subroutines, is seen as the pragmatic path forward, especially as quantum hardware matures. This integrated model ensures efficiency and practicality, facilitating the transition towards more robust, error-corrected quantum computers.

Economically, the quantum technology market, including QAI, is projected to be substantial, with quantum computing potentially generating up to $72 billion in revenue globally by 2035. This growth is fuelled by increasing investments and the formation of innovation "clusters" worldwide. There's an anticipated shift in value from hardware to software within quantum technology startups over the next decade.

Societally, QAI holds the potential to address some of humanity's most pressing challenges, such as public health, food security, and climate emergencies, by enabling unprecedented computational capabilities. However, its immense power also raises critical concerns:

Technological Inequality: The high cost and specialised nature of quantum hardware could exacerbate existing technological inequalities.

Bias and Misuse: If QAI systems are trained on biased data, they may perpetuate and amplify those biases.

Algorithmic Transparency: The added complexity of quantum mechanics could further obscure the interpretability of QAI models, posing challenges for accountability and trust.

Therefore, responsible AI development, including proactive governance frameworks addressing issues like equitable access, bias, transparency, and accountability, is crucial to ensure the technology serves humanity broadly and responsibly.

What strategic recommendations are crucial for advancing Quantum AI responsibly?

To responsibly advance and fully realise the potential of Quantum AI, several strategic recommendations are crucial:

Prioritise Hybrid System Development: Continued investment in hybrid quantum-classical architectures and seamless integration frameworks is essential. This pragmatic approach leverages nascent quantum capabilities for real-world problems while relying on the stability and scalability of classical systems.

Intensify Research in Quantum Error Correction (QEC): Given the inherent fragility of qubits, breakthroughs in QEC are paramount for achieving fault-tolerant quantum computers. Research should focus on AI-driven error mitigation techniques, novel error-correcting codes, and hardware-software co-design.

Foster Interdisciplinary Talent and Education: Address the expertise gap by investing in educational programmes that bridge quantum physics, computer science, and machine learning. Collaborative research initiatives between academia, industry, and government are vital for building a skilled workforce.

Invest in Scalable Quantum Algorithms: Alongside hardware progress, continued emphasis on developing scalable quantum algorithms that demonstrate clear quantum advantage for commercially viable problems is critical, encompassing both quantum-accelerated classical algorithms and truly quantum-native solutions.

Establish Proactive Governance and Ethical Frameworks: Develop ethical guidelines and regulatory frameworks for QAI deployment early in its development cycle. This includes addressing concerns related to equitable access, algorithmic bias, transparency, and potential misuse to ensure the technology serves humanity responsibly.

Encourage Industry-Specific Pilot Programmes: Support pilot projects in high-impact sectors like pharmaceuticals, finance, and logistics. These early applications will provide valuable insights into practical challenges, refine algorithms, and demonstrate tangible returns on investment, accelerating broader adoption.

By addressing these areas, stakeholders can collectively guide the evolution of Quantum AI towards a transformative technology that redefines computational possibilities and addresses complex societal challenges.