3. Understanding Real-Time Data Analytics

Real-time data analytics (RTDA) represents a paradigm shift in how organizations interact with their data. It is defined as the process of capturing, processing, and analyzing data as it is generated, or with extremely minimal latency, typically within seconds or even milliseconds, to provide immediate insights and enable rapid responses. Unlike traditional batch analytics, which primarily focuses on historical data to inform future decisions, RTDA centers its attention on the present, providing insights for day-to-day, hour-to-hour, and minute-to-minute operational decisions.

3.1. Definition and Distinguishing Characteristics

The efficacy of real-time analytics is underscored by its five core facets:

Data Freshness: Data holds its highest value immediately after creation. Real-time systems are engineered to capture data at its peak freshness, meaning as soon as it is generated, with availability often measured in milliseconds.

Low Query Latency: Given that real-time analytics frequently powers user-facing data applications, rapid query response times are imperative. Queries should ideally respond within 50 milliseconds or less to prevent any degradation of the user experience.

High Query Complexity: Despite the demand for low latency, real-time analytics systems are designed to handle complex queries involving filters, aggregates, and joins over vast datasets.

Query Concurrency: Real-time analytics platforms are built to support access by numerous end-users concurrently, capable of managing thousands or even millions of concurrent requests without performance degradation.

Long Data Retention: While prioritizing freshness, real-time analytics also retains historical data for comparison and enrichment. These systems are optimized to access data captured over extended periods, often preferring to store long histories of aggregated data to minimize raw data storage where possible.

Additional key characteristics further distinguish real-time data:

Immediate Availability: The data becomes accessible the moment it is generated.

Continuous Flow: Information is constantly updated, providing an uninterrupted stream of current data.

Time-Sensitive: Its value is intrinsically linked to its recency, making it crucial for decisions that depend on the most up-to-the-minute information.
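Two of the facets above, data freshness and query latency, are directly measurable. The following is a minimal sketch of how they might be checked, not a prescribed method: the `Event` fields, the helper names, the sample latencies, and the nearest-rank percentile are all illustrative assumptions. Only the 50-millisecond budget comes from the text.

```python
import math
from dataclasses import dataclass

QUERY_LATENCY_BUDGET_MS = 50  # the 50 ms target stated in the text


@dataclass
class Event:
    created_at_ms: int    # when the source generated the event (assumed field)
    queryable_at_ms: int  # when the event became visible to queries (assumed field)


def freshness_ms(event: Event) -> int:
    """Delay between an event being generated and being queryable."""
    return event.queryable_at_ms - event.created_at_ms


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def within_latency_budget(latencies_ms, pct=95, budget_ms=QUERY_LATENCY_BUDGET_MS):
    """True if the chosen percentile of query latency stays within the budget."""
    return percentile(latencies_ms, pct) <= budget_ms


event = Event(created_at_ms=1_000, queryable_at_ms=1_040)
latencies_ms = [12, 18, 22, 25, 31, 35, 40, 44, 47, 95]  # made-up sample

print(freshness_ms(event))                  # 40
print(percentile(latencies_ms, 95))         # 95
print(within_latency_budget(latencies_ms))  # False
```

Checking a high percentile rather than the average is a design choice: in this sample the median is well under budget, but a single slow query is enough to degrade the experience for some users.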

3.2. Core Components and Architectural Patterns

A modern data streaming architecture, essential for real-time analytics, is typically conceptualized as a stack of logical layers, each comprising purpose-built components addressing specific requirements.

Data Sources: These are the origin points of data, encompassing sensors, social media feeds, IoT devices, log files from web and mobile applications, e-commerce platforms, or financial systems. A critical distinction in real-time architectures is their preference for pure event-driven approaches. Data is placed onto message queues as soon as it is generated, rather than relying on potentially stale application databases or data warehouses as primary sources. Data from static sources can still be used to enrich event streams.

Event Streaming Platforms: These platforms form the cornerstone of real-time data architectures, providing the necessary infrastructure for ingesting and transporting streaming data. They connect data sources to downstream consumers, ensure high availability, and can offer limited in-flight stream processing capabilities. Apache Kafka is widely recognized as the most popular event streaming platform, known for its fault-tolerance, high-throughput, and low-latency capabilities. Other examples include Confluent Cloud, Redpanda, Google Pub/Sub, and Amazon Kinesis.

Stream Processing Engines (and Streaming Analytics): These engines are responsible for applying transformations to data as it flows, processing data over bounded time windows for near-real-time analysis and transformation. They differ from real-time databases in their focus on in-flight processing rather than persistent long-term storage. Notable examples include Apache Flink, Apache Spark Structured Streaming, Kafka Streams, AWS Lambda, Amazon EMR, and AWS Glue.

Real-time Databases: This is a specialized class of databases optimized specifically for real-time data processing, in contrast to traditional batch processing. They are exceptionally efficient at storing time series data, making them ideal for systems generating timestamped events. Unlike stream processing engines, real-time databases can retain long histories of raw or aggregated data that can be utilized in real-time analysis. Examples include ClickHouse, Apache Pinot, and Apache Druid.

Real-time APIs: A more recent development in event streaming, APIs serve as a popular method to expose real-time data pipelines to downstream consumers who are building user-facing applications and real-time dashboards. They represent a general real-time analytics publication layer, allowing data teams to build interfaces that developers can use to serve real-time analytics in various data formats.

Downstream Data Consumers: These are the ultimate recipients and users of real-time streaming data. This category encompasses business intelligence tools, real-time data visualizations, machine learning models, user-facing applications, and real-time operations and automation systems.
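The layers described above can be illustrated with a small in-process sketch. It is a toy under stated assumptions, not a reference architecture: a `deque` stands in for the event streaming platform, a plain list stands in for the real-time database, and the catalog, field names, and function names are all hypothetical.

```python
from collections import defaultdict, deque

# Static reference data, used only to enrich events (hypothetical catalog).
PRODUCT_CATALOG = {"sku-1": "beverages", "sku-2": "snacks"}

event_queue = deque()  # stands in for an event streaming platform
realtime_store = []    # stands in for a real-time database


def emit(event: dict) -> None:
    """Data source: publish an event as soon as it is generated."""
    event_queue.append(event)


def process_pending() -> int:
    """Stream processor: enrich each queued event in flight, then persist it."""
    processed = 0
    while event_queue:
        event = event_queue.popleft()
        category = PRODUCT_CATALOG.get(event["sku"], "unknown")
        realtime_store.append({**event, "category": category})
        processed += 1
    return processed


def units_by_category(since_ts: int) -> dict:
    """API layer: an aggregate query a dashboard or application could call."""
    totals = defaultdict(int)
    for row in realtime_store:
        if row["ts"] >= since_ts:
            totals[row["category"]] += row["qty"]
    return dict(totals)


emit({"ts": 100, "sku": "sku-1", "qty": 2})
emit({"ts": 105, "sku": "sku-2", "qty": 1})
emit({"ts": 110, "sku": "sku-1", "qty": 3})
process_pending()

print(units_by_category(since_ts=105))  # {'snacks': 1, 'beverages': 3}
```

In a production system each function would be a separate service connected by a durable broker; the point of the sketch is only the direction of flow, from source to queue to processor to store to query.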

The adoption of real-time analytics necessitates a profound architectural shift from traditional batch processing to an event-driven paradigm. Traditional data analytics is fundamentally built upon batch processing, where data is collected over time and processed periodically, inherently introducing delays. Real-time analytics, however, demands a re-imagining of data flow. The emphasis on "pure event-driven architectures" that place "event data streams onto message queues as soon as the data is generated" signifies a departure from relying on traditional data warehousing as the primary source for immediate insights. Instead, data sources are designed to immediately emit events, which are then ingested and processed continuously. This decoupling of services is critical for achieving the low latency and high concurrency required for real-time operations, even though it introduces considerations such as eventual consistency. This transformation is not merely a technical upgrade; it represents a fundamental re-conceptualization of how data flows and is utilized within an enterprise.

Furthermore, a key observation is the concept of data's "peak value" and its economic implications. Real-time data analytics explicitly captures insights from data at its peak value: immediately after it is generated. This implies that data possesses a time-decaying value; the longer data remains unprocessed, the less relevant and actionable its insights become for immediate operational decisions. This concept highlights an economic imperative: to maximize the utility and return on investment (ROI) from data, businesses must act on it before its relevance diminishes. This extends beyond mere operational efficiency; it is about transforming data from a historical record into a dynamic, actionable asset that drives immediate business outcomes and provides a significant competitive advantage. The speed of insight generation directly correlates with the ability to "seize critical moments" and capitalize on opportunities before competitors, thereby unlocking the full economic potential of data.

4. Synergizing Real-Time Data Analytics with Demand Prediction

The true power of real-time data analytics in demand prediction emerges from its synergistic integration with advanced analytical techniques, particularly Artificial Intelligence (AI) and Machine Learning (ML), underpinned by robust event-driven architectures.

4.1. How Real-Time Data Streams Feed into Predictive Models

Streaming analytics plays a crucial role by continuously capturing, processing, and analyzing data as it is being generated. This allows organizations to gain immediate insights and anticipate future outcomes with unprecedented speed. Machine learning models deployed in streaming pipelines can accept these continuous data streams and produce continuous outputs. This enables them to factor in up-to-date information, such as real-time inflation rates or consumer price indices, directly into their predictions. This continuous flow of fresh data facilitates a capability known as "demand sensing," where businesses can detect and respond to shifts in customer behavior or market conditions as they happen, rather than days or weeks later, which is typical of traditional batch processing. Real-time sales data, for instance, transforms how demand is predicted by instantly analyzing purchasing patterns, allowing for the identification of subtle trends that traditional, delayed methods would inevitably miss.
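To make the idea of a forecast that updates with every incoming observation concrete, here is a minimal sketch that uses simple exponential smoothing as a stand-in for a production model. The class name, the smoothing factor `alpha`, and the sample stream are assumptions chosen for illustration; the text does not prescribe this technique.

```python
class OnlineDemandForecaster:
    """Keeps a running demand estimate that is revised on every new observation."""

    def __init__(self, alpha: float = 0.5):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha  # weight given to the newest observation
        self.level = None   # current demand estimate

    def update(self, observed_units: float) -> float:
        """Blend the newest observation into the estimate and return it."""
        if self.level is None:
            self.level = float(observed_units)
        else:
            self.level = self.alpha * observed_units + (1 - self.alpha) * self.level
        return self.level


forecaster = OnlineDemandForecaster(alpha=0.5)
stream = [100, 100, 140, 160]  # hypothetical units sold per interval
forecasts = [forecaster.update(units) for units in stream]

print(forecasts)  # [100.0, 100.0, 120.0, 140.0]
```

The estimate starts moving toward the new demand level as soon as the first higher observation arrives, which is the behavior the term "demand sensing" describes. A batch process would only see the shift at its next scheduled run.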

4.2. The Pivotal Role of AI and Machine Learning (ML)

AI and ML are central to unlocking the full potential of real-time demand forecasting. AI can rapidly process vast amounts of data, identify hidden patterns, and dynamically adjust forecasts in real-time based on new incoming data, significantly enhancing prediction reliability. Machine learning models are capable of analyzing extensive historical and real-time datasets, encompassing past sales figures, customer behavior patterns, prevailing market conditions, seasonality, broader market trends, external influences (such as economic indicators or weather patterns), and the impact of sales promotions. These models continuously refine their accuracy as they process more data over time.

Neural networks, the foundation of deep learning, are particularly effective in this domain. Loosely inspired by the way the human brain processes information, they can identify complex relationships in large datasets that traditional methods cannot detect. These models process multiple factors simultaneously and refine forecasts through multiple layers of interconnected nodes, crucially incorporating real-time data updates to maintain accuracy in rapidly changing markets. AI-driven predictive analytics profoundly enhances demand forecasting accuracy, empowering firms to optimize stock levels and mitigate the risks of both stockouts and overstocking. This advanced capability can reduce forecasting errors by a substantial margin, ranging from 20% to 50%.

4.3. Event-Driven Architectures for Continuous Data Ingestion and Processing

Event-driven architectures (EDA) are foundational to enabling continuous data ingestion and processing for real-time demand prediction. EDAs efficiently support the production and consumption of events, which represent state changes within a system. This facilitates the creation of flexible connections between disparate systems and enables near real-time updates across an enterprise. A key characteristic of EDA is the decoupling of publishers and subscribers: publishers simply announce that an event has occurred (e.g., "item sold"), and other services react accordingly (e.g., the stock service reduces inventory). This architectural pattern is crucial for real-time data ingestion and transportation, ensuring that data is placed onto message queues as soon as it is generated. Furthermore, EDAs facilitate asynchronous and parallel processing, which is essential for handling resource-intensive jobs and the high volumes of data characteristic of real-time environments.
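The publisher/subscriber decoupling described above can be sketched with a tiny in-process event bus. This is an illustration only: the `EventBus` class, the handler names, and the event shape are hypothetical, and handlers run synchronously here, whereas a real EDA would deliver events asynchronously through a message broker.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Routes published events to whichever handlers subscribed to that event type."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # The publisher does not know who is listening, or whether anyone is.
        for handler in self._subscribers[event_type]:
            handler(payload)


inventory = {"sku-1": 10}
sales_log = []


def reduce_stock(event: dict) -> None:
    """Stock service: react to a sale by reducing inventory."""
    inventory[event["sku"]] -= event["qty"]


def record_sale(event: dict) -> None:
    """Analytics service: keep the sale for demand forecasting."""
    sales_log.append(event)


bus = EventBus()
bus.subscribe("item_sold", reduce_stock)
bus.subscribe("item_sold", record_sale)
bus.publish("item_sold", {"sku": "sku-1", "qty": 3})

print(inventory)       # {'sku-1': 7}
print(len(sales_log))  # 1
```

Adding a third consumer, such as a replenishment service, requires only another `subscribe` call; the code that publishes the sale does not change.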

4.4. Mechanisms for Real-Time Model Deployment, Updates, and Continuous Learning

Achieving real-time demand prediction requires sophisticated mechanisms for model deployment, continuous updates, and ongoing learning.

Real-time Prediction Deployment: Upon the completion of the training pipeline, predictive models are deployed using specialized hosting services, such as Amazon SageMaker hosting services. This process establishes inference endpoints that enable real-time predictions. These endpoints facilitate seamless integration with various applications and systems, providing on-demand access to the model's predictive capabilities through secure interfaces.

Model Updates and Continuous Learning: Machine learning models can be retrained or incrementally updated so that they adapt to changing conditions and improve over time by learning from incoming data. This allows them to adjust to new trends, disruptions, or seasonality. Streaming analytics empowers data scientists to monitor model performance and analyze results in real-time, enabling the instant application of corrective measures when deviations are detected. Orchestration tools, such as Amazon SageMaker Pipelines, manage the entire workflow from fine-tuning models to their deployment. These pipelines enable the simultaneous execution of multiple experiment iterations, the monitoring and visualization of performance, and the invocation of downstream workflows for further analysis or model selection. Hyperparameter tuning, often integrated into these pipelines, automatically identifies the optimal version of a model by running multiple training jobs in parallel with various methods and predefined hyperparameter ranges. Model registries, such as SageMaker Model Registry, play a critical role in managing production-ready models, organizing model versions, capturing essential metadata, and governing their approval status. This ensures proper versioning and management for future updates and deployments. The broader concept of "online learning" and continuously adapting models allows AI systems to evolve with new data, progressively improving their accuracy and relevance over time.
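The monitoring step described above, watching model performance in real time and acting when deviations appear, might look like the following sketch. It does not use any SageMaker API. The `ForecastMonitor` class, the rolling window size, the 20% error threshold, and the use of mean absolute percentage error are all assumptions made for illustration.

```python
from collections import deque


class ForecastMonitor:
    """Tracks rolling forecast error and flags when retraining looks advisable."""

    def __init__(self, window: int = 3, max_mape: float = 0.2):
        self.errors = deque(maxlen=window)  # most recent absolute percentage errors
        self.max_mape = max_mape            # tolerated rolling error (assumed threshold)

    def rolling_mape(self) -> float:
        """Mean absolute percentage error over the current window."""
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def record(self, forecast: float, actual: float) -> bool:
        """Record one forecast/actual pair; return True if retraining is advised."""
        if actual != 0:  # percentage error is undefined for zero demand
            self.errors.append(abs(forecast - actual) / abs(actual))
        window_full = len(self.errors) == self.errors.maxlen
        return window_full and self.rolling_mape() > self.max_mape


monitor = ForecastMonitor(window=3, max_mape=0.2)
# Hypothetical stream: the model keeps predicting 100 while real demand drifts upward.
pairs = [(100, 100), (100, 110), (100, 150), (100, 160)]
flags = [monitor.record(forecast, actual) for forecast, actual in pairs]

print(flags)  # [False, False, False, True]
```

In a full pipeline, a `True` flag would be the trigger for a retraining run and for registering a new model version. Here it is only a boolean, so the sketch stays independent of any particular orchestration tool.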

The integration of real-time data and AI/ML capabilities forms a symbiotic relationship. Real-time data provides the essential fuel for AI/ML models to achieve their full potential in forecasting. Without continuous, fresh data streams, AI/ML models would be trained on stale information, significantly limiting their responsiveness and accuracy. Conversely, without AI/ML, the sheer volume and velocity of real-time data would overwhelm human analytical capabilities, making immediate insights impossible. This creates a mutually reinforcing relationship where each component amplifies the other's capabilities, leading to "unmatched accuracy and efficiency" and "setting new standards for precision" in predictions. The ability of AI to "spot hidden patterns" and "continuously improve as they process more data" is directly contingent on a continuous, fresh data supply.

This powerful integration also signifies a fundamental shift from static prediction to dynamic adaptation. Traditional forecasting methods typically provide a relatively static prediction based on historical trends. However, the combination of real-time data with AI/ML transforms this into a dynamic, continuously adapting process. Models are no longer merely predicting a future state; they are actively "sensing" and "responding" to immediate shifts in market conditions and customer behavior. The continuous feedback loop, facilitated by automated data ingestion, model re-evaluation through pipelines, and seamless deployment, means the forecast itself is a living, evolving entity. This has profound implications for business agility, enabling organizations to "react faster to market changes and customer demands" and implement "proactive strategies", moving beyond simple prediction to intelligent, automated adaptation and continuous optimization.

5. Strategic Benefits and Advantages of Real-Time Demand Prediction

The adoption of real-time data analytics for demand prediction offers a multitude of strategic benefits that extend across various facets of a business, fostering enhanced decision-making, operational efficiency, and customer satisfaction.

5.1. Enhanced Decision-Making and Competitive Advantage

Access to up-to-the-minute information empowers businesses to make better and faster decisions, which is a critical factor for maintaining and gaining a competitive edge. Real-time insights enable organizations to stay ahead of market trends and changes, providing a significant advantage over competitors. This capability facilitates informed decision-making, mitigates risks, and allows for the efficient allocation of resources by anticipating future outcomes. Furthermore, it enables more dynamic and intelligent sourcing decisions within the supply chain, optimizing procurement strategies.

5.2. Optimized Inventory Management and Reduced Stockouts/Overstocking

Real-time demand prediction is instrumental in empowering businesses to maintain optimal inventory levels, thereby significantly minimizing the risk of stockouts (which lead to lost sales and customer dissatisfaction) or excess inventory (which incurs storage costs, waste, and potential total loss of investment). Real-time inventory tracking, leveraging technologies such as RFID, IoT devices, and barcoding, provides instant updates on item location and condition, drastically reducing errors and inefficiencies in stock management. Prominent organizations like Walmart utilize real-time data to power their inventory and replenishment systems, processing massive volumes of events daily to proactively prevent stockouts across their vast operations.

5.3. Improved Resource Allocation and Production Planning

Real-time demand insights provide valuable foresight into future demand trends, enabling businesses to allocate critical resources (such as manpower, machinery, and capital) in a manner that maximizes productivity and minimizes costs. This capability optimizes production schedules, minimizes lead times, and streamlines overall supply chain operations, ensuring the timely delivery of products to customers. For manufacturers, it allows for the precise adaptation of production capacities to fluctuating demand and accurate planning of raw material orders, which directly contributes to reduced production costs and increased efficiency.

5.4. Increased Operational Efficiency and Cost Savings

The ability to process and analyze data in real-time helps teams promptly identify and address operational issues, leading to reduced downtime and improved overall operational efficiency. Real-time analytics can also automate various tasks and processes, resulting in significant time and cost savings. AI-driven supply chain forecasting, for example, has been shown to reduce forecasting errors by 20% to 50%, which directly translates into lower costs, greater agility, and faster response times across the supply chain. In logistics, dynamic route optimization, powered by real-time data, reduces fuel consumption and ensures timely deliveries. Furthermore, it minimizes overproduction, excessive inventory, and emergency procurement costs, freeing up valuable resources that can be reinvested into growth initiatives.

5.5. Elevated Customer Satisfaction and Loyalty

By accurately predicting demand and ensuring product availability, businesses can significantly enhance customer satisfaction and foster long-term loyalty. This proactive approach prevents the frustration customers experience due to stockouts or delivery delays. Real-time data also enables businesses to deliver more personalized and timely responses to individual customer needs and behaviors. Real-time shipment tracking, for instance, allows for immediate updates on package location, building trust and ensuring a positive customer experience. Companies like AO.com have demonstrated that hyper-personalized shopping experiences, enabled by real-time event streaming, can lead to substantial increases in customer conversion rates (e.g., 30%).

The benefits of real-time demand prediction are not isolated but form a synergistic cycle that creates a holistic value proposition for the business. For example, optimized inventory management directly leads to increased operational efficiency and cost savings by reducing waste and storage expenses. This, in turn, contributes to enhanced customer satisfaction by ensuring product availability. Improved customer experience can then lead to a stronger competitive advantage and ultimately increased profits. This interconnectedness indicates that implementing real-time demand prediction is not merely about solving a single operational problem, such as inventory, but about driving compounding positive effects across the entire business value chain, leading to a more robust and profitable enterprise.

Beyond optimizing for growth and efficiency, real-time demand prediction emerges as a powerful strategy for risk mitigation in dynamic environments. The capabilities enable organizations to reduce the impact of uncertainties and improve resilience against supply chain disruptions. It facilitates the identification and addressing of disruptions before they escalate and allows for the anticipation of potential equipment failures. This suggests that in today's volatile global landscape, real-time demand prediction is transitioning from a "nice-to-have" to a fundamental requirement for business continuity and resilience. It empowers organizations to implement proactive strategies against unforeseen events, transforming potential crises into manageable challenges, thereby safeguarding revenue, reputation, and operational stability.

6. Key Technologies, Platforms, and Analytical Models

The successful implementation of real-time demand prediction relies on a sophisticated ecosystem of technologies, platforms, and analytical models, each contributing to the rapid ingestion, processing, analysis, and deployment of predictive insights.

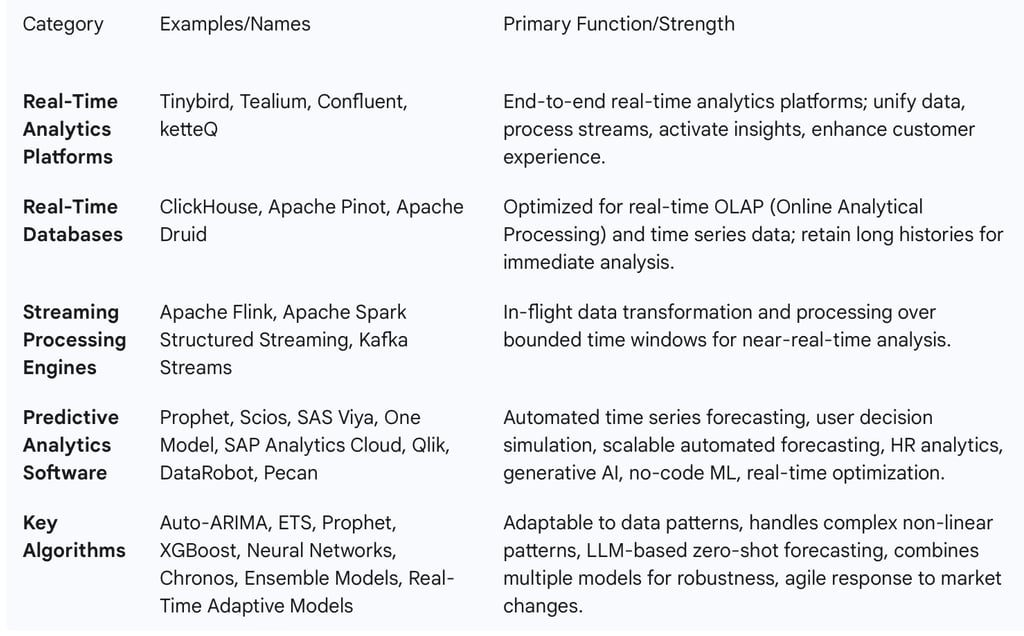

6.1. Overview of Leading Real-Time Analytics Platforms and Tools

A variety of tools and platforms facilitate the entire data supply chain, from real-time collection and processing to analysis and activation.

6.2. Discussion of Specific Algorithms for Real-Time Time Series Forecasting

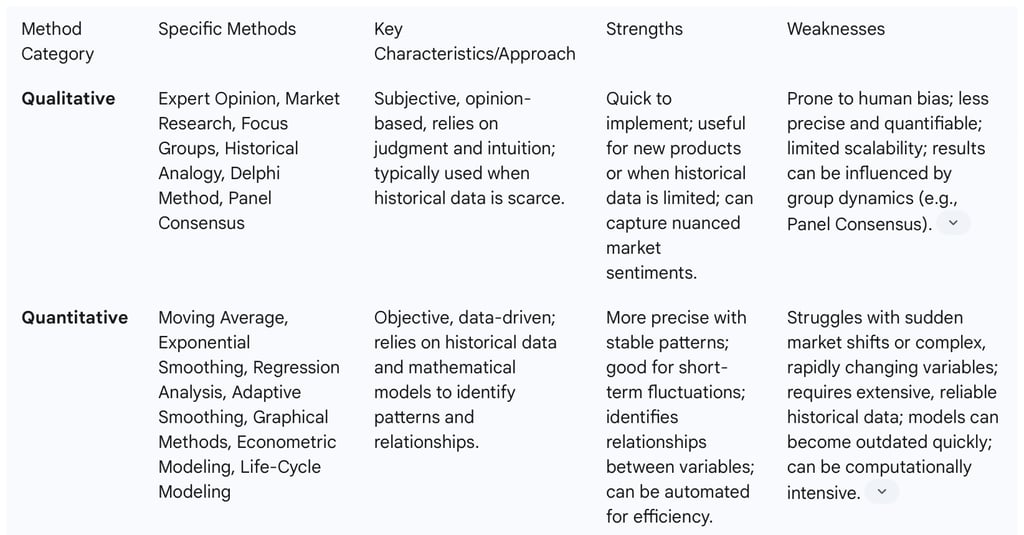

The evolution of demand forecasting has moved from simple statistical methods to sophisticated deep neural networks and real-time adaptive AI systems.

Traditional Algorithms (adapted for real-time context): While foundational, methods like Moving Averages, Exponential Smoothing, and Regression Analysis can still be integrated into modern real-time systems, often as part of more complex ensembles or as baseline models.

Time Series Specific Algorithms (often with real-time updates):

Auto-ARIMA: Automatically selects the order of an ARIMA model. It suits time series with stable, regular patterns; trends are handled through differencing, and seasonal fluctuations through the seasonal variant of the model.

ETS (Error, Trend, Seasonality): A versatile algorithm that adapts to the shape of historical demand data, continuously updating its states (level, trend, and seasonality) as new data points arrive.

Prophet: Specifically designed for forecasting time series data with strong seasonal effects and trends, commonly used for daily, weekly, and yearly patterns.

Machine Learning and Deep Learning Models:

XGBoost: A powerful gradient boosting framework that fits decision trees to residuals, employing regularization techniques to prevent overfitting. It is capable of processing vast amounts of historical and real-time data for accurate predictions.

Neural Networks and Deep Learning Models: These models possess powerful capabilities to capture complex non-linear patterns within large volumes of data. They effectively model long-term dependencies and seasonality in time series data using various architectures such as LSTM (Long Short-Term Memory), CNN (Convolutional Neural Network), and Transformer models. A key differentiator is their ability to process unstructured data.

Chronos: A cutting-edge family of time series models that leverages large language model (LLM) architectures. Pre-trained on large and diverse datasets, Chronos can generalize forecasting capabilities across multiple domains, excelling at "zero-shot forecasts" by treating time series data as a language to be modeled.

Ensemble Models: These models combine the results of multiple individual models (e.g., combining ARIMA and Prophet) to synthesize opinions and achieve a more robust and accurate final prediction.

Real-Time Adaptive Models: Specifically designed to respond agilely to rapid changes in the market environment. These models collect real-time data from IoT sensors, mobile apps, and POS systems, utilizing distributed processing architectures that connect edge computing with the cloud.
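As a small illustration of the ensemble idea in the list above, the sketch below combines two simple baselines with a weighted average. The text mentions combining ARIMA and Prophet; a moving average and a seasonal-naive forecast are swapped in here purely to keep the example dependency-free. The function names, weights, season length, and sample history are all assumptions.

```python
def moving_average_forecast(history, window=4):
    """Baseline 1: mean of the most recent `window` observations."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def seasonal_naive_forecast(history, season_length=4):
    """Baseline 2: repeat the value observed one full season ago."""
    return history[-season_length]


def ensemble_forecast(history, weights=(0.5, 0.5)):
    """Weighted average of the two baseline forecasts for the next period."""
    forecasts = (
        moving_average_forecast(history),
        seasonal_naive_forecast(history),
    )
    return sum(w * f for w, f in zip(weights, forecasts)) / sum(weights)


# Hypothetical demand with a repeating four-period pattern.
history = [80, 100, 120, 100, 90, 110, 130, 110]

print(moving_average_forecast(history))  # 110.0
print(seasonal_naive_forecast(history))  # 90
print(ensemble_forecast(history))        # 100.0
```

The moving average smooths over the seasonal dip, while the seasonal-naive model anticipates it but ignores the recent rise in level. Averaging the two is a simple way to hedge between those failure modes. In practice, weights are usually tuned on held-out forecast error.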

6.3. Role of Real-Time Databases and Streaming Processing Engines

Real-time Databases: These are crucial for storing time series data and are optimized for real-time processing. They enable the retention of long histories of raw or aggregated data, which can then be utilized in real-time analysis. Examples include ClickHouse, Apache Pinot, and Apache Druid.

Streaming Processing Engines: Essential for applying transformations to data in flight, these engines process data over bounded time windows for near-real-time analysis and transformation. They are distinct from real-time databases in their primary focus on in-flight data manipulation rather than long-term persistence. Examples include Apache Flink and Apache Spark Structured Streaming.
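The division of labor between the two components can be sketched as follows: the stream processor reduces raw events to per-window totals, and those compact aggregates are what a real-time database would retain over long periods. This is a simplified illustration with hypothetical names and data. It processes a finished list rather than an unbounded stream, and it ignores late or out-of-order events, which real engines such as Apache Flink handle explicitly.

```python
from collections import defaultdict


def tumbling_window_totals(events, window_seconds=60):
    """Sum quantity per (window start, sku) over fixed, non-overlapping windows."""
    totals = defaultdict(int)
    for ts, sku, qty in events:
        window_start = ts - (ts % window_seconds)  # align timestamp to its window
        totals[(window_start, sku)] += qty
    return dict(totals)


# Hypothetical sales events: (timestamp in seconds, sku, quantity)
events = [
    (0, "sku-1", 2),
    (30, "sku-1", 1),
    (59, "sku-2", 4),
    (60, "sku-1", 5),
    (125, "sku-1", 1),
]

rollups = tumbling_window_totals(events)
print(rollups)
# {(0, 'sku-1'): 3, (0, 'sku-2'): 4, (60, 'sku-1'): 5, (120, 'sku-1'): 1}
```

Five raw events collapse into four rows here. At production volumes the reduction is far larger, which is why storing long histories of aggregates rather than raw events keeps retention affordable.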

The proliferation of specialized platforms like Tinybird, Tealium, and ketteQ, alongside open-source tools such as Prophet, and comprehensive cloud-native services like Amazon SageMaker and Azure ML, indicates a clear trend towards making advanced real-time forecasting more accessible. Historically, sophisticated forecasting capabilities often required extensive in-house data science expertise and custom development efforts. However, the emergence of "no-code utility" features (as seen in Qlik) and "automated machine learning (AutoML)" (offered by DataRobot) as common functionalities signifies a democratization of predictive analytics. This development lowers the barrier to entry, enabling businesses without large, specialized data science teams to leverage powerful real-time forecasting capabilities, thereby broadening its adoption across various industries and company sizes.

Effective real-time demand prediction is not merely about selecting individual algorithms or platforms, but about their seamless integration into a cohesive, end-to-end system. For instance, Amazon SageMaker Pipelines orchestrates the entire lifecycle from data generation and model fine-tuning to hyperparameter search, model registration, and deployment. This highlights a critical convergence where AI/ML models are deeply intertwined with the real-time data ingestion, processing, and deployment infrastructure. The implication is a shift from disparate tools to integrated solutions that manage the complete lifecycle of real-time predictive models, from initial data capture to actionable insights and continuous improvement. This holistic approach is essential for achieving scalability, reliability, and sustained accuracy in highly dynamic business environments.

Table 2: Key Technologies and Analytical Models for Real-Time Demand Prediction