Schema & Data Versioning for Resilient Data Ecosystems

Managing this continuous flux effectively is paramount for ensuring data integrity, enabling robust analytics, and supporting advanced applications like machine learning. The strategic implementation of schema and data versioning provides the necessary framework to navigate these complexities, offering control, traceability, and reliability across diverse data landscapes.

Modern data environments are characterized by their dynamic nature, with data structures and content constantly evolving to meet new business demands. Managing this continuous flux effectively is paramount for ensuring data integrity, enabling robust analytics, and supporting advanced applications like machine learning. The strategic implementation of schema and data versioning provides the necessary framework to navigate these complexities, offering control, traceability, and reliability across diverse data landscapes.

1.1 Defining Schema Versioning and Data Versioning

At its core, schema versioning refers to the systematic process of tracking and managing changes to the structural blueprint, or schema, of a dataset or document over time. This practice is particularly relevant in flexible data models, such as those found in NoSQL databases like Azure Cosmos DB. Here, schema changes can be tracked by embedding a SchemaVersion field directly within the document. If this field is absent, it is typically assumed to represent the original schema version. This approach allows NoSQL databases to adapt alongside evolving applications, minimizing disruptions and safeguarding data integrity, especially when different teams are responsible for data modeling and application development.

Complementing schema versioning is data versioning, which encompasses the comprehensive process of maintaining and managing distinct versions of entire datasets as they change over time. This systematic approach involves several key components: capturing snapshots of datasets at various points, meticulously tracking the alterations between these versions, maintaining rich metadata that describes each iteration, and providing robust mechanisms to access and compare these different historical states. It is important to distinguish data versioning from simple data backups; while backups are primarily intended for disaster recovery, data versioning focuses on preserving a detailed history of changes to ensure data integrity, facilitate reproducibility, and enable deeper analytical insights within today's rapidly evolving and complex data environments.

1.2 The Imperative for Versioning and Evolution in Modern Data Ecosystems

The necessity for robust versioning and schema evolution in contemporary data architectures stems directly from the inherent dynamism of modern applications and business requirements. Data structures are rarely static; they must continuously adapt to new features, increased user demand, and evolving analytical needs. Schema evolution provides the means for organizations to accommodate these changes without causing widespread disruption to existing data pipelines or applications.

A significant advantage of versioning is the enhanced agility and seamless introduction of changes it affords. As applications evolve, schema modifications can be introduced smoothly, fostering an environment of continuous adaptation rather than disruptive overhauls. This agility is closely tied to the preservation of backward compatibility, which ensures that applications can gracefully handle and function with data conforming to both older and newer schema formats during transitional periods. This means that consumers of data can continue to process existing information even as producers begin to generate data with updated structures.

Beyond mere adaptability, versioning is a cornerstone for enhanced data integrity and reliability. By maintaining a clear and immutable historical record of all changes, versioning ensures the accuracy and consistency of data over time. It provides a structured methodology for managing adaptations, guaranteeing that older data versions remain comprehensible and usable, which is particularly crucial in domains like sustainability where data is constantly refined and expanded.

The ability to reliably recreate past states of data and schema is fundamental for reproducibility and traceability. This capability is indispensable for easily reproducing analyses, reports, and especially machine learning experiments by referencing the exact dataset versions used at a specific point in time. This is not merely a convenience; it is paramount for debugging issues, conducting thorough audits, and building upon previous findings in a scientifically rigorous manner. For instance, if a machine learning model's performance degrades, the ability to revert to the precise data used for its training enables effective debugging and performance tracking. This capability elevates data versioning beyond a simple data management task to a strategic enabler for data-driven innovation. Organizations aiming for reliable AI/ML deployments, comprehensive data observability, and strong data governance must view robust data versioning as a foundational investment, not just a technical detail. It directly impacts the ability to derive confident insights and build resilient data products.

Furthermore, versioning facilitates efficient troubleshooting by allowing rapid identification of when and where specific data or schema changes occurred, thereby streamlining the debugging process. It also actively supports experimentation, making A/B testing and other iterative approaches more feasible by enabling easy comparison between different dataset versions. For regulated industries, maintaining compliance and auditability is non-negotiable, and versioning provides the critical audit trails required to meet stringent regulatory standards and ensure robust data governance. Ultimately, these benefits collectively lead to more reliable analyses and, consequently, improved decision-making across the organization.

In environments often described as "schema-less," such as NoSQL databases, the need for schema versioning might initially appear contradictory. However, this apparent paradox highlights a crucial aspect: "schema-less" does not imply the absence of a schema, but rather the flexibility in its enforcement at the database layer. This flexibility, while beneficial for agile development, inherently introduces the risk of data inconsistency if not carefully managed. Therefore, explicit schema versioning becomes a critical design pattern that brings order and integrity to this flexibility. The paradox is resolved by understanding that flexibility demands a structured approach to versioning to prevent chaotic data states. This underscores that even in the most fluid data environments, a structured approach to schema management is not an optional add-on but a fundamental requirement for long-term maintainability, interoperability, and ensuring data quality for consumption. While the database might not enforce a rigid schema, the application and data consumers still rely on a logical schema, which must be versioned.

1.3 Key Challenges in Managing Schema and Data Evolution

Despite its undeniable benefits, managing schema and data evolution presents a complex set of challenges that organizations must address strategically. One of the most significant concerns is the risk to data integrity and consistency. Unsynchronized schema changes can lead to data misinterpretation, loss, or even corruption, particularly if data types are altered without proper coordination across systems. This can manifest as application downtime and incompatibility, where applications relying on outdated schema versions may fail, resulting in service interruptions. Data consumers, unaware or unprepared for schema modifications, can experience system errors, application crashes, or incorrect data processing.

These issues contribute to increased maintenance costs and complexity. Identifying and rectifying problems caused by unmanaged schema changes, often referred to as "schema drift," can be time-consuming and expensive, frequently necessitating manual intervention or intricate troubleshooting. The complexity is further compounded by the need to manage multiple data versions, their associated metadata, and the intricate dependencies between data and applications, which can significantly overburden existing infrastructure.

A lack of predictability and standardization in schema changes is another major hurdle. Without robust versioning practices, schema modifications can occur in an ad-hoc, inconsistent manner. This unpredictability jeopardizes data quality and compatibility, especially when logical constraints are not uniformly applied across disparate teams or systems. This highlights a critical distinction for data professionals: the goal should be to shift from a reactive stance against "schema drift" to a proactive, controlled approach of "schema evolution." Schema drift is largely a symptom of inadequate or absent schema evolution strategies, where unexpected changes lead to negative consequences. Implementing systematic tools and processes for schema evolution is essential to mitigate the risks and costs associated with uncontrolled changes, ensuring data quality and operational stability.

The absence of a clear historical record makes difficulty in rollbacks a prominent challenge. If a schema change introduces issues, reverting to a previous stable state becomes arduous, particularly if data has undergone irreversible transformations. As organizations scale, the scaling challenges associated with managing schema evolution become exponentially more complex and risky. The sheer volume of organizational data and the increasing number of interconnected services amplify the potential for breaking existing functionalities with any change.

Furthermore, in highly regulated industries, unmanaged schema changes can inadvertently lead to compliance violations, potentially resulting in substantial fines or security breaches. From a resource perspective, storage limitations become a concern for large datasets, as maintaining multiple versions can drastically increase storage requirements, necessitating careful planning for data retention policies. Finally, team collaboration overhead is significant, as managing data and configuration changes, in addition to traditional code changes, adds layers of complexity for multiple individuals collaborating on the same project. This also extends to pipeline integration complexity, where incorporating data versioning into existing data pipelines, especially Extract, Transform, Load (ETL) processes, demands meticulous planning to ensure seamless data flows and consistent version tracking throughout the data lifecycle.

2. Data Lake Table Formats: Delta Lake

Delta Lake represents a significant advancement in data lake architecture, transforming raw object storage into a reliable and performant foundation for analytical workloads. It provides a robust layer that addresses many of the challenges inherent in large-scale data management, particularly around schema and data versioning.

2.1 Architectural Overview and Core Principles (ACID Transactions, Transaction Log)

Delta Lake is an open-source storage layer that forms the bedrock for tables within a lakehouse architecture, especially prominent on Databricks platforms. It extends standard Parquet data files by incorporating a file-based transaction log, which is fundamental to its capabilities. This architecture delivers full ACID (Atomicity, Consistency, Isolation, Durability) guarantees, a critical feature for ensuring data reliability and consistency in data lakes, a domain traditionally lacking such assurances. These properties mean that concurrent read and write operations can occur without the risk of data corruption, even when multiple users or systems interact with the same tables simultaneously.

The core of Delta Lake's versioning and ACID capabilities lies in its transaction log. This log meticulously records every operation performed on a Delta table, including writes, updates, deletes, and merges. Each operation creates a new, distinct table version, which is precisely tracked within this log. The transaction log adheres to a well-defined open protocol, allowing any system to read and interpret its contents. To optimize querying of historical versions and manage the growing size of the log files, Delta Lake periodically aggregates table versions into Parquet checkpoint files. This process reduces the need to read every individual JSON version of the history, streamlining access to past states. Azure Databricks typically automates this checkpointing frequency based on data size and workload, abstracting much of this complexity from the user.

Delta Lake is fully compatible with Apache Spark APIs and is specifically optimized for tight integration with Structured Streaming. This synergy allows organizations to leverage a single copy of data for both batch and streaming operations, enabling scalable incremental processing without data duplication. Given its robust features and seamless integration, Delta Lake is the default format for all table operations on Databricks, meaning users automatically benefit from its advanced capabilities simply by saving their data to the lakehouse with default settings.

2.2 Deep Dive into Schema Evolution Capabilities

Delta Lake's automatic schema evolution is a pivotal feature for managing data over time, enabling seamless and agile adaptation to evolving data sources without requiring manual intervention to update schemas. This is particularly valuable when consuming data from hundreds of sources, where manual schema updates would be impractical.

Schema evolution in Delta Lake can be enabled through several mechanisms:

By setting the .option("mergeSchema", "true") on a Spark DataFrame write or writeStream operation, new columns present in the incoming data can be automatically added to the target table's schema.

Utilizing the MERGE WITH SCHEMA EVOLUTION SQL syntax, or its equivalent in Delta table APIs, specifically allows for resolving schema mismatches during MERGE operations. This enables columns present in the source table but not in the target table to be automatically added.

Setting the Spark configuration spark.databricks.delta.schema.autoMerge.enabled to true for the current SparkSession enables automatic schema evolution globally for that session.

Delta Lake's schema evolution capabilities are described as highly comprehensive, supporting a wide array of complex scenarios. This includes adding new fields at the root level of the schema, removing existing fields, and managing changes within nested structures. For instance, it seamlessly handles the addition or removal of fields within complex struct types or even within array of structs types. While renaming a column is conceptually treated as a combination of removing the old column and adding a new one, Delta Lake also provides more direct capabilities like "column mapping" to rename or delete columns without necessitating a full data rewrite. This flexibility ensures that data consumers can quickly and agilely adapt to new characteristics of data sources, avoiding manual updates that can be tedious and error-prone.

2.3 Understanding Schema Enforcement Mechanisms

Azure Databricks, leveraging Delta Lake, enforces a strict schema on write policy for tables. This mechanism validates data quality at the point of ingestion, ensuring that all incoming data conforms to the predefined schema requirements of the target table. This proactive approach serves as a critical data quality gate. By validating incoming data against the defined schema at the point of ingestion, Delta Lake acts as a proactive gatekeeper, preventing malformed, inconsistent, or non-compliant data from entering the lakehouse. This directly mitigates data integrity issues and compliance violations, shifting quality control to an earlier stage in the data pipeline. This means Delta Lake inherently promotes higher data quality and reliability from the outset, significantly reducing the need for extensive, often reactive, downstream data cleaning and validation processes. It is a key differentiator for use cases where data integrity and adherence to strict data contracts are paramount, especially in highly regulated industries or for critical analytical workloads.

Specific rules are enforced for different operations:

For insert operations, Azure Databricks mandates that all inserted columns must already exist in the target table's schema. Furthermore, all column data types in the incoming data must precisely match the data types defined in the target table. While strict, Azure Databricks attempts to safely cast column data types to match the target table where such conversions are possible and safe.

During MERGE operations, similar validation rules apply. If a data type in the source statement does not match the target column, the MERGE operation attempts a safe cast to align with the target table's data type. Crucially, any columns that are the target of an UPDATE or INSERT action within the MERGE statement must already exist in the target table. When using the INSERT or UPDATE SET syntax, columns present in the source dataset but not in the target table are ignored. Conversely, the source dataset must contain all the columns present in the target table for these wildcard operations to execute successfully.

Despite this strict enforcement, the schema of a Delta table is not immutable. It can be modified through explicit ALTER TABLE statements or by leveraging the automatic schema evolution features discussed previously. This combination of strict enforcement and flexible evolution provides a robust yet adaptable framework for data management.

2.4 Time Travel: Capabilities, Syntax, and Practical Use Cases

Delta Lake's time travel features are a cornerstone of its data versioning capabilities, allowing users to query previous table versions based on either a specific timestamp or a unique table version number. This functionality is made possible because every operation performed when writing to a Delta table or directory is automatically versioned and meticulously recorded in the underlying transaction log. Users can access historical data through various methods:

Timestamp-based queries enable retrieval of data as it existed at a precise point in time. The timestamp expression can be a specific string (e.g., '2018-10-18T22:15:12.013Z'), a date string (e.g., '2018-10-18'), or a dynamic timestamp expression (e.g., current_timestamp() - interval 12 hours).

Alternatively, version number-based queries allow users to retrieve a specific historical version of the table by providing its unique version number, which can be obtained from the DESCRIBE HISTORY command.

Delta Lake also supports a concise @ syntax, allowing the version or timestamp to be included directly within the table name (e.g., people10m@20190101000000000 for timestamp, or people10m@v123 for version).

The practical applications of Delta Lake's time travel are extensive and impactful:

Auditing data changes is simplified, as the DESCRIBE HISTORY command provides comprehensive information about table modifications, including operations, the user who performed them, and timestamps.

Rolling back data becomes straightforward in the event of accidental poor writes or deletes. The

RESTORE command can be used to revert a table to a specific previous state, enabling quick recovery from errors.

For data science and machine learning, time travel is crucial for reproducing analyses and ML models. Data scientists can recreate experiments and models by accessing the exact dataset versions used previously. Integration with tools like MLflow allows linking specific data versions to training jobs, ensuring full reproducibility of machine learning workflows.

It provides a powerful mechanism for fixing data mistakes, allowing users to correct incorrect updates or recover accidentally deleted data by querying a past version and re-inserting or merging it into the current state.

Time travel significantly simplifies the execution of complex temporal queries, such as identifying new clients within a specific past week, which would otherwise be cumbersome to construct.

Finally, it offers snapshot isolation for a set of queries, ensuring that all queries within a batch operate on a consistent snapshot of fast-changing tables, preventing inconsistencies that can arise from concurrent modifications.

It is important to understand the history and retention configuration for Delta tables. The delta.logRetentionDuration property controls how long the transaction log history for a table is preserved, with a default of 30 days. Separately, delta.deletedFileRetentionDuration determines the threshold that the VACUUM operation uses to remove data files no longer referenced in the current table version, with a default of 7 days. For time travel queries to function beyond the default 7-day period for data files, both delta.logRetentionDuration and delta.deletedFileRetentionDuration must be explicitly configured to a larger value. This reveals an implicit trade-off between time travel retention and storage costs. While time travel offers immense benefits for auditing, reproducibility, and disaster recovery, its practical implementation necessitates a careful cost-benefit analysis. Organizations must strategically balance their need for historical data access (e.g., for regulatory compliance or long-term trend analysis) with their budget constraints for data storage. This highlights a common architectural dilemma in data lakehouses: enhanced data capabilities often come with increased resource consumption. Data architects must design retention policies not only based on data governance requirements but also on economic realities. This also suggests the need for robust data lifecycle management and tiered storage strategies to manage older, less frequently accessed historical data efficiently.

Delta Lake's capabilities also bridge the historical divide between batch and streaming data processing paradigms. Its tight integration with Structured Streaming allows for the use of a single copy of data for both batch and streaming operations. This means data ingested via streaming can be immediately available for batch analytics or vice-versa, simplifying the overall data architecture and reducing data duplication. This unified transactional storage layer eliminates the friction that historically led to batch and streaming pipelines operating in silos, often using different formats and processing engines. Delta Lake enables a true "lakehouse" pattern where real-time data can be seamlessly integrated and processed alongside historical data. This accelerates time-to-insight, reduces operational complexity, and allows organizations to build more agile and responsive data platforms that can handle diverse analytical and operational workloads from a single source of truth.

3. Data Lake Table Formats: Apache Iceberg

Apache Iceberg is another leading open-source table format designed to bring robust data management capabilities, akin to those found in traditional SQL databases, to large-scale data lakes. It offers high performance and reliability for huge analytic tables, enabling a wide array of query engines to work safely and concurrently with the same datasets.

3.1 Architectural Overview and Core Principles (ACID Transactions, Metadata Layer)

Apache Iceberg is defined as a high-performance, open-source table format for massive analytical datasets. Its primary objective is to infuse the reliability and simplicity of SQL tables into big data environments. This design allows various modern query engines, such as Apache Spark, Trino, Flink, Presto, Hive, and Impala, to safely operate on the same tables simultaneously.

A cornerstone of Iceberg's design is its full ACID (Atomicity, Consistency, Isolation, Durability) compliance, which is crucial for ensuring data consistency and reliability within data lakes. Iceberg manages data changes to prevent partial writes and conflicting updates, ensuring that tables remain consistent and reliable even when multiple users or systems are reading and writing data concurrently. It achieves atomic transactions through its sophisticated snapshot mechanism.

A key differentiator for Iceberg is its sophisticated, hierarchical metadata layer. Unlike traditional data lake approaches that often rely on directory structures to infer table state, Iceberg maintains a precise list of files and manages table schemas, snapshots, and partitioning information independently from the data itself. This metadata layer is essentially the central nervous system of Iceberg. Snippets consistently highlight Iceberg's "metadata layer" and "metadata files" as the core mechanism underpinning its advanced features like schema evolution, hidden partitioning, ACID transactions, and time travel. This suggests that Iceberg's intelligence, flexibility, and performance optimizations are not primarily derived from its data files, but from its rich, versioned, and independently managed metadata. This is a significant departure from traditional data lake approaches where metadata is often inferred or loosely coupled. The metadata layer acts as a comprehensive, self-describing catalog of the data's history, structure, and organization. This metadata-centric design shifts the burden of complex data management and query optimization from manual user intervention to an intelligent, automated system. This allows for more robust, self-optimizing data lakes that can adapt to changing requirements with minimal re-engineering, providing a more "managed" data lake experience that bridges the gap between raw data lakes and traditional data warehouses. It enables a higher degree of abstraction and automation for data engineers.

The metadata layer comprises:

Metadata Files: These are the top-level files that track the overall table state, including the schema, partitioning configuration, and pointers to current and historical snapshots. Any change to the table state, such as a commit, results in a new metadata file that atomically replaces the older one, ensuring consistency.

Manifest Lists: These files enumerate the manifest files that collectively constitute a specific snapshot of an Iceberg table at a given point in time.

Manifest Files: These track individual data files and delete files, providing vital statistics (e.g., column value ranges) that help query engines optimize performance by reducing the need to scan irrelevant files.

Iceberg stores data in immutable file formats such as Parquet, Avro, or ORC. When a table is updated, Iceberg creates new data files rather than modifying existing ones, which simplifies table management and ensures consistency. Furthermore, Iceberg supports various

catalog implementations, including Hive Metastore, AWS Glue, and REST-based catalogs, offering flexibility in integration with existing data ecosystems. Its

vendor-neutral and cloud-agnostic design ensures seamless operation with object storage solutions across different cloud providers, such as Amazon S3, Google Cloud Storage, and Azure Blob Storage.

3.2 Deep Dive into Schema Evolution Capabilities

Iceberg's schema evolution is a prominent feature, enabling flexible updates to a table's schema without necessitating a rewrite of the entire underlying dataset. This capability is particularly practical and critical in dynamic data environments where data requirements frequently change.

Iceberg supports a comprehensive range of schema modifications:

Adding new columns.

Dropping (deleting) existing columns.

Renaming columns.

Reordering columns.

Promoting (widening) data types (e.g., changing an int to a bigint).

A core principle of Iceberg's schema evolution is its backward compatibility. It manages schema changes primarily through its innovative metadata table evolution architecture. This ensures that existing queries and data pipelines continue to function correctly even as new data adopts the latest structural changes. Old data does not become "zombie data" that is unreadable by new schemas. This is achieved because schema changes only modify the metadata layer, not the underlying immutable data files. This design avoids expensive data rewrites during schema evolution, leading to significant time and resource savings for large datasets. Each schema within Iceberg is assigned a unique schemaId for tracking.

3.3 Hidden Partitioning and its Impact on Performance

A unique and powerful feature of Iceberg is its hidden partitioning. Unlike traditional table formats that require users to explicitly define and manage partitioning columns—a practice that can often lead to suboptimal query performance—Iceberg automatically tracks and optimizes partitions without exposing these columns directly to the end-user.

This automatic management significantly contributes to improved query performance. Iceberg handles partition pruning internally. For example, when querying sales data that is partitioned by date, Iceberg will automatically prune irrelevant partitions, drastically reducing the amount of data scanned and consequently improving query times. This abstraction optimizes query planning and eliminates performance bottlenecks often caused by an excessive number of small files in traditional partitioning schemes like Hive. This is more than just a convenience; it is a fundamental paradigm shift in how data lake performance is managed. By abstracting the physical partitioning details away from the user and query engine, Iceberg prevents common pitfalls (e.g., suboptimal partitioning schemes chosen by users, too many small files resulting from rigid partitioning) that often plague traditional data lakes and lead to performance degradation. It allows the table format to dynamically evolve its physical layout and optimize partition pruning without breaking existing queries or requiring user intervention. This feature significantly lowers the operational burden on data engineers and democratizes data lake optimization. It makes data lakes more user-friendly and consistently performant for analytical workloads, as users no longer need deep knowledge of the underlying physical data layout to write efficient queries. This contributes to the "lakehouse" vision by providing warehouse-like performance and manageability on top of object storage.

Iceberg also allows for flexible partition evolution, meaning users can add, drop, or split partitions incrementally without requiring tedious and expensive rebuild procedures or rewriting existing data files. For instance, an e-commerce marketplace might initially partition order data by day and later split to day and customer ID partitions as data accumulates, all seamlessly managed by Iceberg. This capability stems from Iceberg's design to decouple the logical partitioning scheme from the physical data layout by storing partitioning information in the metadata layer rather than relying solely on the physical directory structure. This enables the partitioning strategy to evolve independently of the data itself, providing unparalleled flexibility.

3.4 Time Travel and Rollback: Functionality and Applications

Iceberg's time travel feature empowers users to query historical snapshots of their data. This capability is underpinned by Iceberg's comprehensive maintenance of a complete history of all table changes. Each snapshot includes detailed metadata, specifying when changes occurred, who initiated them, and what modifications were applied.

Complementing time travel is the robust rollback capability, which allows users to easily revert a table to a previous version. This is invaluable for recovering lost data, correcting errors, or undoing accidental data deletions or corruptions. It provides a rapid means to restore tables to a known "good" state, ensuring data reliability and operational continuity.

The applications of these features are diverse and critical for modern data management:

They serve as a data recovery lifeline in scenarios where a dataset was accidentally overwritten.

They enable historical analysis, allowing users to query data as it existed at any specific point in the past for trend analysis, regulatory reporting, or forensic investigations.

The complete history of table changes facilitates robust auditing and debugging of issues, providing a clear lineage of data modifications.

Crucially, time travel ensures reproducibility by allowing users to specify and query exactly the same table snapshot, which is vital for consistent analytical results and machine learning model training.

While not explicitly named as a separate feature, the functionalities of Time Travel and Rollback inherently provide comprehensive data versioning. Every change to an Iceberg table creates a new snapshot, and these snapshots are retained, allowing users to access and query any past state of the data.

A notable difference between Iceberg and Delta Lake lies in their transaction models for handling updates and merges. Apache Iceberg uses a "merge-on-read" approach, whereas Delta Lake employs a "merge-on-write" strategy. While both achieve ACID compliance and data consistency, their underlying mechanisms have significant implications for workload performance. Merge-on-write (Delta Lake) typically involves physically rewriting data files during updates or deletes, which can lead to slower write operations but potentially faster read operations as the data is already "clean" and optimized. Conversely, merge-on-read (Iceberg) records updates and deletes as delta files (e.g., delete files) that are then applied during read operations. This can result in faster write operations but potentially slower reads if many deltas accumulate and require extensive merging at query time. This fundamental difference dictates which table format is more suitable for specific workload patterns. For highly transactional workloads with frequent small updates and deletes, where write latency is critical, Iceberg's merge-on-read might be advantageous. For analytical workloads where read performance is paramount and writes are less frequent or occur in larger batches, Delta Lake's merge-on-write might be preferred. This understanding is critical for data architects when making technology choices based on the primary use cases and performance requirements of their data pipelines.

4. Database Migration Tools: Flyway and Liquibase

For relational databases, managing schema changes and ensuring consistent deployments across environments has historically been a complex and error-prone process. Tools like Flyway and Liquibase have emerged as industry standards, automating these tasks and integrating database evolution into modern software development lifecycles.

4.1 Role in Relational Database Schema Changes and Version Control

Both Flyway and Liquibase are widely adopted open-source database migration tools, with their primary function being to manage database schema changes and bring them under stringent version control. They address the significant challenges associated with manual database migrations, such as the risk of data loss, application downtime, and compatibility problems, by automating the intricate process of applying database changes, thereby enhancing safety and improving efficiency.

These tools enable the controlled and seamless schema evolution of relational databases over time. As applications grow and their underlying data requirements change, the database schema must adapt without compromising existing data or application functionality. A core principle behind these tools is the treatment of database changes as code. Changes are defined in versioned scripts, which can be written in SQL, XML, YAML, or JSON, and are stored in source control repositories like Git. This approach mirrors application code management practices, ensuring full traceability, allowing teams to recreate any database version at a specific point in time, and fostering a "single source of truth" for the schema.

Crucially, both Flyway and Liquibase are designed for seamless integration into Continuous Integration/Continuous Deployment (CI/CD) pipelines. This automation ensures that the latest schema updates are consistently and reliably applied across all environments—development, testing, staging, and production—reducing human error and significantly accelerating deployment cycles.

4.2 Core Features, Workflow, and Scripting Approaches

While Flyway and Liquibase share the common goal of database version control, they differ in their approach to defining and orchestrating changes.

Versioned Scripts and Changelogs:

Flyway operates on versioned SQL scripts (e.g., V1__create_table.sql, V2__add_column.sql) that adhere to a strict naming convention, including a prefix, version number, separator, description, and suffix. These scripts are typically stored in a designated directory (e.g.,

classpath:db/migration). Flyway automatically determines the order of execution based solely on the numeric version embedded in the filename, ensuring a linear progression of changes. It tracks applied migrations internally within a

schema_version table in the database itself, recording details like installed rank, version, description, type, script name, and checksum.

Liquibase utilizes "ChangeLogs," which are master files that group individual "ChangeSets." Each ChangeSet represents an atomic database change and can be written in various formats, including SQL, XML, YAML, or JSON. Liquibase tracks applied changes in a dedicated

DATABASECHANGELOG table within the target database. Unlike Flyway, Liquibase offers more explicit control over the order of changes, as it is specified within the changelog file itself.

Both tools intelligently manage incremental changes by only applying new migrations or changesets that have not yet been executed against a specific database instance. This ensures that existing data remains intact during schema updates and prevents redundant operations.

Dependency Management:

Flyway implicitly manages dependencies by enforcing a sequential execution order based on version numbers. If a migration V2 depends on V1, Flyway ensures V1 is executed first.

Liquibase offers more explicit dependency management through its changelog structure, allowing for more complex orchestration of migration steps with features like preconditions, contexts, and labels.

Rollback Capabilities:

Flyway requires users to manually write rollback scripts, which are then supported in its Teams version.

Liquibase provides more automated rollback support, allowing users to revert a single changeset or an entire update based on a deployment ID.

Schema Drift Detection: Both tools offer capabilities for schema drift detection, which involves comparing the current state of a target database with the schema defined by the migration scripts in source control. This helps identify unauthorized or accidental changes that have occurred outside the version control process.

Multi-Database Support: A significant advantage of both tools is their extensive multi-database support. Flyway supports a variety of databases including PostgreSQL, MySQL, and Oracle. Liquibase boasts broader coverage, supporting over 60 relational databases, NoSQL databases, data lakes, and data warehouses, and can translate abstract commands into specific SQL dialects, reducing the need to maintain multiple schema versions manually.

Finally, both tools emphasize the importance of automated testing and validation of migration scripts in staging environments before deployment to production, which is crucial for ensuring data quality and preventing corruption.

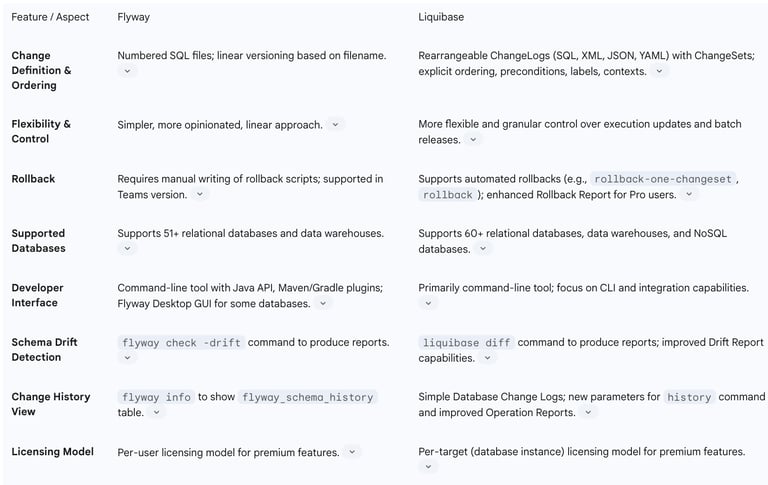

4.3 Comparison: Flyway vs. Liquibase

While both Flyway and Liquibase are robust solutions for database schema migration and version control, their design philosophies and feature sets cater to slightly different needs.

The core difference between Flyway and Liquibase lies in their approach to change orchestration. Flyway adopts a simpler, more opinionated, and linear versioning model based on file naming conventions. This makes it straightforward to learn and implement for projects with a clear, sequential migration path. In contrast, Liquibase introduces the concepts of ChangeLogs and ChangeSets, offering more granular control and flexibility. Users can specify explicit migration ordering, preconditions, labels, and contexts, allowing for more sophisticated migration logic and complex scenarios, particularly in enterprise environments with multiple databases and dependencies.

This distinction reflects a difference in design philosophy: Flyway prioritizes simplicity and a "migrations-based" approach, where each script is a step in a linear history. Liquibase, on the other hand, emphasizes more flexible, "artifact-based" deployments, allowing for non-linear changes, branching, and merging, which can be crucial for team collaboration and complex release cycles. While Flyway is often praised for its ease of use and quick implementation, Liquibase is frequently recognized for its power, flexibility, and comprehensive features, especially concerning rollbacks and broader database support. The choice between them often depends on the project's complexity, team size, and specific requirements for control over the database change workflow.

5. Schema Registries

In modern event-driven architectures and streaming data pipelines, ensuring data consistency and managing schema evolution is critical. Schema Registries provide a centralized solution to these challenges, acting as a single source of truth for data contracts.

5.1 Functionality and Role in Event-Driven Architectures

A Schema Registry serves as a centralized repository for schemas that are shared across multiple applications within an event-driven ecosystem. Historically, without such a registry, teams relied on informal agreements—like verbal understandings, shared documents, or wiki pages—to define message formats and handle serialization/deserialization. The Schema Registry eliminates this ambiguity by ensuring consistent message encoding and decoding, providing a formal description of the data within a message, including fields, data types, and nested structures.

The benefits of using a Schema Registry are substantial: it helps maintain data consistency, simplifies schema evolution, enhances interoperability between different systems, and significantly reduces development effort in loosely coupled and event-streaming workflows. For large, distributed organizations, a centralized schema repository enables highly reliable data processing and governance with minimal operational overhead. It provides a repository where multiple schemas can be registered, managed, and evolved, performing data validation for all schematized data.

The Schema Registry organizes schemas hierarchically:

A Schema defines the structure and data types of a message, identified by a unique schema ID that applications use to retrieve it.

A Subject acts as a logical container for different versions of a schema, managing how schemas evolve over time using compatibility rules. Each subject typically corresponds to a Kafka topic or a specific record object.

A Version is created and registered under a relevant subject whenever business logic necessitates changes to a message structure, allowing for tracking of schema evolution.

A Context provides a higher-level grouping or namespace for subjects, enabling different teams or applications to use the same subject name without conflicts within the same registry.

The Registry itself is the top-level container, storing and managing all schemas, subjects, versions, and contexts.

Schema Registries also provide client-side libraries (serializers and deserializers) for producers and consumers. These serializers can automatically register schemas when a message is produced, and deserializers retrieve the correct schema using an identifier passed with the message, ensuring consumers can correctly interpret the binary data. The registry supports multiple schema formats simultaneously, including Avro (the original default), Protocol Buffers, and JSON Schema, and is extensible to support custom formats.

5.2 Schema Evolution and Compatibility Rules

Schema evolution is a critical aspect of data engineering, allowing organizations to adapt to changing data structures and requirements. Schema Registries play a central role in managing this evolution by versioning every schema and enforcing specific compatibility rules. When a schema is first registered for a subject, it receives a unique ID and version number (e.g., version 1). Subsequent updates, if they pass compatibility checks, receive new IDs and incremented version numbers.The compatibility type determines how the Schema Registry compares a new schema with previous versions for a given subject. There are several primary compatibility modes:

Backward Compatibility: This mode ensures that consumers using a new schema version can still read and process data produced with older schema versions. Allowed changes include deleting fields or adding optional fields. If a field is removed, it should ideally be marked as deprecated to allow consumers to gracefully handle its absence in older data.

Forward Compatibility: This mode allows data produced with a new schema to be read by consumers using an older schema version. Allowed changes typically involve adding new fields or deleting optional fields. The older consumer will simply ignore the new fields.

Full Compatibility: This is a harmonious blend of both backward and forward compatibility, meaning consumers using new schemas can read old data, and consumers using old schemas can read new data. This offers the most flexibility for upgrading producers and consumers independently.

None Compatibility: In this mode, compatibility checks are disabled, requiring careful coordination between client upgrades to avoid breaking changes.

These compatibility types can also have "transitive" variants (e.g., BACKWARD_TRANSITIVE), which ensure compatibility not just with the immediately preceding version, but with all previous versions of the schema. Compatibility checks are performed during schema registration and updates, helping to catch potentially breaking changes early. Organizations can configure compatibility rules globally or on a per-subject basis, tailoring requirements to specific use cases. The Schema Registry ensures that both producers and consumers adhere to these defined compatibility rules, maintaining data quality and preventing corruption.

5.3 Data Governance and Data Contracts

Schema Registries are a key component for robust data governance, helping organizations ensure data quality, adherence to standards, visibility into data lineage, audit capabilities, foster collaboration across teams, and streamline application development protocols. By enforcing schema definitions, they help prevent data corruption and simplify schema management by providing a centralized location for managing schema versions.

A significant evolution in data governance, supported by Schema Registries, is the concept of Data Contracts. A data contract is a formal agreement between an upstream data producing component and a downstream data consuming component regarding the structure and semantics of data in motion. A schema is merely one element of a comprehensive data contract.

A data contract specifies and supports several critical aspects of this agreement:

Structure: Defined by the schema, outlining fields and their types.

Integrity Constraints: Declarative constraints or data quality rules on field values (e.g., an age must be a positive integer).

Metadata: Additional information about the schema or its parts, such as sensitive data flags or documentation.

Rules or Policies: Enforceable rules, such as requiring encryption for sensitive fields or routing invalid messages to a dead-letter queue.

Change or Evolution: Data contracts are versioned and can support declarative migration rules for transforming data between versions, accommodating complex schema evolution that might otherwise break downstream components.

The upstream component is responsible for enforcing the data contract, while the downstream component can then safely assume that the data it receives conforms to the agreed-upon contract. Data contracts are vital because they provide transparency over dependencies and data usage in a stream architecture, ensuring the consistency, reliability, and quality of data in motion. Schema Registries, by providing a centralized repository for managing and validating schemas, act as powerful enablers of data contracts. They formalize the communication and enforcement of data quality standards in distributed systems. This shifts data quality from an implicit understanding to an explicit, machine-enforceable agreement, significantly reducing data inconsistency and integration issues across diverse applications and teams. Starting a project with a Schema Registry is considered a best practice, as it prevents data inconsistency, simplifies data governance, reduces development time by eliminating custom schema management code, facilitates collaboration, and improves system performance through optimized schema validation.

5.4 Integration with Data Lake Table Formats (Delta Lake, Iceberg)

Schema Registries extend their utility beyond traditional messaging systems by integrating with modern data lake table formats like Delta Lake and Apache Iceberg. This integration is crucial for maintaining end-to-end data consistency and enabling seamless schema evolution from streaming sources into the lakehouse.

For Delta Lake, Schema Registries, particularly Confluent Schema Registry, play a vital role in data ingestion pipelines. Confluent's Tableflow, for instance, offers a robust integration with Databricks Unity Catalog, creating a seamless pathway between data in motion (Kafka topics) and data at rest (Delta Lake tables). This integration involves converting Kafka segments and schemas (in Avro, JSON, or Protobuf) into Delta Lake compatible schemas and Parquet files, with Schema Registry acting as the source of truth for schema definitions. The system automatically detects schema changes, such as adding fields or widening types, and applies them to the respective Delta Lake tables. Confluent's Delta Lake Sink connector also supports input data from Kafka topics in schema-based formats (Avro, JSON Schema, Protobuf), requiring Schema Registry to be enabled for schema validation and management. This allows for centralized data discovery and fine-grained access control over the materialized Delta Lake tables, ensuring security and compliance.

Similarly, Apache Iceberg integrates with Schema Registries to manage schema for streaming data. When data is consumed from Kafka topics and written to Iceberg tables, especially through tools like Kafka Connect, the Schema Registry ensures that the incoming data conforms to a defined schema. By registering schemas in the Schema Registry, data can be sent to Kafka with a small payload that includes only a pointer to the schema, while the full schema definition remains available for any consumer to use. This ensures that data lands in Iceberg with correct data types and structures, facilitating schema evolution without manual intervention. Iceberg's inherent features like schema evolution and ACID compliance complement the Schema Registry's capabilities, providing a robust solution for managing large analytic datasets sourced from streaming pipelines. The Schema Registry acts as a critical component in ensuring that the schema evolution capabilities of Iceberg are fully leveraged, providing a consistent and validated schema for data as it moves from real-time streams into the analytical lakehouse.

Conclusion

The effective management of schema and data versioning is no longer a mere technical consideration but a strategic imperative for organizations navigating the complexities of modern data ecosystems. From the structured world of relational databases to the dynamic landscapes of data lakes and real-time streaming, the ability to track, control, and evolve data structures and content is fundamental to maintaining data integrity, ensuring reproducibility, and fostering agility.

Delta Lake and Apache Iceberg stand out as foundational technologies for data lakes, each offering robust solutions for ACID transactions, schema evolution, and time travel. Delta Lake's "schema on write" enforcement acts as a proactive data quality gate, ensuring data integrity from ingestion, while its unified transaction log seamlessly bridges batch and streaming paradigms. Apache Iceberg, with its sophisticated metadata layer, provides a highly managed data lake experience, abstracting complex optimizations like hidden partitioning and enabling flexible schema evolution without data rewrites. The differing "merge-on-write" (Delta Lake) and "merge-on-read" (Iceberg) strategies present distinct performance characteristics, requiring careful consideration based on specific workload patterns.

Complementing these data lake formats, tools like Flyway and Liquibase provide essential version control for relational database schemas. By treating database changes as code and integrating with CI/CD pipelines, they automate migrations, reduce errors, and ensure consistent deployments. While Flyway offers a simpler, linear approach, Liquibase provides more granular control and broader database support, catering to diverse project needs.

Finally, Schema Registries are indispensable for event-driven architectures, acting as centralized repositories that enforce data contracts and manage schema evolution for streaming data. They ensure consistent message encoding/decoding, simplify interoperability, and provide critical data governance capabilities. Their integration with data lake formats like Delta Lake and Iceberg extends schema management from the stream to the lakehouse, establishing a single source of truth for data structures across the entire data journey.

In essence, the comprehensive implementation of schema and data versioning across these diverse data management components—from database migration tools to data lake table formats and schema registries—creates a resilient, auditable, and adaptable data infrastructure. This integrated approach not only mitigates the significant challenges of data inconsistency, application downtime, and compliance risks but also empowers organizations to confidently leverage their data for reliable analytics, reproducible machine learning, and informed decision-making in an ever-evolving digital landscape.

FAQ

What are Schema Versioning and Data Versioning, and why are they crucial in modern data environments?

Schema versioning involves systematically tracking and managing changes to the structural blueprint of a dataset over time. For instance, in a NoSQL database, a "SchemaVersion" field might be embedded in documents to indicate their structure. Data versioning, on the other hand, is the comprehensive process of maintaining and managing distinct versions of entire datasets as they evolve. This includes capturing snapshots, tracking alterations, maintaining metadata, and providing mechanisms to access and compare historical states.

Both are vital because modern data environments are inherently dynamic. Data structures continuously adapt to new features and business demands. Versioning ensures data integrity, facilitates backward compatibility (allowing older applications to work with new data), enhances reliability by maintaining an immutable historical record, and enables reproducibility for analytics and machine learning. It's also critical for troubleshooting, experimentation, and meeting compliance requirements, ultimately leading to more reliable analyses and better decision-making. Even in "schema-less" environments, explicit schema versioning brings order and integrity, preventing chaotic data states.

What are the main challenges in managing schema and data evolution, and how can they be mitigated?

Managing schema and data evolution presents several complex challenges. A significant risk is to data integrity and consistency, as unsynchronised schema changes can lead to misinterpretation, loss, or corruption, potentially causing application downtime and incompatibility. This "schema drift" can be time-consuming and expensive to fix, increasing maintenance costs and complexity, especially with multiple data versions and interdependencies. A lack of predictability and standardization in schema changes can jeopardise data quality and compatibility, while the absence of a clear historical record makes rollbacks difficult. Scaling these challenges with increasing data volume and interconnected services is also a concern. Furthermore, unmanaged changes can lead to compliance violations, and storing multiple versions can increase storage requirements and team collaboration overhead.

These challenges can be mitigated by shifting from a reactive "schema drift" approach to a proactive "schema evolution" strategy. Implementing systematic tools and processes, ensuring proper coordination across systems, and maintaining a clear historical record of changes are key. Robust versioning practices, careful planning for data retention, and integrating versioning into data pipelines can help manage complexity and ensure data quality.

How do Delta Lake and Apache Iceberg facilitate schema and data versioning in data lakes?

Delta Lake and Apache Iceberg are open-source table formats that bring robust data management capabilities to data lakes, significantly addressing schema and data versioning.

Delta Lake Delta Lake provides full ACID (Atomicity, Consistency, Isolation, Durability) guarantees through its file-based transaction log, which meticulously records every operation, creating distinct table versions. Its automatic schema evolution feature allows seamless adaptation to evolving data sources without manual intervention, supporting changes like adding or removing columns and managing nested structures. Delta Lake enforces a strict "schema on write" policy, validating incoming data against the predefined schema at ingestion to ensure quality and prevent malformed data. Its time travel feature allows users to query previous table versions based on timestamp or version number, enabling auditing, rollbacks, and reproducibility of analyses and ML models.

Apache Iceberg Apache Iceberg also offers full ACID compliance and achieves atomic transactions through a sophisticated snapshot mechanism. A key differentiator is its hierarchical metadata layer, which precisely tracks file lists, schemas, snapshots, and partitioning independently from the data. This metadata-centric design underpins its advanced features, providing a self-describing catalog of data history. Iceberg's schema evolution supports adding, dropping, renaming, reordering columns, and promoting data types without rewriting the entire dataset, thanks to its metadata-only changes. Its unique hidden partitioning automatically optimises partitions without user intervention, improving query performance. Like Delta Lake, Iceberg provides time travel for querying historical snapshots and a robust rollback capability for data recovery and error correction.

What are the key differences between Delta Lake's and Apache Iceberg's approaches to managing data and schema?

While both Delta Lake and Apache Iceberg provide ACID compliance, schema evolution, and time travel for data lakes, they have distinct architectural and operational differences.

Schema Enforcement and Evolution:

Delta Lake: Employs a strict "schema on write" enforcement, meaning incoming data is validated against the table's schema at ingestion. This acts as a proactive data quality gate. Schema evolution is automatic and can be enabled via options like mergeSchema or MERGE WITH SCHEMA EVOLUTION SQL syntax, allowing new columns to be added or types to be safely cast.

Apache Iceberg: Offers flexible schema evolution that allows changes (add, drop, rename, reorder columns, widen types) without necessitating a rewrite of the underlying data files. This is primarily achieved by modifying its sophisticated metadata layer. Iceberg's "schema-on-read" characteristics are more prominent, handling schema evolution through metadata updates rather than strict write-time enforcement for all changes.

Transaction Models and Performance:

Delta Lake: Uses a "merge-on-write" strategy. When updates or deletes occur, data files are physically rewritten. This can lead to slower write operations but potentially faster read operations because the data is always "clean" and optimised for querying. It seamlessly bridges batch and streaming paradigms with a unified transaction log.

Apache Iceberg: Employs a "merge-on-read" approach. Updates and deletes are recorded as delta or delete files that are applied during read operations. This can result in faster write operations as it avoids immediate data rewrites, but potentially slower reads if many deltas accumulate, requiring extensive merging at query time. This makes it potentially more suitable for workloads with frequent small updates.

Partitioning:

Delta Lake: Requires explicit definition and management of partitioning columns, similar to traditional Hive tables.

Apache Iceberg: Features "hidden partitioning," where it automatically tracks and optimises partitions without exposing these columns directly to the end-user. This abstracts the physical partitioning details, preventing common pitfalls and allowing for flexible partition evolution without data rewrites or expensive rebuilds.

How do database migration tools like Flyway and Liquibase manage schema changes in relational databases?

Flyway and Liquibase are open-source database migration tools designed to manage and version schema changes in relational databases, integrating them into modern software development lifecycles. They automate the process of applying database changes, reducing risks like data loss or downtime associated with manual migrations.

Their core principle is to treat database changes as "code." Changes are defined in versioned scripts (SQL, XML, YAML, JSON) and stored in source control (e.g., Git), providing full traceability and a "single source of truth" for the schema. Both tools track applied migrations within the database itself (e.g., Flyway uses schema_version, Liquibase uses DATABASECHANGELOG), ensuring only new changes are applied incrementally.

Flyway operates on numbered SQL scripts that adhere to a strict naming convention. It enforces a linear execution order based on the version number in the filename.

Liquibase uses "ChangeLogs" that group "ChangeSets," each representing an atomic database change. It offers more explicit control over the order of changes within the changelog file and supports various formats beyond SQL.

Both tools facilitate schema drift detection by comparing the database state with the version-controlled scripts. They are designed for seamless integration into CI/CD pipelines, ensuring consistent and reliable schema deployments across development, testing, staging, and production environments, thereby accelerating deployment cycles and reducing human error.

What are Schema Registries, and how do they support schema evolution in event-driven architectures?

A Schema Registry is a centralized repository for managing and sharing schemas across multiple applications in an event-driven ecosystem, such as those built with Apache Kafka. It eliminates ambiguity in message formats by providing a formal description of data structure and types, ensuring consistent message encoding and decoding.

Schema Registries support schema evolution by versioning every schema and enforcing specific compatibility rules. When a schema is updated, it receives a new version number if it passes compatibility checks. The primary compatibility modes include:

Backward Compatibility: Ensures new consumers can read data produced with older schema versions (e.g., by allowing optional field additions or field deletions).

Forward Compatibility: Allows data produced with a new schema to be read by consumers using an older schema version (e.g., new fields are ignored by old consumers).

Full Compatibility: A blend of both, allowing independent upgrades of producers and consumers.

None Compatibility: Disables checks, requiring careful coordination.

These compatibility types can also have "transitive" variants. Compatibility checks are performed during schema registration and updates, helping to catch breaking changes early. By providing client-side libraries for serialization and deserialization, the Schema Registry ensures producers register schemas and consumers retrieve the correct schema, maintaining data quality and preventing corruption across the event stream.

How do Schema Registries contribute to data governance and the concept of Data Contracts?

Schema Registries are a key component for robust data governance. By providing a centralized location for managing schema versions and enforcing schema definitions, they help maintain data quality, ensure adherence to standards, and provide visibility into data lineage, simplifying auditing and fostering cross-team collaboration. They streamline application development by formalising data structures and reducing the need for custom schema management code.

Furthermore, Schema Registries are powerful enablers of "Data Contracts." A data contract is a formal agreement between a data producing component (upstream) and a data consuming component (downstream) regarding the structure, semantics, and quality of data in motion. While a schema defines the data structure, a data contract expands on this to include:

Integrity Constraints: Rules on field values (e.g., age must be positive).

Metadata: Additional information like sensitive data flags.

Rules or Policies: Enforceable rules (e.g., encryption requirements).

Change or Evolution: Declarative migration rules to transform data between versions during complex schema evolution.

The Schema Registry centralises the management and validation of the schema component of these contracts, ensuring the upstream producer adheres to the agreed-upon format, and the downstream consumer can safely assume data conforms. This formalisation shifts data quality from implicit understanding to an explicit, machine-enforceable agreement, significantly reducing data inconsistency and integration issues across diverse applications and teams in distributed systems.

Can Schema Registries integrate with data lake table formats like Delta Lake and Apache Iceberg, and why is this important?

Yes, Schema Registries can integrate with modern data lake table formats like Delta Lake and Apache Iceberg. This integration is crucial for maintaining end-to-end data consistency and enabling seamless schema evolution from streaming data sources (e.g., Kafka topics) into the lakehouse environment.

For Delta Lake, Schema Registries (such as Confluent Schema Registry) play a vital role in data ingestion pipelines. Tools like Confluent's Tableflow can seamlessly convert data from Kafka topics with schemas (Avro, JSON, Protobuf) into Delta Lake compatible schemas and Parquet files. The Schema Registry acts as the source of truth for these schema definitions, allowing the system to automatically detect and apply schema changes (like adding fields or widening types) to the respective Delta Lake tables. This ensures centralised data discovery and schema validation for data materialised in Delta Lake.

Similarly, Apache Iceberg integrates with Schema Registries to manage schemas for streaming data. When data from Kafka topics is written to Iceberg tables, the Schema Registry ensures that the incoming data conforms to a defined schema. By registering schemas in the registry, data can be sent to Kafka with a small payload that merely points to the schema, while the full definition remains available for any consumer. This ensures data lands in Iceberg with correct data types and structures, facilitating schema evolution without manual intervention.

This integration is important because it bridges the gap between real-time streaming data and analytical data at rest in the lakehouse. It ensures that schema evolution is consistently managed across the entire data journey, from the moment data is produced in a stream to when it is queried in an analytical table. This provides a unified approach to schema management, enhancing data quality, simplifying data governance, and enabling more reliable analytics and machine learning on data sourced from real-time pipelines.