The Trillion-Parameter Price Tag: A Definitive Economic Analysis of GPT-5

The narrative of GPT-5 being merely a "cost-saving exercise" is a significant oversimplification. Instead, it represents a paradoxical investment: a higher upfront research and development (R&D) cost to build a more complex, efficient system, enabling a pricing strategy that is aggressively competitive and aims to commoditize high-end AI capabilities, thereby pressuring rivals and solidifying market leadership.

This report provides a comprehensive economic deconstruction of OpenAI's GPT-5, addressing the multifaceted question of its perceived expense. The analysis demonstrates that GPT-5's cost is not a simple metric but a complex equation balancing four primary forces: 1) the exponentially scaling capital expenditure for training at the frontier of artificial intelligence; 2) the sophisticated architectural innovations designed to mitigate long-term operational (inference) costs; 3) the significant and growing "safety tax" required to align and secure highly capable models; and 4) a strategic pricing model designed for market consolidation rather than direct cost recovery.

The narrative of GPT-5 being merely a "cost-saving exercise" is a significant oversimplification. Instead, it represents a paradoxical investment: a higher upfront research and development (R&D) cost to build a more complex, efficient system, enabling a pricing strategy that is aggressively competitive and aims to commoditize high-end AI capabilities, thereby pressuring rivals and solidifying market leadership. The report concludes that GPT-5 is "expensive" to build because it operates at the bleeding edge of technological possibility, but its pricing is a deliberate strategic choice reflecting the hyper-competitive landscape of the AI industry.

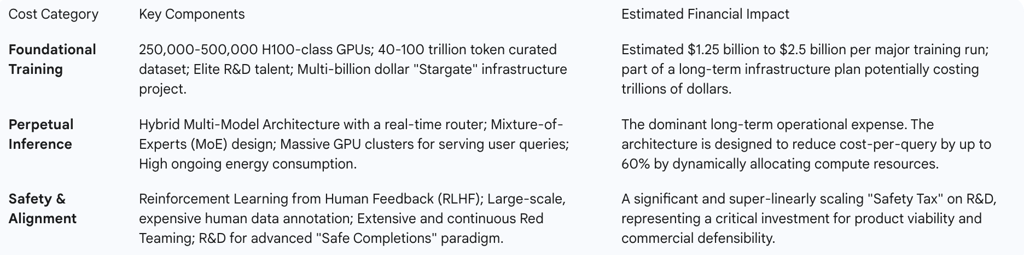

The Foundational Cost — Training a Frontier Model

The creation of a frontier large language model (LLM) like GPT-5 requires a monumental, non-recurring capital expenditure that serves as a primary driver of its overall cost. These foundational expenses are growing at an exponential rate with each successive model generation, creating a formidable barrier to entry for all but a handful of the world's most well-capitalized technology firms. This section dissects the primary components of this cost: the unprecedented scale of computational hardware, the vast and meticulously curated data engine, the price of elite human talent, and the staggering final price tag relative to its predecessors.

1.1 The Unprecedented Scale of Compute

The largest single capital expenditure in training GPT-5 is the acquisition, deployment, and operation of a massive fleet of specialized computational hardware. The scale required for this endeavor has moved beyond building a simple server cluster and into the realm of constructing national-level digital infrastructure.

The training of GPT-5 is rumored to have required a fleet of 250,000 to 500,000 NVIDIA H100-class Graphics Processing Units (GPUs). This represents a 10- to 20-fold increase in raw computing power compared to its predecessor, GPT-4, which was trained on an estimated 25,000 A100 GPUs. The cost of this hardware is immense; a single high-end H100 accelerator can cost between $25,000 and $40,000, placing the hardware cost for the training cluster alone in the billions of dollars. Beyond procurement, these massive data centers consume vast amounts of electricity, not only to power the chips but also for the industrial-scale cooling systems required to prevent them from overheating during the weeks or months of continuous operation needed for a training run.

This immense hardware cost cannot be viewed in isolation. It is a component of OpenAI's broader, long-term infrastructure strategy, which operates on a scale previously unseen in the private sector. CEO Sam Altman has publicly stated that OpenAI expects to spend "trillions" of dollars on data center construction in the near future, a figure that dwarfs the GDP of many nations. This ambition is embodied by the "Stargate" initiative, a joint venture with strategic partners including SoftBank and Oracle. Initially announced with a $500 billion, four-year investment plan, Stargate aims to build a network of AI supercomputers dedicated exclusively to OpenAI's needs. This reframes the cost of GPT-5's training compute not as a one-time product expense, but as a significant down payment on a multi-generational capital investment plan. The high cost of creating the model is, therefore, a strategic decision to build a defensible technological moat, ensuring that only a few global entities can afford to compete at the frontier of AI development.

1.2 The Data Engine: Fuel for the Machine

While hardware represents the engine, data is the fuel, and the cost of acquiring and refining a dataset suitable for training a model like GPT-5 is a substantial and often underestimated expense. The model is estimated to have been trained on a corpus of 40 to 100 trillion tokens, a four- to ten-fold increase from GPT-4's estimated 10 trillion tokens.

The cost is driven not merely by the sheer volume of data but by its quality. The process involves sourcing, scraping, and then meticulously cleaning and filtering petabytes of raw data from the web, licensed partner content, books, and academic papers. A critical and increasingly costly component of this process is the generation of high-quality synthetic data. This involves using existing powerful AI models to generate "textbook quality" content on specific topics, a process that itself consumes significant compute resources but is essential for imbuing the new model with structured, reliable knowledge. The curation of a dataset of this magnitude and quality is a massive data engineering and R&D challenge, contributing significantly to the final development cost.

1.3 The Human Element: The Price of Elite Talent

The third pillar of foundational cost is the immense investment in human capital. Building a frontier model is a complex R&D endeavor that requires a team of the world's most sought-after and highly compensated AI researchers and engineers. OpenAI's financial reports reflect this reality, with projected losses of $5 billion against $3.7 billion in revenue for 2024 and an estimated annual spend of $28 billion by some analyses, a significant portion of which is dedicated to R&D and personnel.

Sam Altman has described the AI talent market as the "most intense" he has ever witnessed, a statement substantiated by independent analysis. Research from Epoch AI estimates that R&D staff salaries can constitute between 29% and 49% of a frontier model's total development cost, a figure that rivals the cost of the hardware itself. OpenAI's reported average annual spend of approximately $2.1 million per employee further underscores the extreme cost of attracting and retaining the elite talent necessary to push the boundaries of AI research. The total cost of GPT-5 must therefore account for the years of high-cost R&D that preceded its final training run. The publicly cited training cost figures represent only the final, successful execution. The true development cost is likely 2 to 3 times higher, accounting for the numerous failed or exploratory experiments that are an inherent part of the scientific process at this scale.

1.4 Benchmarking the Billions: A Generational Leap in Cost

To fully appreciate the scale of investment in GPT-5, it is essential to place its cost in the context of its predecessors and competitors. This comparison reveals an exponential growth trend in the cost of training frontier models.

GPT-3, released in 2020, had an estimated training compute cost of up to $4.6 million. Just a few years later, the cost for GPT-4 reportedly exceeded $100 million. In a similar timeframe, Google's Gemini Ultra cost an estimated $191 million in training compute. GPT-5's rumored training cost, estimated to be between $1.25 billion and $2.5 billion, represents another dramatic, order-of-magnitude leap in expenditure. This aligns with industry analysis suggesting that the cost of training frontier AI models has been growing by a factor of 2 to 3 times per year, a trend that, if it continues, will push the cost of the largest training runs past a billion dollars by 2027.

This exponential increase underscores a fundamental economic reality of the AI industry: staying at the technological frontier requires a level of capital investment that is itself growing at a staggering rate, solidifying the market power of the few firms capable of sustaining such an arms race.

The Perpetual Cost — The Economics of Inference

While the multi-billion-dollar cost of training captures headlines, it represents a one-time capital expenditure. The dominant, long-term economic challenge for any scaled AI service is the perpetual cost of inference—the computational expense of running the model for every single user query. This ongoing operational cost is a primary driver of the final price paid by consumers and developers. GPT-5's core architecture is a direct, albeit expensive, response to this challenge, representing a sophisticated attempt to manage and mitigate the tyranny of inference at a global scale.

2.1 The Tyranny of Inference: Why Every Prompt Has a Price

The act of generating a response from a trained LLM, known as inference, is a computationally intensive process that incurs a real cost every time it is performed. Unlike traditional software, where the marginal cost of serving a user is near zero, each AI query consumes a measurable amount of GPU cycles, electricity, and network bandwidth. When scaled to hundreds of millions of daily requests, as is the case with ChatGPT, this operational expenditure becomes immense. At its peak, ChatGPT was estimated to require nearly 30,000 GPUs just to handle its daily inference load, a hardware footprint dedicated solely to running the model, separate from the cluster used for training.

The economics of inference are governed by complex technical trade-offs. Achieving fast, low-latency responses at scale is fundamentally constrained not just by raw arithmetic processing power, but by bottlenecks in memory bandwidth (the speed at which data can be moved onto the GPU) and network latency (the delay in communication between GPUs working in parallel). This creates a difficult optimization problem: increasing speed by parallelizing a query across more GPUs can exponentially increase the cost per token generated. This relationship defines a "Pareto frontier" of efficiency, where labs must constantly balance performance against cost. It is this perpetual, scaling cost of inference, more so than the one-time training cost, that dictates the long-term economic viability of any LLM service.

2.2 An Architecture of Efficiency: The GPT-5 System

A core reason for GPT-5's expense is that OpenAI invested heavily in a more complex and costly-to-develop system architecture specifically to make it cheaper to run at scale. This represents a classic engineering trade-off: incurring higher fixed costs in R&D to lower the variable costs of operation. The "expensiveness" of GPT-5 lies not just in the size of its models, but in the complexity of the cost-optimization system built around them.

GPT-5 is not a single, monolithic model but is described as a "unified system" composed of multiple, specialized sub-models. This hybrid architecture includes a fast, efficient model (

gpt-5-main) designed to handle the majority of standard queries with low latency, and a separate, deeper, and more computationally expensive reasoning model (gpt-5-thinking) reserved for more complex problems.

The linchpin of this entire system is a real-time router. This component analyzes each incoming prompt and, based on its complexity, context, and tool requirements, dynamically allocates the most appropriate and cost-effective model to handle the request. This router is the system's primary cost-control mechanism. By avoiding the use of the expensive "thinking" model unless absolutely necessary, it can save up to 60% on inferencing costs compared to a system that uses a single large model for all tasks.

At a more granular level, the models within the GPT-5 system almost certainly leverage a Mixture-of-Experts (MoE) architecture. In an MoE model, the neural network is composed of many smaller "expert" sub-networks. For any given input token, a routing mechanism activates only a small subset of these experts. This allows for the creation of models with a massive total number of parameters (rumored to be in the trillions for GPT-5) while keeping the number of active parameters—and thus the computational cost of inference—relatively low. This architectural choice is fundamental to making models of this scale economically feasible to operate.

The botched launch of GPT-5, however, exposed a critical vulnerability in this sophisticated architecture. A malfunctioning router was reported to have misdirected user queries to cheaper, less capable models, making the entire system appear "way dumber" than it actually was. This incident highlights that the user's perception of "GPT-5's intelligence" is no longer tied to the raw capability of its best model, but is now mediated entirely by the performance of its cost-saving router. The economic viability of this complex system, therefore, hinges on the flawless execution of this single component, creating a new and critical point of failure.

2.3 The "Cost-Saving Exercise" Debate

The introduction of this router-based system led many observers to conclude that GPT-5 was primarily a "cost-saving exercise" designed to manage OpenAI's immense operational expenses. This view is supported by statements from Sam Altman, who acknowledged that a "big GPU crunch" and a strategic goal to "optimize for inference cost" were significant factors in GPT-5's design. The decision to deprecate older, more expensive models like o3 and replace them with a system that defaults to cheaper sub-models further fueled this narrative.

However, to characterize GPT-5 solely as a cost-cutting measure is to overlook the substantial investment in its enhanced capabilities. Despite the clear focus on efficiency, GPT-5 established new state-of-the-art (SOTA) performance across a wide range of difficult academic and real-world benchmarks, including mathematics (94.6% on AIME 2025), coding (74.9% on SWE-bench Verified), and multimodal understanding (84.2% on MMMU). It significantly outperforms its predecessors, GPT-4o and o3, in these demanding domains. This demonstrates that the investment was not merely in cost reduction but also in a genuine and expensive leap forward in capability. GPT-5 is therefore best understood as a dual-purpose system: one that pushes the performance frontier while simultaneously implementing a more sustainable economic architecture for delivering that performance at scale.

The Hidden Costs of Capability and Safety

Beyond the immense costs of pre-training and inference lies a third, often underestimated, category of expenditure: the investment required to make a powerful model safe, reliable, and commercially viable. As models like GPT-5 become more capable, their potential for misuse and unintended harm grows exponentially. Consequently, the investment needed to align them with human values and secure them against adversarial attacks—a "Safety Tax"—scales even faster. This post-training refinement is not an optional add-on but a critical and costly component of developing any frontier model intended for public deployment.

3.1 Aligning with Humanity: The High Cost of RLHF

The primary technique used to align LLMs with human preferences is Reinforcement Learning from Human Feedback (RLHF). This is a complex, multi-stage process that adds significant computational and labor costs on top of the initial pre-training. The process begins with the collection of a large dataset of human preference data, where human labelers rank or compare different model outputs. This data is then used to train a separate "reward model," which learns to predict human preferences. Finally, the original LLM is fine-tuned using reinforcement learning, with the reward model providing the feedback signal to guide the model's behavior toward more helpful, honest, and harmless responses.

The most significant bottleneck and cost driver in this process is the sourcing of high-quality human preference data. Data annotation has become a multi-billion dollar industry, reflecting the high cost and time-consuming nature of this work. Skilled human annotators, particularly those with specialized expertise in areas like coding or law, can command hourly rates of $20 to $40 or more. To create a dataset large and diverse enough to effectively align a model of GPT-5's complexity requires a massive investment in this specialized human labor, making RLHF a major contributor to the model's overall development cost.

3.2 Building Resilience: The Economics of Large-Scale Red Teaming

Before a model can be safely deployed, it must undergo rigorous adversarial testing, known as red teaming. This process involves simulating a wide range of attacks to proactively identify and mitigate vulnerabilities, such as the potential to generate harmful content, leak sensitive information, or be "jailbroken" to bypass safety controls. Red teaming is a resource-intensive necessity, requiring both creative human expertise to devise novel attacks and significant compute resources to run these tests at scale.

The economics of red teaming are inherently inefficient. A benchmark analysis of automated red teaming processes revealed that failed attempts—where the model successfully resists the attack—can be nearly ten times more expensive in terms of compute than successful jailbreaks, as the attacking model burns resources exploring unsuccessful pathways. This means that a more robust and secure model is paradoxically more expensive to test. Despite this substantial investment, the security of frontier models remains an ongoing challenge. Initial versions of GPT-5 were reportedly still vulnerable to jailbreaking techniques, highlighting that red teaming is not a one-time cost but a continuous and expensive arms race between developers and potential adversaries.

3.3 From "Hard Refusals" to "Safe Completions": The R&D Cost of Nuanced Safety

With GPT-5, OpenAI has invested in a more sophisticated safety paradigm that moves beyond the simple, often frustrating, "hard refusals" of previous models. This new approach, termed "safe completions," trains the model to provide the most helpful and useful response possible while still adhering to strict safety boundaries. For example, when faced with a dual-use query, instead of refusing entirely, the model might provide safe, high-level educational information while declining to provide specific, actionable instructions that could be misused.

This shift is not merely an ethical choice but a strategic product decision. A model that frequently refuses to answer legitimate queries is less useful and therefore commercially less viable. By investing heavily in the R&D required to develop, train, and validate this more nuanced safety system, OpenAI is directly increasing the product's utility and user retention. This preempts competitors from gaining a market advantage by offering a "more helpful" but potentially less safe alternative. The development of this advanced safety system requires more complex reward models and more detailed fine-tuning datasets, adding another layer of expense to the project. This "Safety Tax" is a permanent and accelerating component of frontier model development, as the investment required to secure a model grows faster than its raw capabilities.

Market Dynamics and Strategic Pricing

The final piece of the puzzle in understanding GPT-5's cost is to connect its immense underlying expenses to its public-facing price. An analysis of OpenAI's pricing strategy reveals that it is not a simple cost-plus model designed for immediate profitability. Instead, it is a highly strategic tool, funded by massive capital reserves, designed to consolidate market share, pressure competitors, and accelerate the commoditization of advanced AI in a fiercely competitive landscape.

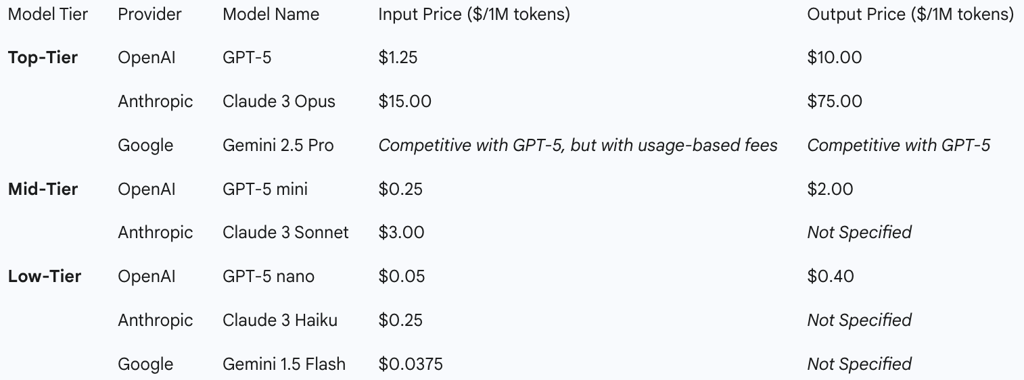

4.1 The Price on the Tin: Deconstructing the GPT-5 Offering

OpenAI has released GPT-5 with a multi-tiered pricing structure for both API developers and direct consumers, segmenting the market by capability and cost.

For API users, the GPT-5 family is designed to cater to a range of use cases. The flagship gpt-5 model is priced at $1.25 per million input tokens and $10.00 per million output tokens. For less demanding or more cost-sensitive applications, gpt-5-mini is available at a significantly lower rate of $0.25/$2.00, while gpt-5-nano, optimized for high-volume, low-latency tasks, is priced at just $0.05/$0.40.

For consumers using ChatGPT, access is also tiered. Free users can access the base GPT-5 system, subject to usage limits. Subscribers to the $20 per month ChatGPT Plus plan receive higher usage caps and access to enhanced modes like "Thinking." Finally, users of the premium "Pro" tier gain access to the most powerful variant, GPT-5 Pro, which features extended reasoning capabilities for the most complex tasks.

4.2 A Competitive Battlefield: Pricing as a Strategic Weapon

When placed in the context of the broader market, OpenAI's pricing for GPT-5 is revealed to be a strategic weapon. The pricing is described by industry observers as "aggressively competitive" and has sparked concerns of a looming LLM price war.

This strategy is evident across all tiers. The main gpt-5 model's pricing significantly undercuts its primary competitor, Anthropic's top-tier Claude 3 Opus, which is priced at $15 per million input tokens and $75 per million output tokens. At the other end of the spectrum, the low-cost

gpt-5-nano is priced even more cheaply than Google's budget-friendly Gemini Flash models. This aggressive posture signals a clear intent to compete on price at every level of the market, putting immense pressure on rivals. This is not the pricing of a company looking to recoup its multi-billion-dollar R&D investment in the short term; it is the pricing of a well-capitalized market leader aiming to make its product the default choice for developers and enterprises.

The release of a full family of models (Pro, mini, nano) simultaneously is a sophisticated market segmentation strategy designed to preemptively counter competitors. A rival can no longer easily carve out a niche by offering only a "cheaper, faster" model, because gpt-5-nano already occupies that space at a highly competitive price point. Similarly, it is difficult to compete on a "balanced" enterprise model when the standard gpt-5 and gpt-5-mini already target that segment. This comprehensive offering forces competitors to engage in a multi-front war of attrition, a battle that OpenAI, with its vast funding and infrastructure, is uniquely positioned to win.

4.3 The Business Imperative: Balancing Burn Rate with Market Domination

This aggressive pricing strategy is made possible by OpenAI's unique financial position. The company operates at a significant loss, with a projected cash burn of $8 billion in 2025 against revenues of $13 billion, and an estimated annual spend that may be as high as $28 billion. It is heavily reliant on its substantial venture capital funding and its deep strategic partnership with Microsoft to sustain these operations.

This financial structure allows OpenAI to pursue a strategy of strategic price compression. The company is leveraging its massive fixed costs—the multi-billion-dollar investments in compute, data, and R&D—to build a system that has a lower marginal cost per query. It then passes these efficiencies directly to the market through low prices, even if it means deepening its operational losses. This puts immense pressure on less-funded competitors who lack the scale of investment and therefore cannot match these prices without destroying their own financial viability. In this sense, the very "expensiveness" of building GPT-5 is what enables OpenAI to make it strategically "cheap" to use.

This strategy is deployed against the backdrop of an AI market experiencing explosive growth, projected to reach $1.81 trillion by 2030, with 87% of organizations believing AI provides a critical competitive advantage. OpenAI is willing to endure a high cash burn rate in the short term to capture the dominant share of this rapidly expanding and strategically vital market.

Conclusion & Strategic Outlook

The question of why GPT-5 is expensive cannot be answered with a single number. Its cost is the product of a complex interplay between the escalating physics of technological progress and the calculated economics of market domination. The analysis presented in this report synthesizes four primary cost pillars to provide a holistic answer.

First, the foundational training cost is a direct consequence of operating at the frontier of AI development, a domain governed by exponential scaling laws. Each generational leap demands an order-of-magnitude increase in investment across compute, data, and elite talent, with GPT-5's multi-billion-dollar price tag representing the current high-water mark in a technological arms race with immense barriers to entry.

Second, the perpetual cost of inference is the dominant long-term economic challenge. GPT-5's complex, multi-model architecture is an expensive R&D investment made precisely to mitigate this ongoing operational expense. This creates an architectural paradox: OpenAI spent more to build a system that is ultimately cheaper to run at a global scale.

Third, the hidden costs of safety and alignment represent a growing "Safety Tax" on frontier model development. As capabilities increase, the potential for harm escalates, requiring a super-linear investment in resource-intensive processes like RLHF and red teaming to ensure the model is safe, reliable, and commercially viable.

Finally, GPT-5's market price is not a simple reflection of these underlying costs. It is a strategically calibrated weapon in a hyper-competitive market. By leveraging its massive capital advantage to absorb operational losses, OpenAI has priced GPT-5 aggressively to undercut rivals, accelerate the commoditization of advanced AI, and consolidate its position as the market's central platform.

In conclusion, GPT-5 is expensive to build because it represents the pinnacle of a demanding and resource-intensive technological paradigm. However, its price to use is a strategic decision designed to secure market leadership. The future economics of the AI industry will likely be defined by this fundamental tension: the astronomical cost of innovation at the frontier versus the strategic imperative to drive down the cost of access. For competitors, investors, and enterprises, navigating this landscape will require a clear understanding that in the race for AI supremacy, the ability to fund the future is the ultimate competitive advantage.