What is Cloud AutoML Video Intelligence Object Detection in GCP?

The Cloud AutoML Video Intelligence Object Detection in GCP is a game-changer 🌟. This tool magnifies the power of visual content 🎥, boosting engagement and delivering deep insights 📈. Truly, it's like having a Swiss Army knife 🛠️ for all your video analysis needs 🎯.

Cloud AutoML Video Intelligence Object Detection, now a core component of the Vertex AI platform, represents a significant evolution in Google Cloud's artificial intelligence services. This service is a sophisticated, high-level tool designed to democratize the power of computer vision for a broad audience, including those with minimal machine learning expertise. At its core, the service enables the creation of custom machine learning models to identify and track user-defined objects within video content.

The service's value proposition is centered on its ability to solve highly specific, niche business problems that are not addressed by off-the-shelf, pre-trained models. This is achieved by automating the complex, time-consuming aspects of the machine learning workflow, such as data preprocessing and hyperparameter tuning. It provides a streamlined, low-code interface for model development, from data ingestion to deployment. The seamless integration with other Google Cloud services, such as Cloud Storage and BigQuery, establishes a unified and scalable MLOps pipeline.

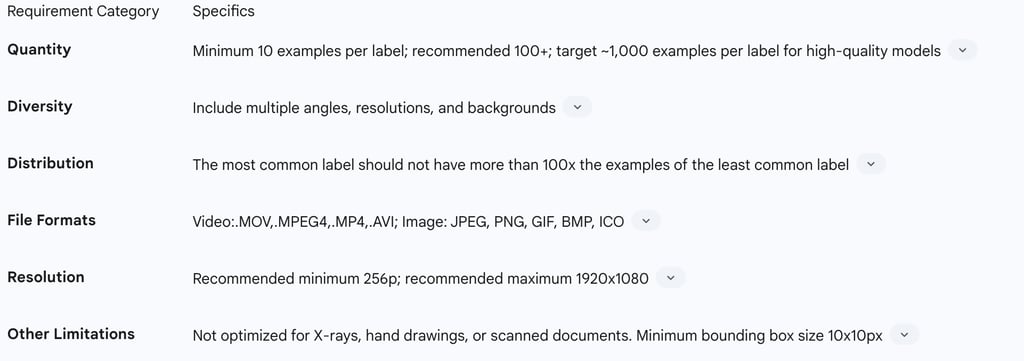

While the platform automates many technical steps, the success and cost-effectiveness of a project are overwhelmingly dependent on the quality and preparation of the training data. This report provides a detailed examination of the service, including its key capabilities, a practical guide to the model lifecycle, and a critical analysis of its strategic applications, technical limitations, and commercial considerations. The analysis concludes that for organizations with a well-defined business problem and access to high-quality, annotated video data, this service is a powerful and efficient solution for unlocking unique value from vast video libraries.

Introduction to Google Cloud Video AI: A Unified Platform

Google Cloud Platform offers a suite of services for video analysis, collectively known as Video AI. This suite is composed of different tools that cater to varying levels of technical expertise and project requirements. Understanding the specific components and their relationship is essential for selecting the correct solution for a given problem. The service formerly known as Cloud AutoML Video Intelligence is a specialized part of this ecosystem, now consolidated into the unified Vertex AI platform.

2.1 Defining the Core Concepts

To grasp the full functionality of the service, it is helpful to define three key concepts:

AutoML (Automated Machine Learning): This is a set of techniques and tools that automate the machine learning workflow, from data preprocessing to model selection and hyperparameter tuning. The core purpose of AutoML is to make machine learning accessible to a wider audience, including those who are not data scientists. It removes the need for specialized knowledge and manual configuration of complex models.

Video Intelligence: This is the overarching Google Cloud service that applies machine learning to analyze video content. It is capable of extracting rich metadata at the video, shot, or frame level, making videos searchable and discoverable. The service offers a pre-trained API that can recognize over 20,000 entities, including objects, places, and actions.

Object Detection and Tracking: This is a specific computer vision task that identifies and locates objects within a digital image or video. Unlike image labeling, which assigns a tag to an entire image, object detection assigns a label to a specific region of an image and draws a bounding box around it. When applied to video, this capability is extended to "object tracking," which follows the movement of a detected object across a series of frames.

2.2 Navigating the Product Suite: Pre-trained API vs. Custom AutoML

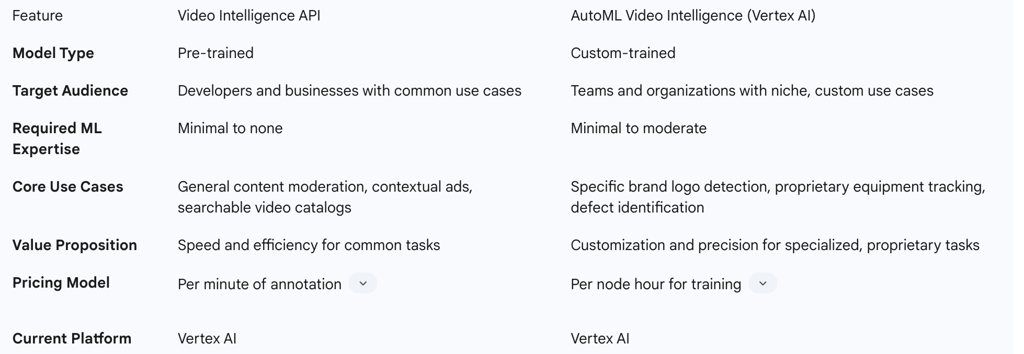

The Google Cloud Video AI platform offers two primary pathways for video analysis, each designed for a distinct set of use cases. The first is the Video Intelligence API, which uses pre-trained models, and the second is AutoML Video Intelligence (now on Vertex AI), which is used to train custom models.

The Video Intelligence API provides a "plug-and-play" solution with pre-trained models that automatically recognize a vast number of objects, places, and actions. This offering is highly efficient and ideal for common use cases, such as general content moderation, creating searchable video catalogs, or enabling contextual advertising by identifying common entities. A media company like CBS Interactive, for example, can use this API to generate video metadata by simply plugging it into their existing encoding framework.

In contrast, AutoML Video Intelligence, now integrated into the Vertex AI platform, is the tool for training custom models with user-defined labels. This is an essential distinction. While the Video Intelligence API is excellent for recognizing "a car" or "a person," AutoML is needed when a project requires the identification of a specific, proprietary object, such as a company's brand logo, a unique piece of manufacturing equipment, or a particular type of building. The Vertex AI platform, with its graphical interface, is designed to make this custom model training accessible even to individuals with minimal machine learning experience.

The consolidation of legacy services like Firebase ML's AutoML Vision Edge and the original AutoML Video Intelligence into the broader Vertex AI platform is a critical development. This is more than a simple product name change; it represents a strategic shift towards a single, unified MLOps platform. By moving from a collection of specialized, standalone tools to a comprehensive ecosystem, Google provides a seamless workflow for every stage of the model lifecycle, from data preparation to deployment and management. This integrated approach eliminates data transfer overhead, simplifies permissions, and ensures that future innovations will be available on a single, coherent platform. This is a significant architectural advantage for enterprises seeking a scalable, end-to-end solution.