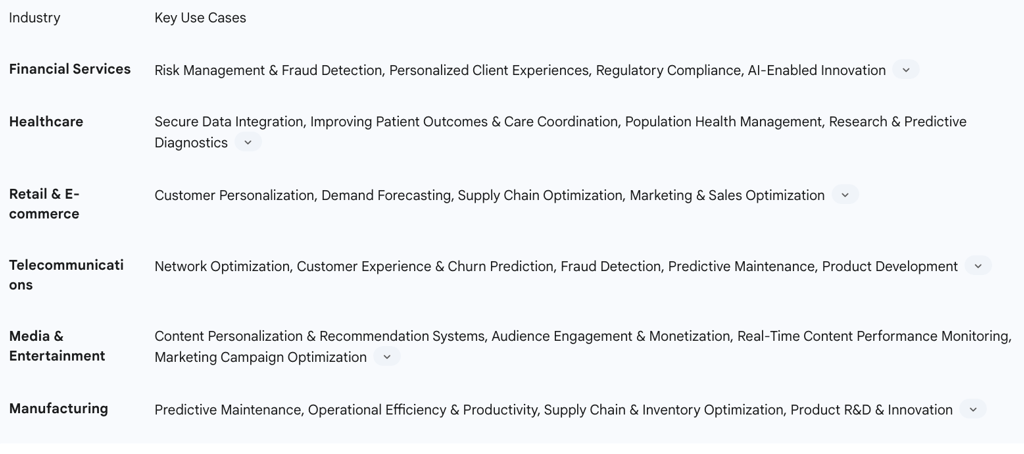

Financial Services: Enhancing Risk Management, Fraud Detection, and Personalized Client Experiences

Financial institutions, including banks and investment firms, are among the leading adopters of data lakes due to the industry's data-intensive nature and stringent regulatory requirements. Data lakes provide a robust platform for managing vast amounts of complex financial data, enabling advanced analytics crucial for maintaining stability and driving growth.

A primary application is in risk management and fraud detection. Financial entities critically rely on data lakes to develop sophisticated models for customer behavior analysis. This capability allows them to predict and mitigate risks such as credit defaults or fraud by combining structured financial records with unstructured data like web activity and real-time market feeds. The ability to process real-time market data facilitates immediate assessment of transaction fraud risks and other financial anomalies, ensuring agile decision-making in highly dynamic markets.

Data lakes are also instrumental in delivering personalized client experiences. They consolidate diverse customer data from various touchpoints, including CRM platforms, social media analytics, marketing platforms (e.g., buying history), and incident tickets. This unified view empowers businesses to understand their most profitable customer cohorts, identify the root causes of customer churn, and design highly tailored promotions or rewards to increase loyalty and retention. This comprehensive understanding translates into personalized insights, custom client-facing tools, and optimized customer journeys through better segmentation and "next-best-action" recommendations.

Given the stringent regulatory environment in finance, data lakes play a vital role in regulatory compliance. Standards like FINRA's Know Your Customer and the EU's AI Act necessitate the collection and secure management of extensive client data. Data lakes provide a unified and flexible platform that enhances data governance, metadata management, and data lineage tracking, supporting robust data operations, auditing, and monitoring functions, thereby ensuring compliance while adapting to evolving regulations.

Furthermore, data lakes serve as a centralized, secure repository for the vast amounts of financial information firms are required to collect, laying the groundwork for AI-enabled innovation. This data foundation is critical for training advanced AI models, enabling automated decisions, and revealing deeper understandings that human analysis might miss. Examples include using alternative data sets, such as phone bills and utility payments, to enhance credit decision-making and generate new business understandings.

The benefits derived include enabling real-time analysis of streaming market data, which facilitates swift decision-making and highly effective risk management. They provide deeper, holistic understandings of client financial situations, fostering greater transparency and trust, which are paramount in financial services. Data lakes also improve operational efficiency and data accuracy by breaking down data silos between disparate software systems. Ultimately, they unlock possibilities for uncovering new revenue streams and capturing additional market share by leveraging comprehensive planning data.

Healthcare: Improving Patient Outcomes, Research, and Operational Efficiency

The healthcare industry is increasingly reliant on data-driven decision-making to improve patient outcomes and streamline clinical workflows. Data lakes are customized data repositories that are growing in popularity within healthcare, designed to break down data silos and provide actionable understandings.

A key application is secure data integration. Healthcare data lakes integrate copious, broad, and substantial data from a multitude of sources, including electronic health records (EHRs), claims data, medical imaging, patient telemetry, genomic research, patient-reported data, family medical history, and data from smart devices. This integration provides a single source of truth for analyzing complex health issues, effectively breaking down traditional data silos. This comprehensive data strategy provides an ideal, secure platform to assimilate vast volumes of data with widely varying content, which can then be sorted to make it more accessible and actionable.

This comprehensive data integration directly contributes to improving patient outcomes and care coordination. By merging and analyzing diverse patient data, data lakes generate a holistic view of the patient. This enables data-driven decisions at the point of care, facilitates quicker judgments during treatment encounters, and significantly improves overall care coordination. Real-time monitoring of patient vital signs and immediate notifications to providers about changes in a patient's condition are also enabled, leading to more responsive and effective care delivery.

Data lakes also support population health management by enabling the tracking of population and patient levels. This drives quality measure improvement, ensures chronic conditions are accurately assessed and coded annually, and helps mitigate the impacts of social determinants of health (SDOH) on care outcomes by highlighting their importance.

For research and predictive diagnostics, data lakes facilitate streamlined medical research and enable predictive diagnostics and personalized treatment plans. They achieve this by allowing the exploration of previously inaccessible unstructured data, such as provider notes and clinical trial information, and supporting the development of advanced predictive statistical models for disease detection and management.

The data lake's unique capacity to store all types of data (structured, semi-structured, unstructured) from any source (CRM, ERP, IoT devices, social media, clinical systems, maintenance logs) in a single, centralized repository directly enables the creation of a comprehensive, "360-degree view" of a key entity, such as a patient. This is because it systematically breaks down the traditional data silos that previously fragmented information and prevented the effective correlation of disparate data points. For patients, this leads to better health outcomes, more effective care coordination, and personalized treatment plans. By unifying previously fragmented data, the data lake empowers organizations to shift from siloed, partial analyses to integrated, comprehensive strategies, leading to more effective decision-making, a deeper understanding of complex systems, and the ability to proactively address challenges and seize opportunities.

The benefits derived from data lakes in healthcare include providing a secure and compliant solution for handling electronically protected health information (ePHI), adhering to strict regulatory requirements like HIPAA, through robust administrative, physical, and technical measures. They increase the speed of data access and automation, enabling faster understandings from acquired data and the quick deployment of relevant care interventions. Data lakes reduce the time and effort required for data preparation, allowing healthcare organizations to focus on higher-value activities such as data analysis and interpretation, rather than manual data cleansing. Finally, they uncover new understandings into patient behavior, disease patterns, and treatment outcomes through the application of advanced analytics techniques like machine learning and predictive modeling.

Retail & E-commerce: Driving Customer Personalization, Demand Forecasting, and Supply Chain Optimization

The retail and e-commerce sectors are highly competitive and customer-centric, making data lakes indispensable for understanding consumer behavior, optimizing operations, and delivering tailored experiences.

A core application is customer personalization. Retail chains and e-commerce platforms leverage data lakes to integrate a wide array of customer data, including purchase history, social media activity, website browsing behavior, clickstream data, and customer reviews. This unified data enables the creation of highly tailored shopping experiences, personalized product recommendations, and targeted marketing campaigns for individual customers. Netflix serves as a prime example, processing over 500 million hours of daily content consumption data to deliver hyper-personalized content suggestions, demonstrating the power of data lakes in driving user engagement.

Data lakes also significantly enhance demand forecasting accuracy by integrating historical sales data, real-time inventory updates, and external factors such as weather patterns or seasonal trends. This allows for more precise inventory management and promotional planning.

For supply chain optimization, retailers utilize data lakes to centralize customer data, inventory levels, and sales metrics, enabling teams to track preferences, manage supply chains efficiently, and optimize pricing strategies from a single source. Data lakes provide real-time visibility into supply chain operations by integrating data from suppliers, transportation networks, and warehouses, leading to optimized inventory management, improved delivery routes, and enhanced transportation efficiency.

In marketing and sales optimization, by understanding how a buyer navigated to a company's website, where customers live, and their demographic information, companies can significantly optimize their marketing and sales strategies, leading to more effective campaigns and increased conversions.

The benefits derived include the elimination of data silos by centralizing critical business information, making it accessible across teams, as exemplified by Amazon's centralization of customer data, inventory, and sales metrics. Data lakes enable scalable and cost-effective storage of vast user data, viewing habits, and streaming behavior, as highlighted by Netflix's successful implementation. This drives personalized recommendations and content creation, significantly increasing user engagement and customer retention. Furthermore, data lakes facilitate rapid prototyping and sentiment analysis from diverse data sources, allowing for agile adaptation to market trends.

Telecommunications: Optimizing Networks, Reducing Churn, and Detecting Fraud

The telecommunications industry, characterized by massive data volumes from network operations, customer interactions, and IoT devices, heavily relies on data lakes to enhance service quality, manage customer relationships, and ensure operational efficiency.

A crucial application is network optimization. Telecommunications companies leverage data lakes to analyze vast amounts of historical traffic data, bandwidth usage, and geographical trends. This enables them to predict periods of high network congestion, proactively manage network resources, deploy infrastructure more effectively, and strategically expand coverage areas to maintain service quality.

Data lakes are also vital for customer experience enhancement and churn prediction. By analyzing customer usage patterns, feedback, and overall behavior, data lakes help identify customers who are at risk of churning. This allows companies to implement targeted retention strategies and personalize marketing and customer service interactions, leading to improved customer satisfaction and reduced churn rates.

Fraud detection and prevention represent another significant use case. Fraudulent activities are a major concern in the telecom industry. Data lakes enable advanced analytics to detect and prevent various forms of fraud, such as unauthorized service usage, SIM card cloning, and identity theft, by combining structured financial records with unstructured web activity and real-time data streams.

For predictive maintenance, maintaining complex network infrastructure is crucial for uninterrupted service. Data lakes facilitate predictive maintenance by analyzing high-velocity data from network equipment. This helps predict potential equipment failures and allows for proactive scheduling of maintenance, minimizing downtime and ensuring service continuity.

Finally, for product development and innovation, analyzing detailed customer usage patterns provides deep understandings into preferences and behaviors. This understanding guides the development of new products and services that are more aligned with customer needs, optimizes pricing strategies, and measures campaign effectiveness.

The benefits derived include achieving significant cost reduction in data management, with one telco reporting over a 20x reduction in costs related to their big data infrastructure after implementing a data lake solution. Data lakes accelerate speed to market for new services and significantly improve developer productivity, empowering innovation. They enhance the accuracy and timeliness of financial reporting, aiding in better strategic decision-making. Overall, data lakes improve operational efficiency and reduce business risk by enabling data-driven understandings. They also provide the necessary foundation for incorporating artificial intelligence and machine learning workloads, including the development of generative AI applications.

Media & Entertainment: Powering Content Personalization and Audience Engagement

The media and entertainment industry thrives on understanding audience preferences and delivering engaging content. Data lakes are central to achieving these objectives by providing the infrastructure for massive-scale data processing and advanced analytics.

A primary use case is content personalization and recommendation systems. Companies offering streaming music, radio, and podcasts, as well as video streaming services, heavily utilize data lakes. They collect and process vast amounts of data on customer behavior, viewing habits, geographic preferences, and interaction patterns. This rich dataset is then used to enhance recommendation algorithms, leading to hyper-personalized content suggestions that increase user consumption and engagement. Netflix is a prime example, processing over 500 million hours of daily content consumption data to power its revolutionary recommendation engine.

For audience engagement and monetization, data lakes enable deep analysis of audience behavior, real-time content performance indicators, and content popularity forecasts. This allows media companies to optimize pricing strategies, improve ad placements, and refine subscription models for maximum revenue. Data lakes also facilitate real-time content performance monitoring by processing vast types of advertising data in real-time, providing immediate understandings into content performance across different markets and media platforms.

In marketing campaign optimization, through the analysis of extensive datasets, media companies gain a deeper understanding of customer needs and preferences. This allows them to deliver content that resonates with the audience and to design more effective and targeted marketing campaigns.

The benefits include significantly increasing revenue through improved recommendation systems, leading to higher user engagement and increased opportunities for ad sales. Data lakes enhance the overall user experience and improve customer retention through highly tailored content suggestions and personalized interactions. They provide the capability to handle massive-scale streaming workloads and efficiently store and process diverse unstructured data types, including video and audio files. Ultimately, data lakes enable media organizations to operationalize their analytics efforts, driving better outcomes with big data and artificial intelligence.

Manufacturing: Leveraging IoT for Predictive Maintenance and Operational Insights

The manufacturing sector, with its complex machinery and intricate supply chains, generates enormous volumes of data, particularly from Internet of Things (IoT) devices. Data lakes are crucial for transforming this raw data into actionable understandings that drive efficiency and innovation.

A primary use case is predictive maintenance. Manufacturing firms heavily leverage data lakes to analyze high-velocity IoT data streaming from connected devices, sensors, and industrial IIoT equipment. This real-time analysis helps predict potential equipment failures, optimize maintenance schedules, and minimize costly downtime by identifying issues before they escalate.

For operational efficiency and productivity, manufacturing data lakes consolidate data from disparate sources across the plant, including IIoT devices, sensors, maintenance logs, customer orders, GPS tracking for supply chain shipments, and market fluctuations. By overcoming data silos, this unified data enables advanced analytics (AI/ML) to increase visibility into plant operations, speed up root cause analysis, and optimize production processes for greater efficiency.

In supply chain and inventory optimization, integrating IoT sensor data with supply chain and financial data within the data lake provides a holistic view of operations. This enables manufacturers to optimize production processes, manage inventory more effectively, and reduce overall costs across the supply chain.

Data lakes also provide the comprehensive data foundation necessary to drive product R&D and innovation for greater innovation. They also power improved forecasting accuracy, which is critical for better business decision-making in a competitive manufacturing landscape.

The inherent capability of data lakes to ingest and process high-velocity streaming data in real-time directly enables organizations to transition from reactive problem-solving to proactive and even predictive operational models. In manufacturing, real-time IoT data enables predictive maintenance

before equipment failures occur. This immediate feedback loop is not just about speed, but about enabling continuous, adaptive decision-making. In today's highly dynamic and competitive global markets, the speed at which an organization can derive understandings and act upon them translates directly into competitive agility. Industries that can leverage real-time data to optimize supply chains dynamically or predict equipment failures before they cause costly downtime gain a significant and often decisive advantage. This capability allows businesses to respond to market shifts, evolving customer needs, and emerging operational issues with unprecedented speed, thereby minimizing losses, maximizing emergent opportunities, and ultimately driving superior business outcomes and market leadership.

The benefits derived include effectively overcoming data silos by integrating diverse data sources from across the manufacturing plant, ensuring all necessary data is accessible for advanced analytics. Data lakes significantly increase productivity and efficiency within the plant by enabling data-driven optimization of workflows and processes. They contribute to more accurate forecasting and provide critical understandings that guide better business decision-making. Data lakes foster innovation within the manufacturing process and help organizations sharpen their competitive edge in the market. Finally, they provide a robust foundation for implementing advanced technologies such as predictive monitoring, digital twins, and inventory optimization.

Cross-Industry Applications and the Future of Data Lakes

Beyond specific industry applications, data lakes facilitate broader trends that are reshaping how organizations leverage data. The proliferation of IoT data and the evolution towards data lakehouses are two significant developments that underscore the enduring strategic importance of data lakes.

The Role of IoT Data

The Internet of Things (IoT) has emerged as a pervasive source of massive, high-velocity, and diverse data, presenting both opportunities and challenges for organizations. Data lakes are uniquely positioned to handle this influx of information. Hardware sensors generate enormous amounts of semi-structured to unstructured data about the physical world, and data lakes serve as a central repository for this vast influx, making it available for future analysis.

Across industries, IoT data, ingested into data lakes, enables transformative applications. In manufacturing, it powers predictive maintenance by monitoring machine health in real-time. In telecommunications, it aids in optimizing network performance and predicting equipment failures. In retail, IoT data from smart devices can contribute to understanding in-store customer behavior and optimizing store layouts. Healthcare leverages IoT data from wearables and medical devices for continuous patient monitoring and personalized care. The ability of data lakes to efficiently capture, store, and process these continuous streams of data, regardless of their format, makes them an essential component of modern IoT data management strategies. This capability allows businesses to monitor operations, predict maintenance needs, and develop new services based on real-world data, driving operational efficiencies and new revenue streams.

Emerging Trends: The Rise of Data Lakehouses

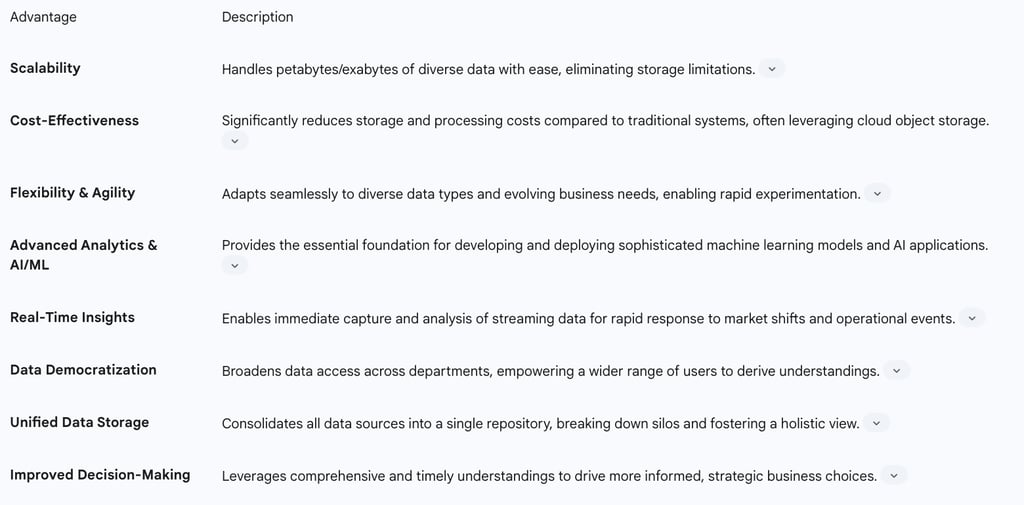

While traditional data lakes offer unparalleled flexibility and cost-effectiveness, they have historically faced challenges related to data quality, governance, and performance for certain analytical workloads. These limitations have given rise to the "data lakehouse" architecture, an emerging trend that seeks to combine the best attributes of data lakes and data warehouses.

A data lakehouse builds a transactional storage layer directly on top of the data lake, often leveraging open formats like Delta Lake, Apache Iceberg, or Apache Hudi. This layer introduces key features traditionally associated with data warehouses, such as ACID (atomicity, consistency, isolation, and durability) transactions for data reliability, streaming integrations, and advanced features like data versioning and schema enforcement.

The data lakehouse model addresses the drawbacks of traditional data lakes, transforming them from potential "data swamps" into highly reliable, high-performance data platforms. This convergence allows for a unified data platform that can handle all data types and workloads, from raw data ingestion and complex machine learning to traditional business intelligence and operational reporting. It simplifies analytics workflows, improves data quality and governance, and enhances performance, making it easier for a broader range of users—including data analysts, data scientists, and machine learning engineers—to access and derive value from the data. The rise of data lakehouses signifies a maturation of the data lake concept, promising even greater utility and broader adoption across industries by providing a more robust, integrated, and future-proof data management solution.